How to implement Decision Trees from scratch with Python


In the fourth lesson of the Machine Learning from Scratch course, we will learn how to implement Decision Trees. This one is a bit longer due to all the details we need to implement, but we will go through it all in less than 40 minutes.

Welcome to the Machine Learning from Scratch course by AssemblyAI.

Thanks to libraries like Scikit-learn we can use most ML algorithms with a couple of lines of code. But knowing how these algorithms work inside is very important. Implementing them hands-on is a great way to achieve this.

And most of them are easier to implement than you’d think.

In this course, we will learn how to implement these 10 algorithms.

We will quickly go through how the algorithms work and then implement them in Python with the help of NumPy.
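As a rough illustration of the kind of building block a from-scratch decision tree needs, here is a minimal sketch of choosing a split by information gain. It assumes an entropy-based criterion, which this description does not confirm the lesson uses. The function names `entropy`, `information_gain`, and `best_threshold` are hypothetical, and the sketch sticks to the standard library rather than NumPy so it runs without dependencies.

```python
import math
from collections import Counter


def entropy(labels):
    """Shannon entropy (in bits) of a sequence of class labels."""
    n = len(labels)
    return -sum((c / n) * math.log2(c / n) for c in Counter(labels).values())


def information_gain(values, labels, threshold):
    """Entropy reduction from splitting one feature at `values <= threshold`."""
    left = [y for x, y in zip(values, labels) if x <= threshold]
    right = [y for x, y in zip(values, labels) if x > threshold]
    if not left or not right:
        # A split that leaves one side empty separates nothing.
        return 0.0
    n = len(labels)
    weighted = (len(left) / n) * entropy(left) + (len(right) / n) * entropy(right)
    return entropy(labels) - weighted


def best_threshold(values, labels):
    """Try each distinct feature value as a threshold; return (threshold, gain)."""
    candidates = ((t, information_gain(values, labels, t)) for t in sorted(set(values)))
    return max(candidates, key=lambda pair: pair[1])


# Toy data (made up for illustration): one feature, two classes.
feature = [1, 2, 3, 4]
classes = [0, 0, 1, 1]
threshold, gain = best_threshold(feature, classes)
print(threshold, gain)
```

A full tree would apply this search to every feature, split the data on the best one, and recurse on each side until a stopping rule such as a maximum depth is reached.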


#MachineLearning #DeepLearning


How to Implement Decision Trees in Python (Train, Test, Evaluate, Explain)

Let’s Write a Decision Tree Classifier from Scratch - Machine Learning Recipes #8

Decision and Classification Trees, Clearly Explained!!!

Decision Tree Classification Clearly Explained!

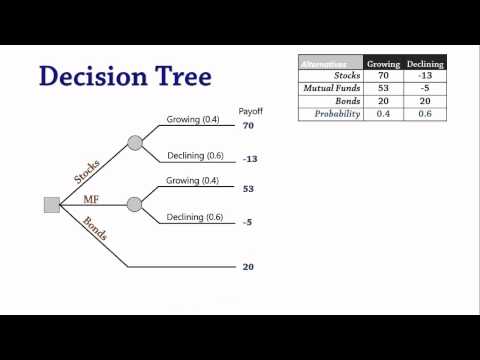

Decision Analysis 3: Decision Trees

Machine Learning Tutorial Python - 9 Decision Tree

How Do Decision Trees Work (Simple Explanation) - Learning and Training Process

PyTorch Bootcamp Class #14 | Decision Tree in Machine Learning | Pytorch Course | Pytorch Tutorial

Part 3- Decision Tree And Post Prunning Practical Implementation In Hindi | Krish Naik

How to Create a Decision Tree | Decision Making Process Analysis

Implement Decision Tree in Python using sklearn|Implementing decision tree in python

Decision Tree: Important things to know

How to MAKE (and USE) Decision Tree Analysis in Excel

How to Use Decision Trees to Systemize Your Business (+ Mistakes to Avoid)

What is Random Forest?

Decision Tree In Machine Learning | Decision Tree Algorithm In Python |Machine Learning |Simplilearn

1. Decision Tree | ID3 Algorithm | Solved Numerical Example | by Mahesh Huddar

Building Decision Tree Models using RapidMiner Studio

Implement Decision Trees from Scratch - Part 1

Decision Tree Classification in Python (from scratch!)

Demystifying Decision Trees in Machine Learning: A Visual Guide

Overfitting in Decision Trees #shorts #datascience

Build a Decision Tree with TreeAge Pro
