
Unizor - Matrix Multiplication - General Case


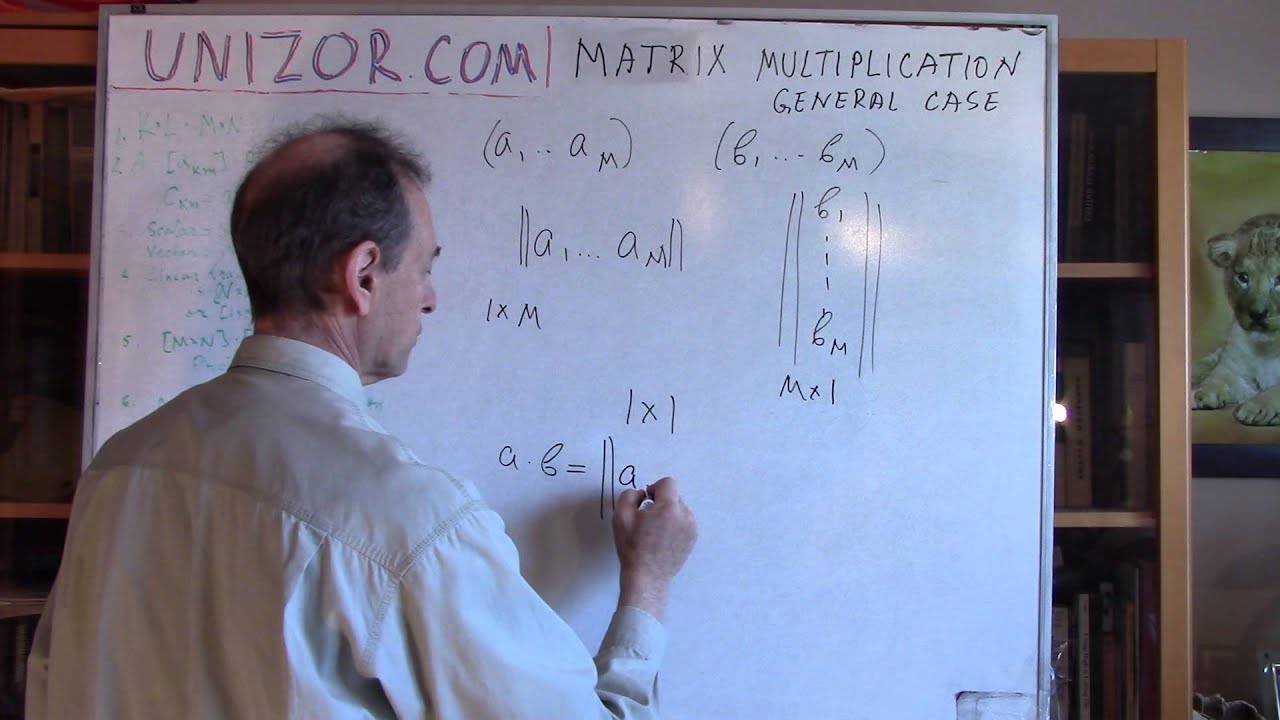

The operation of matrix multiplication is defined for a pair of matrices (let's call them "left" and "right") under a single requirement: the number of elements in each row of the left matrix (the left operand) must equal the number of elements in each column of the right matrix (the right operand). In other words, if the left operand has size KxL (K rows and L columns) and the right operand has size MxN (M rows and N columns), then L must equal M. The reason for this requirement is that each element of the product is a scalar product of a row-vector of the left operand with a column-vector of the right operand, as in the examples above, and the scalar product is defined only for two vectors of the same dimension.
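The size rule can be checked before any arithmetic is done. The sketch below is illustrative only: it assumes a matrix size is given as a `(rows, columns)` tuple, and the helper names `can_multiply` and `product_shape` are hypothetical.

```python
def can_multiply(left_shape, right_shape):
    """True if a K x L left operand can multiply an M x N right operand, i.e. L == M."""
    return left_shape[1] == right_shape[0]


def product_shape(left_shape, right_shape):
    """Return the K x N size of the product, or raise ValueError if L != M."""
    if not can_multiply(left_shape, right_shape):
        raise ValueError(
            f"cannot multiply {left_shape[0]}x{left_shape[1]} by "
            f"{right_shape[0]}x{right_shape[1]}: {left_shape[1]} != {right_shape[0]}"
        )
    # K rows come from the left operand, N columns from the right operand.
    return (left_shape[0], right_shape[1])


print(product_shape((2, 3), (3, 4)))  # (2, 4)
print(can_multiply((2, 3), (2, 3)))   # False
```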

Now, if a KxM matrix $A=[a_{ij}]$ is multiplied by an MxN matrix $B=[b_{mn}]$, the result, by definition, is a KxN matrix $C=[c_{kn}]$ whose elements are calculated as scalar (dot) products

$$c_{kn} = a_{k*} \cdot b_{*n} = \sum_{m=1}^{M} a_{km} b_{mn}$$

where $a_{k*}$ is the k-th row-vector of the matrix A and $b_{*n}$ is the n-th column-vector of the matrix B.
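The definition translates almost word for word into code. This is a minimal sketch, assuming matrices are stored as Python lists of rows; the helpers `row`, `column`, `dot` and `matmul` are hypothetical names chosen to mirror $a_{k*}$, $b_{*n}$ and the scalar product.

```python
def row(a, k):
    """k-th row-vector a_{k*} (0-based index)."""
    return a[k]


def column(b, n):
    """n-th column-vector b_{*n} (0-based index)."""
    return [r[n] for r in b]


def dot(u, v):
    """Scalar product, defined only for vectors of equal dimension."""
    if len(u) != len(v):
        raise ValueError("scalar product needs vectors of the same dimension")
    return sum(x * y for x, y in zip(u, v))


def matmul(a, b):
    """C = A·B for a K x M matrix A and an M x N matrix B: c_kn = a_{k*} · b_{*n}."""
    if len(a[0]) != len(b):
        raise ValueError("columns of A must equal rows of B")
    k_rows, n_cols = len(a), len(b[0])
    return [[dot(row(a, k), column(b, n)) for n in range(n_cols)] for k in range(k_rows)]


A = [[1, 2, 3],
     [4, 5, 6]]          # 2 x 3
B = [[7, 8],
     [9, 10],
     [11, 12]]           # 3 x 2
print(matmul(A, B))      # [[58, 64], [139, 154]]
```

For example, $c_{11} = (1, 2, 3) \cdot (7, 9, 11) = 7 + 18 + 33 = 58$, and the 2x3 by 3x2 product is 2x2, as the size rule predicts.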

Finally, let's recall how we arrived at the formula for the matrix product in the 2x2 and 3x3 cases. We wanted two consecutive linear transformations, represented by matrices A and B (first A, then B), to be replaced by one transformation represented by a matrix C, which we called the product of the two initial matrices. That is, C was, by definition, the result of a new matrix operation B·A. All we needed was to find such a matrix C for any two given matrices A and B, which we did, and the formula for each element of the matrix C was

$$c_{kn} = b_{k*} \cdot a_{*n}$$

where $b_{k*}$ is the k-th row-vector of the matrix B and $a_{*n}$ is the n-th column-vector of the matrix A.
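The element formula follows from writing out both transformations by coordinates. A short derivation, assuming (as in the 2x2 and 3x3 cases) that A and B are NxN and that $w = Av$ is transformed further into $u = Bw$:

```latex
u_k = \sum_{m=1}^{N} b_{km} w_m
    = \sum_{m=1}^{N} b_{km} \sum_{n=1}^{N} a_{mn} v_n
    = \sum_{n=1}^{N} \Big( \sum_{m=1}^{N} b_{km} a_{mn} \Big) v_n ,
\qquad\text{so}\qquad
c_{kn} = \sum_{m=1}^{N} b_{km} a_{mn} = b_{k*} \cdot a_{*n}.
```

Swapping the order of the two finite sums is the only step needed; the inner sum is exactly the scalar product of the k-th row of B with the n-th column of A.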

As a result of this definition, we wrote the equivalence of the two transformations by A and B to one transformation by their product B·A as

$$B(Av) = (B \cdot A)v$$
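The equivalence can be checked numerically. In this sketch, the hypothetical `apply` computes a linear transformation coordinate by coordinate, and the hypothetical `composite` builds C from $c_{kn} = b_{k*} \cdot a_{*n}$; the matrices and vector are arbitrary sample values.

```python
def apply(m, v):
    """Linear transformation: output coordinate k is (row k of m) · v."""
    return [sum(m_kj * v_j for m_kj, v_j in zip(m_row, v)) for m_row in m]


def composite(b, a):
    """Matrix C with c_kn = (row k of B) · (column n of A), i.e. C = B·A."""
    cols_a = list(zip(*a))  # columns of A as tuples
    return [[sum(x * y for x, y in zip(row_b, col_a)) for col_a in cols_a] for row_b in b]


A = [[1, 2, 0],
     [0, 1, 3],
     [2, 0, 1]]
B = [[1, 0, 1],
     [2, 1, 0],
     [0, 3, 1]]
v = [1, -1, 2]

lhs = apply(B, apply(A, v))      # transform by A first, then by B
rhs = apply(composite(B, A), v)  # one transformation by B·A
print(lhs, rhs, lhs == rhs)      # [3, 3, 19] [3, 3, 19] True
```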

We now know that a linear transformation of a vector by a matrix amounts to multiplying that matrix by the vector viewed as an Nx1 column matrix. In this light, the formula above is just the associative law of matrix multiplication. So, to derive a reasonable formula for the multiplication of two matrices, we used associativity as a necessary property of this operation, a very reasonable requirement indeed.
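Treating a vector as an Nx1 matrix lets one general product function cover both cases. A minimal sketch, assuming lists of rows; `matmul` and `as_column` are hypothetical helpers, and the sample matrices are arbitrary.

```python
def matmul(a, b):
    """Product of a K x M matrix and an M x N matrix, both stored as lists of rows."""
    if len(a[0]) != len(b):
        raise ValueError("inner dimensions differ")
    cols_b = list(zip(*b))
    return [[sum(x * y for x, y in zip(row_a, col_b)) for col_b in cols_b] for row_a in a]


def as_column(v):
    """View an N-dimensional vector as an N x 1 matrix."""
    return [[x] for x in v]


A = [[1, 2, 0], [0, 1, 3], [2, 0, 1]]
B = [[1, 0, 1], [2, 1, 0], [0, 3, 1]]
v = as_column([1, -1, 2])

print(matmul(B, matmul(A, v)))  # B(Av):  [[3], [3], [19]]
print(matmul(matmul(B, A), v))  # (B·A)v: [[3], [3], [19]]
```

Here the same function that multiplies two 3x3 matrices also performs the transformation of a vector, which is why $B(Av) = (B \cdot A)v$ reads as an instance of associativity.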

In other words, a linear transformation of a vector was viewed as the matrix product of an NxN square matrix and the vector interpreted as an Nx1 matrix, and the fact that a composition of two linear transformations is equivalent to a single transformation by their matrix product is exactly the associative property of multiplication. These considerations lie at the foundation of the definition of matrix multiplication.
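Associativity also holds for the general (non-square) product defined above. The sketch below is a spot check, not a proof: it assumes integer matrices stored as lists of rows, uses the hypothetical helpers `matmul` and `random_matrix`, and compares $(A \cdot B) \cdot C$ with $A \cdot (B \cdot C)$ for random compatible sizes KxL, LxM and MxN (N = 1 covers the column-vector case).

```python
import random


def matmul(a, b):
    """Product of a K x M matrix and an M x N matrix, both stored as lists of rows."""
    if len(a[0]) != len(b):
        raise ValueError("columns of the left operand must equal rows of the right one")
    cols_b = list(zip(*b))
    return [[sum(x * y for x, y in zip(row_a, col_b)) for col_b in cols_b] for row_a in a]


def random_matrix(rows, cols, rng):
    """Matrix of small random integers (exact arithmetic, so == is safe)."""
    return [[rng.randint(-5, 5) for _ in range(cols)] for _ in range(rows)]


rng = random.Random(0)
for _ in range(100):
    k, l, m, n = (rng.randint(1, 4) for _ in range(4))
    A = random_matrix(k, l, rng)  # K x L
    B = random_matrix(l, m, rng)  # L x M
    C = random_matrix(m, n, rng)  # M x N
    assert matmul(matmul(A, B), C) == matmul(A, matmul(B, C))
print("(A·B)·C == A·(B·C) held in all 100 trials")
```

Integer entries are a deliberate choice: with floating-point entries the two groupings can differ by rounding, so an exact equality test would not be appropriate there.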
