LLM Understanding: 1. R. FUTRELL 'Language Models in the Information-Theoretic Science of Language'

The Place of Language Models in the Information-Theoretic Science of Language

Richard Futrell

Language Science, UC Irvine

ISC Summer School on Large Language Models: Science and Stakes, June 3-14, 2024

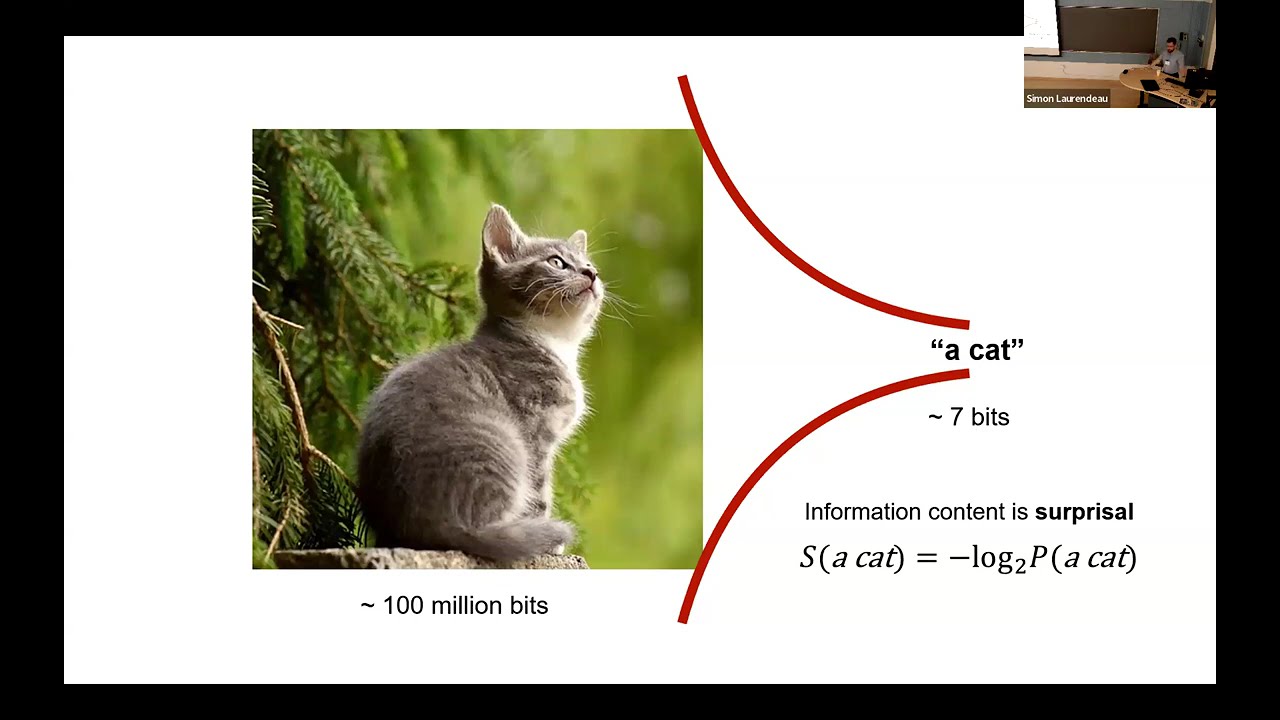

Mon, June 3, 9am-10:30am

Abstract: Language models succeed in part because they share information-processing constraints with humans. These information-processing constraints do not have to do with the specific neural-network architecture nor any hardwired formal structure, but with the shared core task of language models and the brain: predicting upcoming input. I show that universals of language can be explained in terms of generic information-theoretic constraints, and that the same constraints explain language model performance when learning human-like versus non-human-like languages. I argue that this information-theoretic approach provides a deeper explanation for the nature of human language than purely symbolic approaches, and links the science of language with neuroscience and machine learning.

Richard Futrell, Associate Professor in the UC Irvine Department of Language Science, leads the Language Processing Group, studying language processing in humans and machines using information theory and Bayesian cognitive modeling. He also does research on NLP and AI interpretability.

Kallini, J., Papadimitriou, I., Futrell, R., Mahowald, K., & Potts, C. (2024). Mission: Impossible language models. arXiv preprint arXiv:2401.06416.

Futrell, R., & Hahn, M. (2024). Linguistic Structure from a Bottleneck on Sequential Information Processing. arXiv preprint arXiv:2405.12109.

Wilcox, E. G., Futrell, R., & Levy, R. (2023). Using computational models to test syntactic learnability. Linguistic Inquiry, 1-44.
