Redfin Analytics | Python ETL Pipeline with Airflow | Data Engineering Project | Snowpipe | Snowflake | Part 1


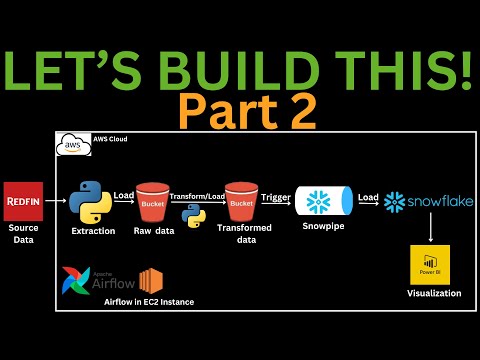

This is Part 1 of the Redfin Real Estate Data Analytics Python ETL data engineering project, built with Apache Airflow, Snowpipe, Snowflake, and AWS services.

In this project, you will learn how to connect to the Redfin Data Center data source and extract real estate data using Python. We will then transform the data using pandas and load it into an Amazon S3 bucket. The raw data will also be loaded into an Amazon S3 bucket.
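The transform step can be sketched as follows. This is only a rough illustration: it uses Python's standard `csv` module instead of pandas so that it runs without extra packages, and the sample rows, column names (`period_begin`, `city`, `median_sale_price`), and cleaning rules are all hypothetical, not taken from the video.

```python
import csv
import io

# Hypothetical sample in tab-separated form; the real Redfin columns may differ.
RAW_TSV = (
    "period_begin\tcity\tmedian_sale_price\n"
    "2023-01-01\tSeattle\t750000\n"
    "2023-01-01\tAustin\t\n"
    "2023-02-01\tSeattle\t760000\n"
)


def transform(raw_text: str) -> list[dict]:
    """Drop rows with a missing price and split the period into year and month."""
    reader = csv.DictReader(io.StringIO(raw_text), delimiter="\t")
    cleaned = []
    for row in reader:
        if not row["median_sale_price"]:
            continue  # skip incomplete records
        year, month, _day = row["period_begin"].split("-")
        cleaned.append(
            {
                "city": row["city"],
                "year": int(year),
                "month": int(month),
                "median_sale_price": float(row["median_sale_price"]),
            }
        )
    return cleaned


def to_csv(rows: list[dict]) -> str:
    """Serialize transformed rows to CSV text, ready to be uploaded to S3."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=list(rows[0].keys()))
    writer.writeheader()
    writer.writerows(rows)
    return buffer.getvalue()


rows = transform(RAW_TSV)
csv_text = to_csv(rows)
print(csv_text)
```

In the actual project the same cleaning would presumably be done on a pandas DataFrame, and the resulting CSV text would be written to the S3 bucket rather than printed.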

As soon as the transformed data lands in the S3 bucket, Snowpipe is triggered and automatically runs a COPY command to load the transformed data into a Snowflake data warehouse table. We will then connect Power BI to the Snowflake data warehouse to visualize the data and obtain insights.
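To make the Snowpipe step more concrete, here is a small sketch that assembles the kind of `CREATE PIPE` statement Snowflake uses for auto-ingest from an external stage. The helper `build_snowpipe_ddl` and the pipe, table, stage, and file format names are hypothetical placeholders, not the ones used in the video.

```python
def build_snowpipe_ddl(pipe: str, table: str, stage: str, file_format: str) -> str:
    """Return a CREATE PIPE statement that wraps a COPY INTO command.

    All object names passed in are assumed to exist already in Snowflake.
    """
    return (
        f"CREATE OR REPLACE PIPE {pipe}\n"
        f"  AUTO_INGEST = TRUE\n"
        f"AS\n"
        f"COPY INTO {table}\n"
        f"FROM @{stage}\n"
        f"FILE_FORMAT = (FORMAT_NAME = '{file_format}');"
    )


# Hypothetical object names, for illustration only.
ddl = build_snowpipe_ddl(
    pipe="redfin_pipe",
    table="redfin_table",
    stage="redfin_s3_stage",
    file_format="redfin_csv_format",
)
print(ddl)
```

With `AUTO_INGEST = TRUE`, the pipe relies on S3 event notifications to learn that a new file has arrived, so the bucket also needs an event notification pointing at the pipe's notification channel.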

Apache Airflow will be used to orchestrate and automate this process.

Apache Airflow is an open-source platform for orchestrating and scheduling workflows of tasks and data pipelines. We will install Apache Airflow on our EC2 instance to orchestrate the pipeline.
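The core idea behind orchestration is running tasks in dependency order. The sketch below illustrates that idea with Python's standard `graphlib` module. It is not Airflow's API, and the task names are hypothetical labels for the pipeline stages described above.

```python
from graphlib import TopologicalSorter

# Each task maps to the set of tasks that must finish before it can start.
# Task names are hypothetical labels for the stages described above.
dependencies = {
    "extract_redfin_data": set(),
    "load_raw_to_s3": {"extract_redfin_data"},
    "transform_data": {"extract_redfin_data"},
    "load_transformed_to_s3": {"transform_data"},
}

# static_order() yields the tasks so that every prerequisite comes first.
run_order = list(TopologicalSorter(dependencies).static_order())
print(run_order)
```

In an Airflow DAG the same relationships are declared between task objects with the `>>` operator, and the scheduler works out the run order and handles scheduling for you.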

Remember, the best way to learn data engineering is by doing data engineering. Get your hands dirty!

If you have any questions or comments, please leave them in the comment section below.

Please don’t forget to LIKE, SHARE, COMMENT and SUBSCRIBE to our channel for more AWESOME videos.


DISCLAIMER: This video and its description contain affiliate links. When you buy through one of these links, we receive a small commission at no cost to you. This helps support us in continuing to make valuable content for you.

