filmov

tv

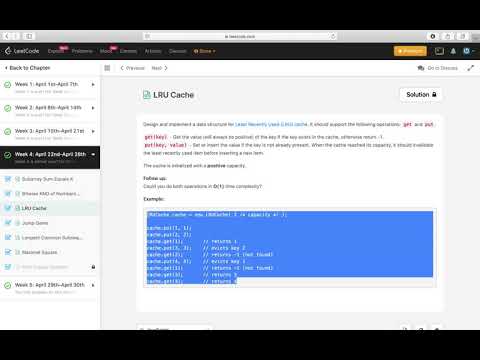

LRU Cache Implementation | Codewise explanation using Queue & Map | Java Code


Solution:

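- We implement an LRU (least recently used) cache using a queue and a map.

- The queue keeps entries in order of use: the most recently used entry is at the front, the least recently used entry is at the back.

- The map gives fast lookup of cached data by key.

- When putting a value into the cache: if the key is already present, we update its value and move it to the front of the queue. If the key is new and the cache is full, we first evict the entry at the back of the queue (the least recently used one), then add the new entry at the front.

- When getting a value, we move the accessed entry to the front of the queue.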
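The steps above can be sketched in Java as follows. This is a minimal sketch, not the code from the video: the class name `QueueMapLruCache`, the int keys and values, and returning -1 for a missing key are assumptions. An `ArrayDeque` plays the role of the queue and a `HashMap` holds the values.

```java
import java.util.ArrayDeque;
import java.util.Deque;
import java.util.HashMap;
import java.util.Map;

// Hypothetical sketch: LRU cache from a queue (Deque) plus a map.
// Assumptions: int keys/values, get() returns -1 for a missing key.
class QueueMapLruCache {
    private final int capacity;
    // Front = most recently used key, back = least recently used key.
    private final Deque<Integer> order = new ArrayDeque<>();
    // Key -> value, for fast lookup.
    private final Map<Integer, Integer> values = new HashMap<>();

    QueueMapLruCache(int capacity) {
        if (capacity <= 0) throw new IllegalArgumentException("capacity must be positive");
        this.capacity = capacity;
    }

    int get(int key) {
        Integer value = values.get(key);
        if (value == null) {
            return -1; // not cached
        }
        moveToFront(key); // accessed entry becomes most recently used
        return value;
    }

    void put(int key, int value) {
        if (values.containsKey(key)) {
            // Already cached: update the value and mark it most recently used.
            values.put(key, value);
            moveToFront(key);
            return;
        }
        if (values.size() == capacity) {
            // Cache full: evict the least recently used key from the back.
            int lru = order.removeLast();
            values.remove(lru);
        }
        order.addFirst(key);
        values.put(key, value);
    }

    // removeFirstOccurrence scans the deque, so this step is O(n).
    private void moveToFront(int key) {
        order.removeFirstOccurrence(key);
        order.addFirst(key);
    }

    public static void main(String[] args) {
        QueueMapLruCache cache = new QueueMapLruCache(2);
        cache.put(1, 1);
        cache.put(2, 2);
        System.out.println(cache.get(1)); // 1, key 1 becomes most recently used
        cache.put(3, 3);                  // evicts key 2
        System.out.println(cache.get(2)); // -1
    }
}
```

Because `moveToFront` relies on `Deque.removeFirstOccurrence`, which searches the deque element by element, this literal queue-and-map version costs O(n) per access rather than O(1).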

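Time Complexity: O(1) per get and put, provided the queue can move an arbitrary entry to the front in O(1) (for example, a doubly linked list whose nodes the map points to). With a standard java.util.Deque, removing a key from the middle is a linear scan, so each operation becomes O(n).

Space Complexity: O(n), where n is the cache capacity.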
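To actually get O(1) per operation, the queue has to support unlinking an arbitrary entry in constant time. One common way (a swapped-in technique, not necessarily the one used in the video) is a hand-written doubly linked list whose nodes the map references directly. A minimal sketch, again assuming int keys and values and -1 for a missing key; `LinkedLruCache`, `unlink` and `addFront` are hypothetical names:

```java
import java.util.HashMap;
import java.util.Map;

// Hypothetical sketch: O(1) LRU cache. A doubly linked list acts as the queue,
// and the map stores each key's node so it can be unlinked without a scan.
class LinkedLruCache {
    private static final class Node {
        final int key;
        int value;
        Node prev, next;
        Node(int key, int value) { this.key = key; this.value = value; }
    }

    private final int capacity;
    private final Map<Integer, Node> nodes = new HashMap<>();
    // Sentinels: head.next is the most recently used node, tail.prev the least.
    private final Node head = new Node(0, 0);
    private final Node tail = new Node(0, 0);

    LinkedLruCache(int capacity) {
        if (capacity <= 0) throw new IllegalArgumentException("capacity must be positive");
        this.capacity = capacity;
        head.next = tail;
        tail.prev = head;
    }

    int get(int key) {
        Node node = nodes.get(key);
        if (node == null) return -1; // not cached
        unlink(node);                // move to front in O(1)
        addFront(node);
        return node.value;
    }

    void put(int key, int value) {
        Node node = nodes.get(key);
        if (node != null) {
            // Already cached: update and mark most recently used.
            node.value = value;
            unlink(node);
            addFront(node);
            return;
        }
        if (nodes.size() == capacity) {
            // Cache full: evict the least recently used node (just before tail).
            Node lru = tail.prev;
            unlink(lru);
            nodes.remove(lru.key);
        }
        node = new Node(key, value);
        addFront(node);
        nodes.put(key, node);
    }

    // O(1): the node knows its neighbours, so no search is needed.
    private void unlink(Node node) {
        node.prev.next = node.next;
        node.next.prev = node.prev;
    }

    // Insert right after the head sentinel (most recently used position).
    private void addFront(Node node) {
        node.prev = head;
        node.next = head.next;
        head.next.prev = node;
        head.next = node;
    }
}
```

The sentinel head and tail nodes avoid null checks when unlinking the first or last real entry. For comparison, Java's `LinkedHashMap` constructed with `accessOrder = true` and an overridden `removeEldestEntry` gives the same LRU behaviour without a hand-written list.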

Watch the video for more details.

CHECK OUT CODING SIMPLIFIED

★☆★ VIEW THE BLOG POST: ★☆★

I started my YouTube channel, Coding Simplified, during Dec of 2015.

Since then, I've published over 400 videos.

★☆★ SUBSCRIBE TO ME ON YOUTUBE: ★☆★

★☆★ Send us mail at: ★☆★

LRU Cache Implementation Explained Step-By-Step

Implement LRU cache


Design and implement methods of LRU Cache

LRU Cache Solution DLL and Map

Master Java's LinkedHashMap with this LRU Cache Implementation - LeetCode 146. LRU Cache

Implement LRU cache with example

HashMap as a Design Choice for LRU Cache

Array as Design Choice for LRU Cache

LRU Cache Algo and Code Explained

Least Recently Used (LRU) Cache

Linked List as Design Choice for LRU Cache

#1 Coding Interview Question: LRU Cache Implementation(Logicmojo.com)

LRU Cache Implementation (Doubly LinkedList, Hashing)

LRU Cache [Easy] - LeetCode Day 24 Challenge

LRU Cache Implementation in Java

LRU Cache - System Design | How LRU works

Ace Algorithms and Programming Interviews in Swift : LRU Cache implementation

LRU cache implementation | How to implement LRU cache in Java

LRU Cache Data Structure - Problem Statement

Daily Coding Problem - Problem 52 (Implement LRU cache)

Design LRU Cache

Implement LRU Cache | Implement LRU Cache using HashMap & Doubly Linked List | Programming Tutor...

Implementation of LRU Cache using javascript
