Scaling Explained Through Kubernetes HPA, VPA, KEDA & Cluster Autoscaler

Discover the secrets behind effectively scaling your applications and servers with this dive into Kubernetes scaling mechanisms. This video demystifies the concepts of Horizontal Pod Autoscaler (HPA), Vertical Pod Autoscaler (VPA), Kubernetes Event-driven Autoscaling (KEDA), and Cluster Autoscaler. Learn when and how to use each tool, understand their differences, and catch a glimpse of real-world scenarios that showcase their capabilities.

#KubernetesScaling #PodAutoscaling #KubernetesTutorial #ClusterAutoscaler

▬▬▬▬▬▬ ⏱ Timecodes ⏱ ▬▬▬▬▬▬

00:00 Scaling Introduction

01:04 Port (Sponsor)

02:08 Scaling Introduction (cont.)

05:38 Vertical Scaling Applications with VerticalPodAutoscaler

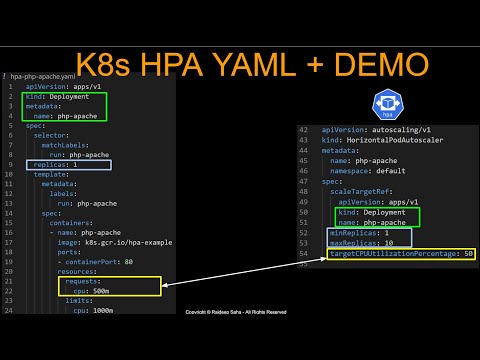

13:58 Horizontal Scaling Applications with HorizontalPodAutoscaler

16:04 Horizontal Scaling Applications with KEDA

18:38 Vertical Scaling Nodes

19:33 Horizontal Scaling Nodes with Cluster Autoscaler

24:25 What to Use and When to Use It

Optimizing Resource Utilization with Horizontal Pod Autoscaling (HPA) in Kubernetes | AKS (0:07:18)

Learn Kubernetes with Google - Intro to Horizontal Pod Autoscaler (HPA) (0:05:31)

Kubernetes Autoscaling: HPA vs. VPA vs. Keda vs. CA vs. Karpenter vs. Fargate (0:14:38)

How Autoscaling Works In Kubernetes (And Beyond)? Kubernetes Tutorial (0:30:55)

Learn Kubernetes with Google: HPA: Scaling using Custom Metrics (0:11:02)

Autoscaling in Kubernetes (0:19:07)

Kubernetes Horizontal Pod Autoscaler (CPU Utilization | Based on Memory | Autoscaling | HPA | EKS) (0:04:35)

Learn Kubernetes with Google - HPA: Scaling by Resource (0:05:40)

Day 17/40 - Kubernetes Autoscaling Explained| HPA Vs VPA (0:25:50)

K8s Horizontal Pod Autoscaler | HPA Manifest File Explained | Pod Requests Limits | HPA Demo (0:22:38)

Horizontal POD Autoscaler setup in Kubernetes | Auto Scaling | (0:14:11)

Vertical and horizontal autoscaling on Kubernetes Engine (0:04:00)

Scaling with confidence - a deep dive into autoscaling in Kubernetes (0:24:34)

Horizontal Pod Autoscaler CUSTOM METRICS & PROMETHEUS: (Kubernetes | EKS | Autoscaling | HPA | K... (0:20:57)

Kubernetes Autoscaling 101 How HPA, VPA and CA work to scale workloads and infrastructure (0:01:57)

Kubernetes Autoscaling 101 (0:07:10)

Kubernetes tutorial | Horizontal Pod Autoscaling | HPA (0:10:42)

Autoscaling and Cost Optimization on Kubernetes: From 0 to 100 - Guy Templeton & Jiaxin Shan (0:35:46)

Autoscaling Kubernetes Deployments: A (Mostly) Practical Guide - Natalie Serrino, New Relic (0:34:54)

Keda with Prometheus: Scaling Your Kubernetes Application with... - David Solanas & Jesus Samiti... (0:17:44)

Event-driven Autoscaling on Kubernetes: Use case 1 - HPA with Kafka (0:11:15)

Kubernetes Horizontal Pod Autoscaler vs Vertical Pod Autoscaler (0:00:57)

Scaling options in Azure Kubernetes Service + Horizontal Pod Autoscaler(HPA) Demo (0:20:31)