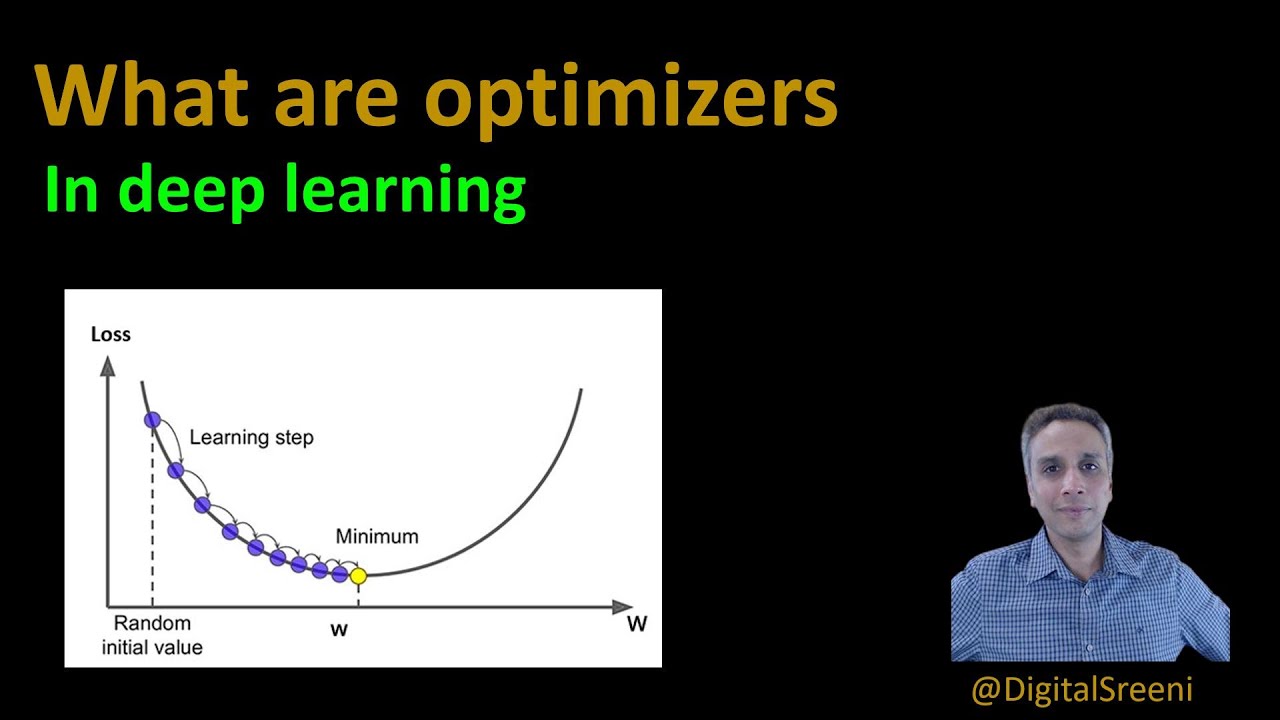

134 - What are Optimizers in deep learning? (Keras & TensorFlow)

AdamW Optimizer Explained #datascience #machinelearning #deeplearning #optimization

Adam Optimizer Explained in Detail | Deep Learning

Deep Learning-All Optimizers In One Video-SGD with Momentum,Adagrad,Adadelta,RMSprop,Adam Optimizers

Adam Optimizer

Basics of Optimizer

How to select the correct optimizer for Neural Networks

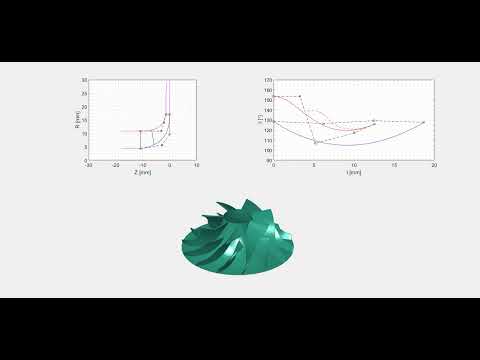

Shape optimization of R134a compressor impeller

Machine Learning Optimizers- How ADAM Optimizer works?

ADAM OPTIMIZER IMPLEMENTATION

Lecture 8.4 - Neural network optimizers

Which Loss Function, Optimizer and LR to Choose for Neural Networks

Day 13 Machine Learning + Neural Networks Live Sessions | Optimizers

Adam Optimizer

3.2. Optimizers

RMSprop Optimizer Explained in Detail | Deep Learning

Optimizer -part 5-Adam Optimizer

PR-134 How Does Batch Normalization Help Optimization?

CS 152 NN—8: Optimizers—Adam

Neural Network - Optimizer and Regularization

Optimizer-part 4 -RmsProp

MAT 134 -- Lecture 16 -- Applied Optimization

How to test different OPTIMIZERs in a Deep Learning model

Lec 10 RMSProp, Adam and other optimizers
