The Only Type of Testing You Need

Hello, everybody. I'm Nick, and in this video I will introduce you to my newest favourite testing technique, snapshot testing, using the NuGet package Verify.
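For readers new to the idea, here is a minimal, library-neutral sketch of what a snapshot test does: serialize a result, store it on the first run, and compare against the stored copy on later runs. It is written in Python purely for illustration and is not the Verify API. The helper name `verify_snapshot` and the `.verified.json` / `.received.json` file names are assumptions made for this sketch, and Verify's actual workflow may differ (for instance, this sketch accepts the first result automatically).

```python
import json
import tempfile
from pathlib import Path


def verify_snapshot(name, value, snapshot_dir):
    """Compare `value` with a stored snapshot; record it on the first run.

    Hypothetical helper for illustration only, not part of any library.
    """
    snapshot_dir = Path(snapshot_dir)
    snapshot_dir.mkdir(parents=True, exist_ok=True)

    # Serialize deterministically so key order does not cause false mismatches.
    received = json.dumps(value, indent=2, sort_keys=True)
    verified_path = snapshot_dir / f"{name}.verified.json"

    if not verified_path.exists():
        # First run: no snapshot yet, so store the current output as the baseline.
        verified_path.write_text(received, encoding="utf-8")
        return "recorded"

    verified = verified_path.read_text(encoding="utf-8")
    if received != verified:
        # Keep the new output next to the baseline so the two can be diffed.
        received_path = snapshot_dir / f"{name}.received.json"
        received_path.write_text(received, encoding="utf-8")
        raise AssertionError(f"Snapshot mismatch for {name!r}")
    return "matched"


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        user = {"name": "Nick", "age": 30}
        print(verify_snapshot("user", user, tmp))  # first run stores the baseline
        print(verify_snapshot("user", user, tmp))  # second run compares against it
```

The appeal of the technique is that one comparison covers the whole serialized object, so a test does not need a separate assertion per property. The trade-off is that the stored snapshot has to be reviewed whenever the output changes intentionally.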

Don't forget to comment, like and subscribe :)

#csharp #dotnet

5 Types of Testing Software Every Developer Needs to Know!

Software Testing Explained in 100 Seconds

Software Testing Tutorial #16 - Types of Software Testing

Software Testing Theory + A Few Less Obvious Testing Techniques

5 Common Software Testing Types Explained in 7 minutes | Software Testing Types With Examples

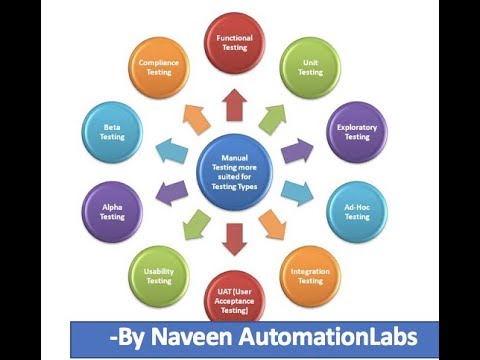

Different Types of Manual Testing || Functional & Non Functional Testing

Software Testing Tutorial #11 - Levels in Software Testing

Types of Testing in Software Engineering | Levels of Testing

Day 5/28 - Terraform Variables - Input vs Output vs Local Variables

Static, Unit, Integration, and End-to-End Tests Explained - Software Testing Series #1

Types of Software Testing | Software Testing Certification Training | Edureka

Software Testing Explained: How QA is Done Today

What are key elements of a software testing strategy? #softwaretesting #testing

Testing out a CurveBall⚽️💥 #football #kickerball

How to get Software Testing job easily | STAD Solution

Zac Hatfield-Dodds - Escape from auto-manual testing with Hypothesis! - PyCon 2019

Beyond Unit Tests: Modern Testing in Angular

Testing Roblox Avatar Hacks!

Everything you should know about Test Cases | Software Testing

Bromine is scary

Key Elements of a Testing Strategy - Software Testing Community Question No. 3

How to Write Test Cases in Manual Testing with Template

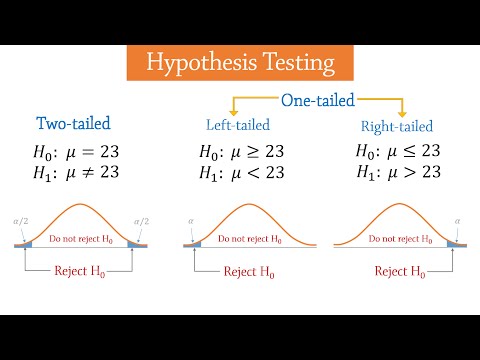

Hypothesis Testing - Introduction

Functional Testing vs Non-Functional Testing | Software Testing Training | Edureka
