Quantization vs Pruning vs Distillation: Optimizing NNs for Inference

Four techniques to optimize the speed of your model's inference process:

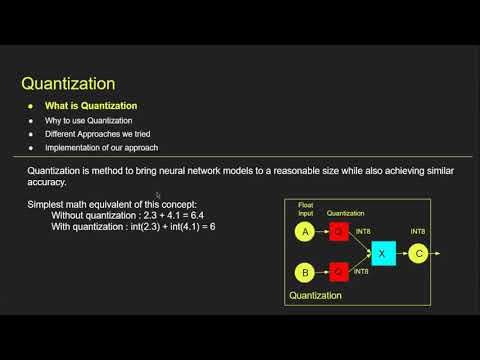

0:38 - Quantization

5:59 - Pruning

9:48 - Knowledge Distillation

13:00 - Engineering Optimizations
