filmov

tv

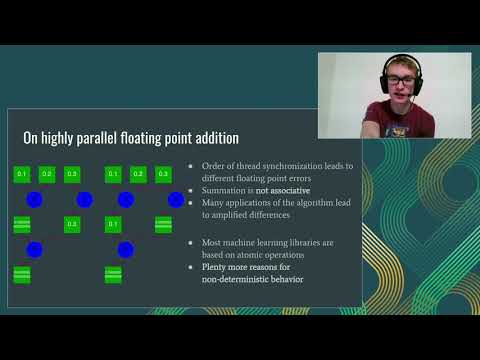

Deep Learning Determinism

Показать описание

This is a presentation was given on March 20, 2019 at GTC in San Jose, California.

Some items covered:

* What non-determinism is in the context of deep learning

* Why it's important to achieve deterministic operation

* The most common sources of non-determinism on GPUs

* An methodology for debugging non-determinism

* A tool for debugging non-determinism in TensorFlow

* Solutions to make frameworks operate deterministically on GPUs

Links from the talk:

The TensorFlow determinism debug tool will be open-sourced at the following URL. Updates to the content of the talk will also be released there. Please watch and/or follow the repository.

Accompanying poster (presented at ScaledML 2019 at the Computer History Museum, Mountain View, CA):

This was my first public tech talk. I wrote about what I learned in preparing for it here:

This video (S9911) and many others from the conference can be viewed (for free) at

Some items covered:

* What non-determinism is in the context of deep learning

* Why it's important to achieve deterministic operation

* The most common sources of non-determinism on GPUs

* An methodology for debugging non-determinism

* A tool for debugging non-determinism in TensorFlow

* Solutions to make frameworks operate deterministically on GPUs

Links from the talk:

The TensorFlow determinism debug tool will be open-sourced at the following URL. Updates to the content of the talk will also be released there. Please watch and/or follow the repository.

Accompanying poster (presented at ScaledML 2019 at the Computer History Museum, Mountain View, CA):

This was my first public tech talk. I wrote about what I learned in preparing for it here:

This video (S9911) and many others from the conference can be viewed (for free) at

Deep Learning Determinism

Slavoj Žižek on determinism

Probabilistic vs. deterministic models explained in under 2 minutes

Legendary AI Researcher Secret to Mastering Machine Learning

2.4) Deterministic vs Stochastic Gradient Descent

AI and Determinism – Are AI systems inherently deterministic?

AI and Determinism – Are AI systems inherently deterministic?

Advice for machine learning beginners | Andrej Karpathy and Lex Fridman

Are Machine Learning Models Deterministic

Deep Learning Reproducibility with TensorFlow

Determinism - Can Newtonian Physics Predict the Future?

James Walker on the importance of determinism | Why Didn't You Test That? #softwarequality

Genius Machine Learning Advice for 10 Minutes Straight

Determinism in the AI Tech Stack (LLMs): Temperature, Seeds, and Tools

AI Question 3: What is Determinism in AI Models? | AWS AI Practitioner Exam

Stochasticity of Deterministic Gradient Descent

Deterministic Machine Learning with MLflow and mlf-core

Pytorch Quick Tip: Reproducible Results and Deterministic Behavior

(Ep8) Free Will vs. Determinism: Are We More Like AI Than We Think?

Can Free Will Coexist with Determinism?

Determinism explained in 1 minute

🧠🤖 Spinoza, AI, and the Illusion of Free Will 🤯 #aipodcast #aidebate #spinoza #freewill #determinism...

A Deterministic Local Interpretable Model-Agnostic Explanations Approach for Computer-Aided Diagnosi

MIT 6.S191 (2019): Deep Generative Modeling

Комментарии

0:37:39

0:37:39

0:00:58

0:00:58

0:01:27

0:01:27

0:00:21

0:00:21

0:03:41

0:03:41

0:00:55

0:00:55

0:00:55

0:00:55

0:05:48

0:05:48

0:00:22

0:00:22

0:11:39

0:11:39

0:10:24

0:10:24

0:00:56

0:00:56

0:09:46

0:09:46

0:22:59

0:22:59

0:04:13

0:04:13

0:54:53

0:54:53

0:29:35

0:29:35

0:03:06

0:03:06

0:00:55

0:00:55

0:00:53

0:00:53

0:00:56

0:00:56

0:01:00

0:01:00

0:40:21

0:40:21

0:43:44

0:43:44