Creating and Training a Generative Adversarial Networks (GAN) in Keras (7.2)


Implement a Generative Adversarial Network (GAN) from scratch in Python using TensorFlow and Keras. The GAN is trained on two Kaggle datasets of human face images so that it can generate new human faces.
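The description does not spell out the network architecture, so the following is only a minimal sketch of how a GAN is commonly put together in TensorFlow and Keras, not the code from the video. The layer sizes, `LATENT_DIM`, `IMG_SIZE`, the optimizer settings, and the helper names (`build_generator`, `build_discriminator`, `train_step`) are all assumptions made for illustration, and a random tensor stands in for a batch of face images.

```python
import tensorflow as tf
from tensorflow.keras import layers, models

# Assumed hyperparameters for this sketch (not taken from the video).
LATENT_DIM = 100   # length of the random seed vector fed to the generator
IMG_SIZE = 32      # small resolution so the example runs quickly
CHANNELS = 3       # RGB


def build_generator():
    """Map a random seed vector to an image with values in [-1, 1]."""
    return models.Sequential([
        layers.Input(shape=(LATENT_DIM,)),
        layers.Dense(8 * 8 * 64),
        layers.LeakyReLU(0.2),
        layers.Reshape((8, 8, 64)),
        layers.Conv2DTranspose(64, 4, strides=2, padding="same"),  # 16x16
        layers.LeakyReLU(0.2),
        layers.Conv2DTranspose(32, 4, strides=2, padding="same"),  # 32x32
        layers.LeakyReLU(0.2),
        layers.Conv2D(CHANNELS, 3, padding="same", activation="tanh"),
    ])


def build_discriminator():
    """Map an image to a single logit: high means 'looks real'."""
    return models.Sequential([
        layers.Input(shape=(IMG_SIZE, IMG_SIZE, CHANNELS)),
        layers.Conv2D(32, 4, strides=2, padding="same"),
        layers.LeakyReLU(0.2),
        layers.Dropout(0.25),
        layers.Conv2D(64, 4, strides=2, padding="same"),
        layers.LeakyReLU(0.2),
        layers.Flatten(),
        layers.Dense(1),
    ])


bce = tf.keras.losses.BinaryCrossentropy(from_logits=True)
generator = build_generator()
discriminator = build_discriminator()
gen_opt = tf.keras.optimizers.Adam(1.5e-4, beta_1=0.5)
disc_opt = tf.keras.optimizers.Adam(1.5e-4, beta_1=0.5)


def train_step(real_images):
    """One adversarial update of both networks on a batch of real images."""
    noise = tf.random.normal([tf.shape(real_images)[0], LATENT_DIM])
    with tf.GradientTape() as gen_tape, tf.GradientTape() as disc_tape:
        fake_images = generator(noise, training=True)
        real_logits = discriminator(real_images, training=True)
        fake_logits = discriminator(fake_images, training=True)
        # Generator wants its fakes to be classified as real (label 1).
        g_loss = bce(tf.ones_like(fake_logits), fake_logits)
        # Discriminator wants real -> 1 and fake -> 0.
        d_loss = (bce(tf.ones_like(real_logits), real_logits)
                  + bce(tf.zeros_like(fake_logits), fake_logits))
    gen_grads = gen_tape.gradient(g_loss, generator.trainable_variables)
    disc_grads = disc_tape.gradient(d_loss, discriminator.trainable_variables)
    gen_opt.apply_gradients(zip(gen_grads, generator.trainable_variables))
    disc_opt.apply_gradients(zip(disc_grads, discriminator.trainable_variables))
    return g_loss, d_loss


# Placeholder batch: random pixels in [-1, 1] standing in for real face images.
placeholder_batch = tf.random.uniform([8, IMG_SIZE, IMG_SIZE, CHANNELS], -1.0, 1.0)
g_loss, d_loss = train_step(placeholder_batch)
```

In an actual training run, `train_step` would be called in a loop over batches of face images scaled to [-1, 1] to match the generator's `tanh` output, and new faces would be sampled with `generator(tf.random.normal([n, LATENT_DIM]), training=False)`.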



Introduction to Generative AI

Introduction to Generative AI

Build a Generative Adversarial Neural Network with Tensorflow and Python | Deep Learning Projects

Generative AI in a Nutshell - how to survive and thrive in the age of AI

What is generative AI and how does it work? – The Turing Lectures with Mirella Lapata

What are GANs (Generative Adversarial Networks)?

Generative AI Full Course – Gemini Pro, OpenAI, Llama, Langchain, Pinecone, Vector Databases & M...

NODES 2024 - Keynote: Tracking the Pulse of Generative AI (APAC)

Introduction to Generative AI

Roadmap to Learn Generative AI(LLM's) In 2024 With Free Videos And Materials- Krish Naik

Generative AI | Jobs with GPT 3, GPT 4, ChatGPT Knowledge and Skills | Career Talk With Anand

How To Build Generative AI Applications

What are Generative AI models?

AI, Machine Learning, Deep Learning and Generative AI Explained

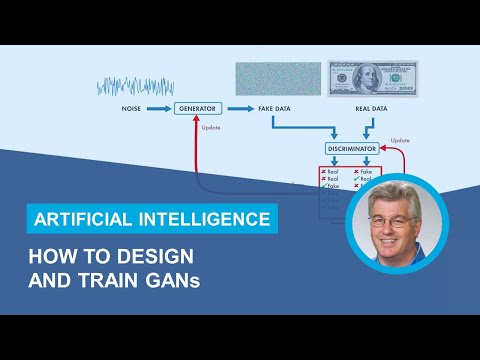

How to Design and Train Generative Adversarial Networks (GANs)

How AIs, like ChatGPT, Learn

Generative AI Fundamentals

Building a neural network FROM SCRATCH (no Tensorflow/Pytorch, just numpy & math)

L-1 Generative AI for Beginners

Integrating Generative AI Models with Amazon Bedrock

Make $660/Day with Free Google Generative AI Certificates

What Are GANs? | Generative Adversarial Networks Tutorial | Deep Learning Tutorial | Simplilearn

Get Hands-on Experience with Generative AI - watsonx AI Prompt Lab
