
PySpark window, ranking (rank, dense_rank, row_number, etc.) and aggregation (sum, min, max) functions


In this video, I illustrate Spark window analytic functions such as rank, dense_rank, and row_number, and aggregate functions such as sum, min, and max, with examples.

The employee dataset is available in the following GitHub repository.
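The three ranking functions differ only in how they number tied rows. The plain-Python sketch below (not Spark code) mimics what row_number, rank and dense_rank return within a single window partition ordered by salary descending; the salary values are hypothetical.

```python
from typing import List, Sequence, Tuple


def ranking_columns(values: Sequence[float]) -> List[Tuple[float, int, int, int]]:
    """Return (value, row_number, rank, dense_rank) for values sorted descending.

    Mimics row_number(), rank() and dense_rank() over one window partition
    ordered by value DESC.
    """
    ordered = sorted(values, reverse=True)
    result = []
    rank = 0
    dense = 0
    prev = None
    for position, value in enumerate(ordered, start=1):
        if value != prev:
            # New value: rank takes the row position (leaving gaps after ties),
            # while dense_rank just moves to the next integer (no gaps).
            rank = position
            dense += 1
            prev = value
        # row_number is always the row position, even for tied values.
        result.append((value, position, rank, dense))
    return result


for row in ranking_columns([9000, 17000, 9000, 24000, 6000]):
    print(row)
```

With the two tied 9000 salaries, row_number gives 3 and 4, rank gives 3 and 3 and then jumps to 5, and dense_rank gives 3 and 3 and then continues with 4.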

The employee schema that I used to read the dataset is as follows:

from pyspark.sql.types import StructType, StructField, IntegerType, StringType, FloatType

# Schema for the employee dataset; every field is nullable (third argument True)
employee_schema = StructType([
    StructField('EMPLOYEE_ID', IntegerType(), True),
    StructField('FIRST_NAME', StringType(), True),
    StructField('LAST_NAME', StringType(), True),
    StructField('EMAIL', StringType(), True),
    StructField('PHONE_NUMBER', StringType(), True),
    StructField('HIRE_DATE', StringType(), True),
    StructField('JOB_ID', StringType(), True),
    StructField('SALARY', FloatType(), True),
    StructField('COMMISSION_PCT', IntegerType(), True),
    StructField('MANAGER_ID', IntegerType(), True),
    StructField('DEPARTMENT_ID', IntegerType(), True),
])


54. row_number(), rank(), dense_rank() functions in PySpark | #pyspark #spark #azuresynapse #azure

PySpark Window, ranking (rank, dense_rank, row_number etc.) and aggregation(sum,min,max) function

Pyspark - Rank vs. Dense Rank vs. Row Number

PySpark Examples - How to use window function row number, rank, dense rank over dataframe- Spark SQL

Pyspark Window Ranking functions - row_number(), rank (), dense_rank()

Spark SQL for Data Engineering 23 : Spark Sql window ranking functions #rank #denserank #sqlwindow

rank dense rank row number in pyspark | window functions in pyspark | databricks | #interview

Quick! What's the difference between RANK, DENSE_RANK, and ROW_NUMBER?

PySpark Tutorial 27: PySpark dense_rank vs rank | PySpark with Python

How to write Windows Ranking functions(Rank, Dense Rank, Row Number, NTile) in Spark SQL DataBricks

Rank() vs Dense_Rank() Window Function() | SQL Interview🎙Questions🙋🙋♀️

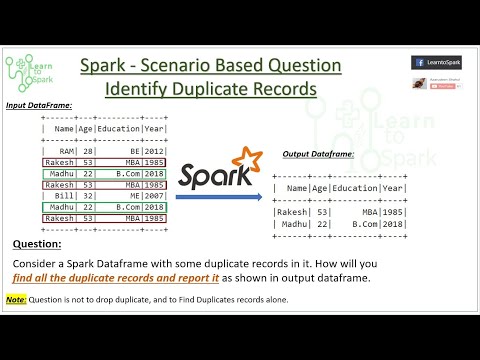

Spark Scenario Based Question | Window - Ranking Function in Spark | Using PySpark | LearntoSpark

window function in pyspark | rank and dense_rank | Lec-15

Spark SQL - Windowing Functions - Ranking using Windowing Functions

How to use Ranking Functions in Apache Spark | RANK | DENSE_RANK | ROW_NUMBER

29. row_number(), rank(), dense_rank() functions in PySpark | Azure Databricks | PySpark Tutorial

6.4 Spark Rank vs Dense Rank | Spark Tutorial Interview Questions

PySpark Window Function : Use Cases and practical Examples.

How to use Dataframe Window operation (Rank,dense_rank and row number) in SPARK.

Spark SQL - Windowing Functions - Ranking Functions

Spark SQL - Windowing Functions - Using LEAD or LAG

How to use Percent_Rank() window function in spark with example

Deference between Row_Numer and Rank and Dense rank | Pyspark tutorials | Azure databricks tutorials

25. Windows function in Pyspark | PySpark Tutorial
