Intro to JAX: Accelerating Machine Learning research

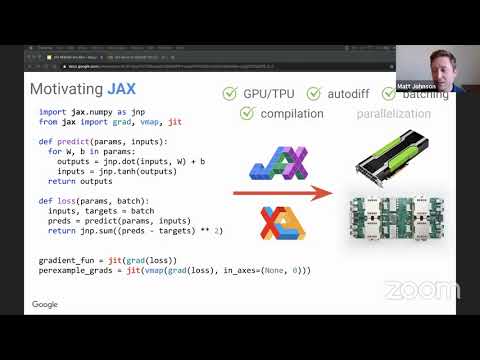

JAX is a Python package that combines a NumPy-like API with a set of powerful composable transformations for automatic differentiation, vectorization, parallelization, and JIT compilation. Your code can run on CPU, GPU or TPU. This talk will get you started accelerating your ML with JAX!
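As a rough illustration of what "composable transformations" means in practice, here is a minimal sketch that chains `jax.grad`, `jax.vmap`, and `jax.jit` on a toy linear-model loss. The function `loss` and the arrays `w`, `xs`, and `ys` are made up for this example and are not taken from the talk.

```python
import jax
import jax.numpy as jnp


def loss(w, x, y):
    # Squared error of a linear model on a single example (illustrative only).
    pred = jnp.dot(x, w)
    return (pred - y) ** 2


# Automatic differentiation: gradient of loss with respect to its first argument, w.
grad_loss = jax.grad(loss)

# Vectorization: apply the per-example gradient across a batch of (x, y) pairs,
# while sharing the same w (in_axes=None) for every example.
batched_grad = jax.vmap(grad_loss, in_axes=(None, 0, 0))

# JIT compilation: compile the composed function with XLA.
fast_batched_grad = jax.jit(batched_grad)

w = jnp.array([1.0, -2.0, 0.5])
xs = jnp.ones((4, 3))   # batch of 4 examples with 3 features each
ys = jnp.zeros(4)

grads = fast_batched_grad(w, xs, ys)  # one gradient per example, shape (4, 3)
mean_grad = grads.mean(axis=0)        # average gradient over the batch
```

The same code should run unchanged on whichever backend JAX finds (CPU, GPU, or TPU). The transformations are ordinary higher-order functions, so they can be nested in other orders as well.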

Resources:

Speaker:

Jake VanderPlas (Software Engineer)

#MLCommunityDay

product: TensorFlow - General; event: ML Community Day 2021; fullname: Jake VanderPlas; re_ty: Publish;

Intro to JAX: Accelerating Machine Learning research (0:10:30)

JAX Crash Course - Accelerating Machine Learning code! (0:26:39)

JAX in 100 Seconds (0:03:24)

Introduction to JAX (0:07:05)

What is JAX? (0:04:15)

JAX: Accelerated Machine Learning Research via Composable Function Transformations in Python (0:13:50)

Machine Learning with JAX - From Zero to Hero | Tutorial #1 (1:17:57)

Who uses JAX? (0:03:31)

JAX: accelerated machine learning research via composable function transformations in Python (1:09:58)

Accelerating Machine Learning using JAX (0:49:16)

Numpy on the GPU? Speeding up Simple Machine Learning Algorithms with JAX (0:09:46)

Introduction to JAX (1:30:33)

JAX: Accelerated Machine Learning Research | SciPy 2020 | VanderPlas (0:23:40)

Introduction to JAX 2023 (0:19:00)

Donal Byrne - Introduction To Jax: the next step in high performance machine learning (0:35:38)

Introduction to JAX for Machine Learning and More (1:08:46)

JAX: accelerated machine learning research via composable function transformations in Python (0:51:59)

Intro to Machine Learning with JAX (1:01:19)

Stanford MLSys Seminar Episode 6: Roy Frostig on JAX (1:06:58)

Magical NumPy with JAX - 00 Introduction (0:07:07)

Intro to Jax/Flax (Taha Bouhsine) (0:53:38)

*Fast* Python and more - Functional languages for machine learning (0:15:02)

EI Seminar - Matthew Johnson - JAX: accelerated ML research via composable function transformations (0:57:06)

Comparing Automatic Differentiation in JAX, TensorFlow and PyTorch #shorts (0:00:38)