filmov

tv

Deep Learning for Tabular Data: A Bag of Tricks | ODSC 2020

Показать описание

Table of Contents

Motivation: 0:15

Impute missing values: 1:37

Prepare categoricals, text, and numerics: 2:49, 3:10, 3:31

Properly validate: 3:54

Establish a benchmark: 5:24

Start with a low capacity network: 6:10

Determine output activation and loss function for classification and regression: 7:17, 8:26

Determine hidden activation: 9:46

Choose batch size: 10:57

Build learning rate schedule: 12:02

Determine number of epochs: 14:35

Track and interpret regression predictions: 15:30

Track metric and/or loss: 16:09

Track and interpret classification predictions: 16:45

Benchmark the network: 17:11

Dealing with discontinuities: 18:16

Tuning the network: 19:31

Handing overfitting vs. underfitting: 20:41

All tricks in one place: 21:35

Stay connected with DataRobot!

Why Deep Neural Networks (DNNs) Underperform Tree-Based Models on Tabular Data

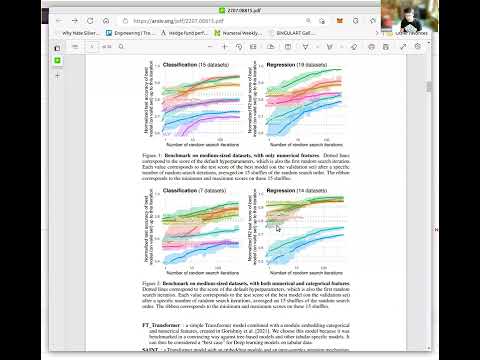

Finally, Deep Learning for Tabular Data: The TabPFN Transformer

Why Do Tree Based-Models Outperform Neural Nets on Tabular Data?

Machine Learning on Tabular Data - First Chapter Summary

Deep Learning for Tabular Data: A Bag of Tricks | ODSC 2020

INTRODUCTION to Deep Learning with tabular data | Lecture 00

Neural Networks on Tabular Data #datascience #machinelearning #neuralnetworks #deeplearning

GANs for Tabular Synthetic Data Generation (7.5)

Batch 6 Sql DBA Advanced Performance Tuning Introduction Session Class 1 || Contact +91 990259140

Deep Learning for Tabular Data – Mr. Yam Peleg

Contrastive Learning for Tabular Data - SCARF

XGBoost outperforms Deep Learning Models for Tabular Data: Paper Summary

Intro to Machine Learning for Tabular Data

#DLDC2020 | Deep Learning Dev Con | Luca Massron - Deep Learning For Tabular Data

Deep Learning with Tabular dataset: Dos and Don'ts

Deep Learning for tabular data - #DevFestVeneto19

Talks # 4: Sebastien Fischman - Pytorch-TabNet: Beating XGBoost on Tabular Data Using Deep Learning

Tabular Data Analysis with Neural Networks: Predictive Modeling Tutorial

Tensor Flow 2.0 - Part 1: Deep Learning and Tabular Data + tf.function

W&B Paper Reading Group: Revisiting Deep Learning Models for Tabular Data

Deep Learning for Tabular Data Innovation Meets Practice, Adi Watzman

[Open DMQA Seminar] Comparison of Machine Learning and Deep Learning for Tabular Datasets

Numerai Quant Club / Why do tree-based models still outperform deep learning on tabular data?

Tabular Learning: skrub and Foundation Models with Gaël Varoquaux, PhD

Комментарии

0:05:37

0:05:37

0:04:46

0:04:46

0:00:58

0:00:58

0:04:08

0:04:08

0:21:45

0:21:45

0:07:27

0:07:27

0:00:26

0:00:26

0:06:48

0:06:48

1:40:16

1:40:16

0:19:09

0:19:09

0:03:36

0:03:36

0:11:32

0:11:32

1:29:30

1:29:30

0:38:11

0:38:11

0:22:36

0:22:36

0:48:21

0:48:21

0:51:59

0:51:59

0:04:50

0:04:50

1:25:51

1:25:51

1:00:50

1:00:50

0:08:08

0:08:08

![[Open DMQA Seminar]](https://i.ytimg.com/vi/9tQqjO5C-jg/hqdefault.jpg) 0:24:59

0:24:59

0:54:51

0:54:51

0:21:44

0:21:44