Normal equation solution of the least-squares problem | Lecture 27 | Matrix Algebra for Engineers

Показать описание

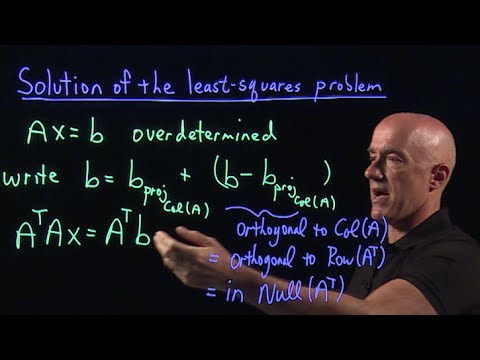

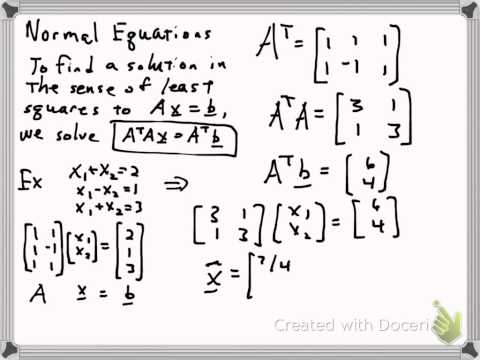

How to solve the least-squares problem using matrices.
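As a minimal sketch of what solving least squares with matrices can look like: for an overdetermined system $Ax = b$, the standard normal equations are $A^{\mathsf T} A\,x = A^{\mathsf T} b$. The notation, the helper names (`transpose`, `matmul`, `solve`, `least_squares`), and the three sample points below are illustrative assumptions and are not taken from the lecture. The example fits a line $y = c_0 + c_1 x$ in plain Python and assumes the columns of $A$ are linearly independent, so that $A^{\mathsf T} A$ is invertible.

```python
def transpose(m):
    """Return the transpose of a matrix stored as a list of rows."""
    return [list(row) for row in zip(*m)]


def matmul(a, b):
    """Multiply two matrices stored as lists of rows."""
    bt = transpose(b)
    return [[sum(x * y for x, y in zip(row, col)) for col in bt] for row in a]


def solve(m, rhs):
    """Solve the square system m x = rhs by Gaussian elimination with partial pivoting."""
    n = len(m)
    aug = [list(m[i]) + [rhs[i]] for i in range(n)]
    for col in range(n):
        # Swap in the row with the largest pivot to limit round-off error.
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        aug[col], aug[pivot] = aug[pivot], aug[col]
        for r in range(col + 1, n):
            factor = aug[r][col] / aug[col][col]
            for c in range(col, n + 1):
                aug[r][c] -= factor * aug[col][c]
    # Back substitution on the upper-triangular system.
    x = [0.0] * n
    for i in reversed(range(n)):
        s = sum(aug[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (aug[i][n] - s) / aug[i][i]
    return x


def least_squares(A, b):
    """Form and solve the normal equations (A^T A) x = A^T b."""
    At = transpose(A)
    AtA = matmul(At, A)
    Atb = [sum(x * y for x, y in zip(row, b)) for row in At]
    return solve(AtA, Atb)


# Hypothetical data: fit y = c0 + c1 * x to the points (1, 1), (2, 3), (3, 2).
# Each row of A is [1, x_i]; b holds the y_i values.
A = [[1.0, 1.0], [1.0, 2.0], [1.0, 3.0]]
b = [1.0, 3.0, 2.0]
c0, c1 = least_squares(A, b)
print(c0, c1)
```

For these points, $A^{\mathsf T} A = \begin{pmatrix} 3 & 6 \\ 6 & 14 \end{pmatrix}$ and $A^{\mathsf T} b = \begin{pmatrix} 6 \\ 13 \end{pmatrix}$, which gives the fitted line $y = 1 + 0.5x$. For larger or ill-conditioned problems, a QR-based solver is usually preferred over forming $A^{\mathsf T} A$ explicitly.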

Normal equation solution of the least-squares problem | Lecture 27 | Matrix Algebra for Engineers (0:15:05)

Normal Equations | Ch. 3, Linear Regression (0:04:35)

4.2.3 Solving the Normal Equations (0:07:16)

CS549 - Linear regression and normal equation solution (1:14:55)

Normal Equation Derivation with Calculus for Least Squares Regression (0:09:54)

Normal Equation Derivation for Regression (0:08:02)

10.4.2 Method of Normal Equations (0:11:28)

Normal Equations (0:24:23)

Pure Math P3 May/June 2024 [Q9] Edexcel IAL WMA 13/01|Normal equation, Exact area of triangle (0:18:15)

CS596 Machine Learning: Linear regression - Minimizing the cost function, normal equation solution (0:13:51)

Lecture 15.04 - Solving the Normal Equation (0:03:25)

15b: The Amazing Normal Equation (0:19:00)

The normal equations (0:09:36)

Regression Normal Equations (0:18:07)

Normal equations for least squares regression (0:12:09)

Lecture 15.03 - Deriving the Normal Equation (0:07:30)

Geometric Interpretation of Least Squares to Derive Normal Equation (0:08:52)

Normal Equation Regression in Machine Learning (0:05:40)

Machine Learning | Linear Regression | Closed Form Solution | Normal Equation (0:08:06)

Linear Regression: Deriving the Normal Equation (0:06:02)

[CPSC 340] The Normal Equations (0:47:38)

Least squares - normal equations (0:04:59)

3-2 Least squares problems and the normal equations (0:18:42)

Least squares - example using normal equations (0:03:43)