
ChatGPT 3.5 Turbo Fine Tuning For Specific Tasks - Tutorial with Synthetic Data


👊 Become a member and get access to GitHub:

Get a FREE 45+ ChatGPT Prompts PDF here:

📧 Join the newsletter:

🌐 My website:

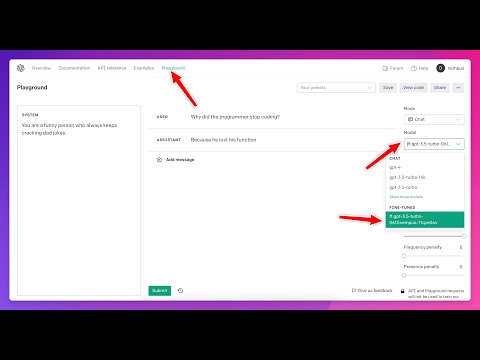

I created a tutorial on how you can fine-tune ChatGPT 3.5 Turbo for a specific task or job with synthetic data. This is a step-by-step guide to fine-tuning OpenAI's ChatGPT 3.5 Turbo with synthetic data.

00:00 ChatGPT 3.5 Turbo Fine Tuning Intro

00:18 When to Fine Tune a model?

01:42 Why do Fine-tuning?

02:42 Today's Task

04:24 Creating Synthetic Data with Python

09:13 Cleaning the Dataset

11:04 Fine Tuning ChatGPT 3.5 Turbo

14:32 Testing The Fine Tuned Model
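For readers who want a rough picture of the steps listed in the chapters (creating data, cleaning it, starting the fine-tune), here is a minimal Python sketch. It is not the code from the video: the helper names (`make_example`, `clean_examples`, `write_jsonl`, `start_fine_tune`) and the cleaning rules are hypothetical, chosen only for illustration. It assumes the chat-style JSONL training format and, for the last step only, the `openai` Python package (v1 or later) with an `OPENAI_API_KEY` set in the environment.

```python
import json


def make_example(system, user, assistant):
    """Build one training example in the chat fine-tuning format (hypothetical helper)."""
    return {
        "messages": [
            {"role": "system", "content": system},
            {"role": "user", "content": user},
            {"role": "assistant", "content": assistant},
        ]
    }


def clean_examples(examples):
    """Drop examples with an empty message and exact duplicates (assumed cleaning rules)."""
    seen, cleaned = set(), []
    for ex in examples:
        contents = [m["content"].strip() for m in ex["messages"]]
        key = json.dumps(ex, sort_keys=True)
        if all(contents) and key not in seen:
            seen.add(key)
            cleaned.append(ex)
    return cleaned


def write_jsonl(examples, path):
    """Write one JSON object per line, as the fine-tuning upload expects."""
    with open(path, "w", encoding="utf-8") as f:
        for ex in examples:
            f.write(json.dumps(ex, ensure_ascii=False) + "\n")


def start_fine_tune(path, model="gpt-3.5-turbo"):
    """Upload the file and start a job. Needs network access and an API key."""
    from openai import OpenAI  # imported here so the helpers above work without it

    client = OpenAI()
    with open(path, "rb") as f:
        uploaded = client.files.create(file=f, purpose="fine-tune")
    return client.fine_tuning.jobs.create(training_file=uploaded.id, model=model)
```

Once a job like this finishes, the resulting fine-tuned model name can be passed as the `model` argument of a normal chat completion request to test it.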


How to Fine-tune a ChatGPT 3.5 Turbo Model - Step by Step Guide

ChatGPT 3.5 Turbo Fine Tuning For Specific Tasks - Tutorial with Synthetic Data

Fine-Tune ChatGPT For Your Exact Use Case

Fine Tuning GPT-3.5-Turbo - Comprehensive Guide with Code Walkthrough

Fine-Tuning GPT-3.5 on Custom Dataset: A Step-by-Step Guide | Code

Fine-Tuning ChatGPT 3.5 with Synthetic Data from GPT-4 | VERY Interesting Results (!)

Fine-tune GPT3.5 Turbo with bank customer service data | With Code!

Fine-tuning Open AI Chat GPT GPT 3.5 Turbo

ChatGPT Fine-Tuning: The Next Big Thing!

Open AI Launch Fine-Tuning For GPT-3.5 API Users 👏

Le FINE TUNING GPT-3.5 (ChatGPT) change TOUT !

How to Fine Tune GPT 3.5 Turbo with OpenAI's API

Fine tuning GPT 3.5 turbo to do highly complex tasks with minimal system message

GPT-3.5: API Guide & Warum FINETUNING so NICHT OKAY ist

GPT3.5 Turbo Fine-tuning + Graphical Interface

Fine Tune GPT-3.5 Turbo Model for 10x results - 2 minute Explanation

Fine-tuning GPT-3.5 Turbo Model using Python and Google Sheets

HUGE ChatGPT Update! Fine-tune GPT 3.5 Model!

ChatGPT Fine-Tuning 🚀 SO EASY, You Won't Believe It! 🤩

Crafting AI Excellence | GPT 3 5 Turbo's Fine Tuning Unveiled!

OpenAI's GPT-3.5 Turbo Fine-Tuning: Boosting AI Performance and Customization

Fine tune GPT 3.5 Turbo in Python. Step by step instructions for entire process

🔥Finally Possible: FINE-TUNING GPT-3.5 Turbo!

Comment fine tune ChatGPT de OpenAI ? Pre entrainer intelligence artificielle Chat GPT 🤖
