Performance Testing JSON in SQL Server vs C#

A case study in why JSON performance in SQL Server is much better than I originally thought.

Performance testing in SQL Server is hard enough. When you start trying to compare SQL Server functions to code in .NET, lots of other factors come into play.

This video examines five JSON performance tests and describes what to consider when performance testing in SQL Server vs other languages.

Elsewhere on the internet:

JSON Usage and Performance in SQL Server 2016 Bert Wagner

One SQL Cheat Code For Amazingly Fast JSON Queries - SQL JSON Index performance

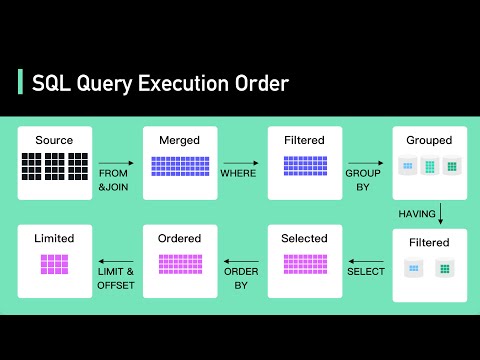

Secret To Optimizing SQL Queries - Understand The SQL Execution Order

How to load JSON file to SQL Server?

Performance Tips for JSON in Oracle Database

XML vs JSON in SQL Server 2016

Convert a JSON file to a SQL Database in 30 seconds

Impact of storing JSON data in MySQL | Partial JSON updates in Binary log | Performance | MySQL 8

How to Work with JSON Data in SQL Server (Simple and Complex JSON)

JSON Data Improvements in MySQL 8.0

Get Better Database Performance. Learn from SQL Server / MongoDB Experts

Using JSON with SQL Server 2016

Store and analyze JSON data using the Oracle API for MongoDB and SQL/JSON I Oracle Database World

Part-5| SDET Essentials| How to Convert Database Results into JSON Files

Part-4| SDET Essentials| How to Convert Database Results into JSON Files

DBAs vs Developers: JSON in SQL Server 2016 with Bert Wagner

SQL for JSON: Querying with Performance for NoSQL Databases & Applications by Arun Gupta

Difference JSON AUTO & PATH In SQL SERVER | Part 12 #sqlserver #dataqueries

Deep dive: Using JSON with SQL Server

Generating Multi-Object JSON Arrays in SQL Server

Using JSON in MySQL to get the best of both worlds (JSON + SQL) by Chaithra Gopalareddy

SQL Server - Working with JSON Data

MySQL vs MongoDB
