filmov

tv

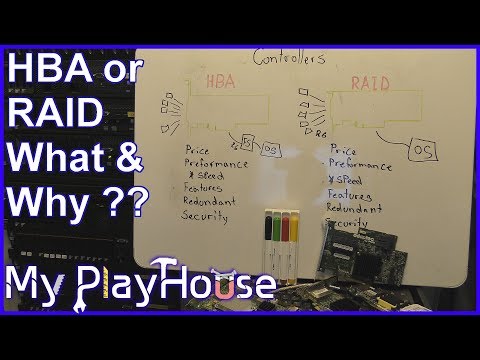

HBA vs. RAID Controller card - 833

A long chat about HBAs vs. RAID controllers, trying to explain how this works. There is always someone way smarter than me in the comments, so be sure to check that out, or be him :-)

[Affiliate Links]

________________________________________________________________

Even just $1 a month comes out to the same as binge-watching like 400+ of my videos every month.

My PlayHouse is a channel where I show what I am working on. I have this house; it is 168 square meters (1808.3 ft²) and it is full of half-finished projects.

I love working with heating, insulation, servers, computers, datacenters, green power, alternative energy, solar, wind and more. It all costs, but I'm trying to get the most out of my money and my time.

RAID vs HBA SAS controllers | What's the difference? Which is better?

HBA disk controller cards vs RAID disk controller cards for your Home needs while saving money

What is a SAS HBA card ?

Hardware RAID controller and HBA with ZFS - 1235

Add a Bunch of Hard Drives to Your PC! (kinda...) | LSI 9207-8i HBA

Comparing HBA IT mode SAS controllers | 2020 Edition

I was wrong... smallest LSI IT mode HBA...

How to drive 120 HDDs with a single 2-port HBA IT mode SAS controller

6 Things you need to know about the LSI 9211-8i (9201-8i) vs Dell PERC H310

Hardware Raid is Dead and is a Bad Idea in 2022

LSI 9207-8i - PCIe 8 Port SATA Controller and cooling solution - Expanding Unraid

Hardware RAID vs. ZFS vs. MDADM: 2025 Performance Showdown

Don't be afRAID (of RAID) | How to access hardware RAID-5 array with HBA and software

ASR-71605 Adaptec PCI-E SAS SATA RAID Controller #ASR71605

LSI 9300-8e H5-25460-00 HBA card sff8644 sas controller Host Bus Adapter

DevOps & SysAdmins: What is the difference between a HBA card and a RAID card? (5 Solutions!!)

Is this the best counterfeit LSI HBA? | Tips to avoid fake LSI SAS controller cards

LSI 9305-16i 05-25703-00 HBA card 12gb/s sff8643 sas controller Host Bus Adapter

LSI 9220-8i (IBM M1015) HBA Controller Card Unboxing

Adding a RAID card to an ASRock Rack server. The Broadcom 9460-16i is a high-performance controller.

Comparing HBA IT mode SAS controllers

HBA on a HPE DL560 G10 and Raid on a HPE DL380 G9 - 1263

2021 Broadcom Storage 9500 HBAs
