Building Database - Creating a Chatbot with Deep Learning, Python, and TensorFlow p.5

Welcome to part 5 of the chatbot with Python and TensorFlow tutorial series. Leading up to this tutorial, we've been working with our data and preparing the logic for how we want to insert it into the database. Now we're ready to start inserting.

How to Create Your First Database

How to Design a Database

Learn How to Create a Database | First Steps in SQL Tutorial

Creating a database

MySQL: How to create a DATABASE

Creating Company Database | SQL | Tutorial 12

The Power of Firebase for Your Web Development! 🚀 #firebase #shorts #firebasedatabase

How To Create a Database in Microsoft Access

How to create customer data in POS #retail #business #billings #excel #stockmarket #exceltips

How to create a database website with PHP and mySQL 01 - Intro

How to Create a Database | SQL Tutorial for Beginners | 2021

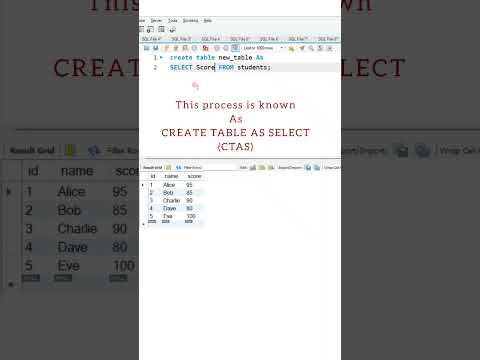

create table as select in MySQL database #shorts #mysql #database

Ms Access Database Development Process Tutorial PT 21 -- Tables, queries, reports and Relationships

SQL Database Design Tutorial for Beginners | Data Analyst Portfolio Project (1/3)

How to create a database front-end in 5 minutes

How to Create a Database Project in Visual Studio

Build a Database Programming Interface!

How to create a new database in XAMPP MySQL | 2021 Complete Guide

3 Simple Ways to Build a Database with Airtable [2024]

How to create a DATA PORTFOLIO that stands out #dataanalyst #portfolio #projects

Create Database and table in Microsoft SQL Server Management Studio #sql #sqlserver #sqlqueries

How to create Database in SQL #sql #code #coding #how_to #tutorial #database

Create a full Log-in page with just ChatGPT

SQL indexing best practices | How to make your database FASTER!
