
Anon Leaks NEW Details About Q* | 'This is AGI'


A new anonymous drop has been released about Q*. Let's review!

Join My Newsletter for Regular AI Updates 👇🏼

Need AI Consulting? ✅

My Links 🔗

Rent a GPU (MassedCompute) 🚀

USE CODE "MatthewBerman" for 50% discount

Media/Sponsorship Inquiries 📈

Links:


Anon Leaks NEW Details About Q* | 'This is AGI'

Anon Leaks NEW Details About Q*

Anon Leaks NEW Details About Q* | 'This is AGI'

Anonymous Leaks New No Mans Sky Update For 2023

Greenwald: Anonymous leaks not evidence in Russian hacking

Avengers Doomsday LEAK & Spiderman 4 NEW RELEASE DATE

Anonymous leak strange hacked FBI call to Scotland Yard

Reddit leak, Anonymous Sudan & Banks *darknet parliament is now a thing? | Weekend Recap

Anonymous CNN Staffers LEAK Details About Pro-Israel Bias

Insane Data Leak

Anonymous Has Leaked Central Bank Of Russia’s Files

NEW FTC COPPA LEAK from Anonymous YouTube Employee?!

Anonymous leaks database of the Russian Ministry of Defence | cybernews.com

Anonymous LEAKS New SEASON 2 MAP.. Is it Fake? (Fortnite)

LOTS OF NEW SYNOLOGY NAS - TOS 5 COMING SOON - ANONYMOUS LEAK 1TB RUSSIAN DATA - EDGE SAVES YOUR RAM

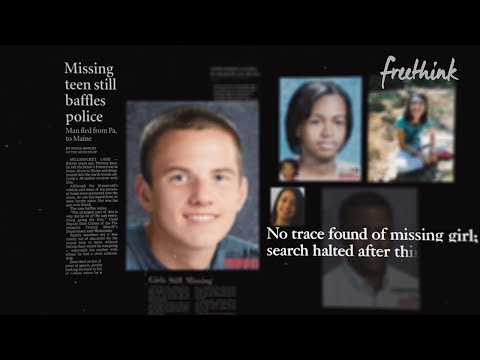

Hackers Find Missing People For Fun

Anonymous Leaks Nestlé Data For Not Pulling Out Of Russia #ukraine

Clix Leaked His Bank Account on Stream!

RUMOR: Pokémon Sword & Shield Direct Leaked 2 Weeks Ago By Anonymous User + Revealed More Detail...

Anonymous leak of welfare records exposed and damaged family, mother says

Paris Attacks | Hacker Group Anonymous Leaks Details Of Suspected ISIS Accounts

Nintendo Switch Online NEW Systems Have LEAKED... (New Details)

uhhhh... new minecraft update leaked... (actually)

Anonymous suspended from Twitter amid threatening leaks
