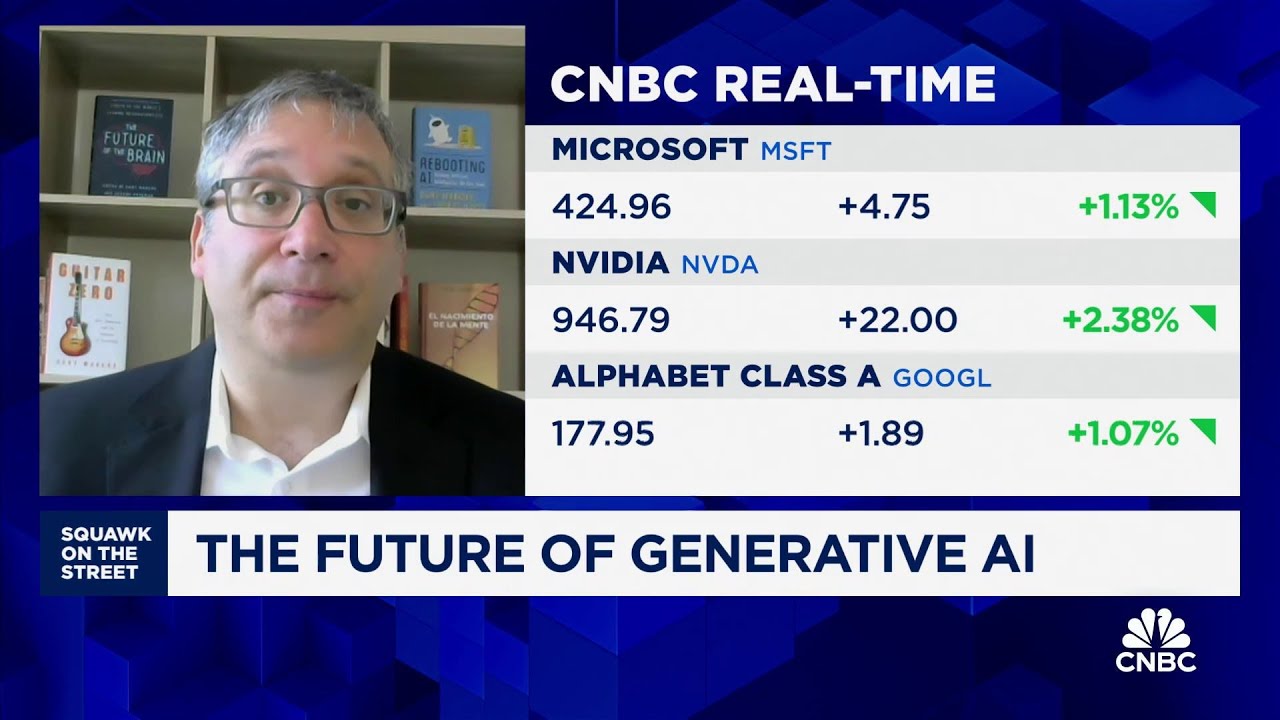

We're 'at least a decade away' from solving AI, says NYU Professor Gary Marcus

Gary Marcus, New York University professor emeritus, joins 'Squawk on the Street' to discuss artificial intelligence implications, the future of generative AI, investor decisions, and more.

Best player from every Decade 🥶🤩

POV: You wake up in a different decade

We Compared Nearly a DECADE of The Last Of Us Graphics...

Craziest Nature Videos of the Decade

The Biggest Song Each Year The Past Decade #shorts #hits #music

15 Years of Journey | Severe Bilateral Deformity in a Brave Kid

We’re in an infrastructure decade #shorts

Keep Reading - A Decade with A Word on Words

Best F1 races from the last decade 😈🤩😏

Why Top Investors are Warning of a 'Lost Decade' for Stocks

This is Football - 2010's Decade Recap

Top 30 Biggest Scientific Discoveries of the Decade

The greatest NBA player in the last DECADE #shorts

Peter Attia on the science and art of longevity

REVEALED: The BIGGEST Spending Clubs Of The Last DECADE! 🤑

10 Companies We Lost In The Last Decade

Every Hour Daughter Survives New Decade

Taylor Swift Accepts Woman of the Decade Award | Women In Music

25 Companies We Lost in the Last Decade

Disneyland a Decade Ago: Rare 2014 Adventures from the Randomland Vault

How each decade influenced AVA MAX’s music! Who did it best? 🤯♻️📅

U.S. obesity rates drop for 1st time in a decade

Syria has affected us deeply over the last decade! #VonderLeyen #Syria #eudebates #refugees #shorts
