filmov

tv

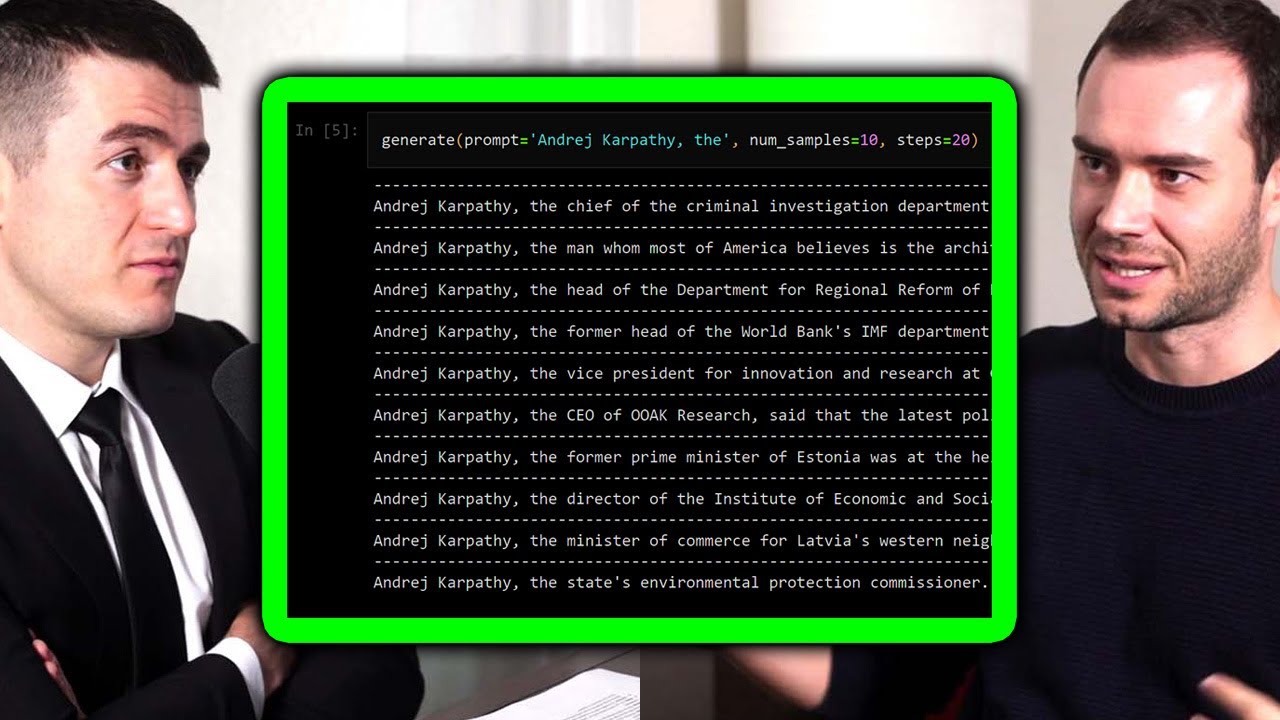

Transformers: The best idea in AI | Andrej Karpathy and Lex Fridman

Please support this podcast by checking out our sponsors:

GUEST BIO:

Andrej Karpathy is a legendary AI researcher, engineer, and educator. He's the former director of AI at Tesla, a founding member of OpenAI, and an educator at Stanford.

PODCAST INFO:

SOCIAL:

Transformers: The best idea in AI | Andrej Karpathy and Lex Fridman (0:08:38)

Illustrated Guide to Transformers Neural Network: A step by step explanation (0:15:01)

Transformers, explained: Understand the model behind GPT, BERT, and T5 (0:09:11)

What are Transformers (Machine Learning Model)? (0:05:50)

But what is a GPT? Visual intro to transformers | Chapter 5, Deep Learning (0:27:14)

Transformers: Age of Extinction (2014) || Lockdown: 'You have no idea.' [4K] (0:01:00)

Mission: Eliminate Optimus Prime #shorts #transformers #optimusprime (0:00:19)

Transformers vehicles names #shorts #transformers #optimusprime (0:00:30)

Transformers: Rescue Bots 🔴 SEASON 4 | FULL Episodes LIVE 24/7 | Transformers Junior (7:59:00)

Knight Optimus was something else #shorts #transformers #optimusprime (0:00:20)

Marvel and DC CGI vs Transformers CGI #transformers #edits #marvel #dc (0:00:23)

Megatron🔥 Attitude WhatsApp Status 🔥#shorts #transformers #megatron (0:00:36)

Top 5 ELECTRONICS PROJECTS Using TRANSFORMERS (0:13:25)

Optimus Prime quotes are the best #shorts #transformers #optimusprime (0:00:50)

Transformers Age of Extinction (Blu Ray) Edition - Lockdown (0:03:20)

Transformers in NLP | GeeksforGeeks (0:28:45)

Optimus Prime vs Grimlock - 'Let Me Lead You' Scene | Transformers Age of Extinction (2014... (0:05:33)

I Can Now! | Transformers G1 | 40th Anniversary (0:00:18)

Sqweeks Smart Move! #edformers #shorts #transformers (0:00:18)

Why LOCKDOWN'S THEME SOUNDS SO GOOD 🎧 #optimusprime #Transformers #AgeOfExtinction #lockdown (0:00:36)

Transformers : Age of Extinction - Lockdown and Attinger Scene (1080pHD VO) (0:01:18)

Live -Transformers Indepth Architecture Understanding- Attention Is All You Need (1:19:24)

DIY Transformers Car Costume for Halloween #halloween #chainsawman #fnaf #halloweencostume #diy (0:01:01)

Transformers | Basics of Transformers (0:00:18)