Multi-Class Text Classification with Cansen Çağlayan: Using Bert, Conv1D, Transformer Encoder Block

In Part A, we discussed and explained the steps needed for text data analysis and text preprocessing in multi-class text classification. We preprocessed the text with the Keras TextVectorization layer. Preprocessing converts raw text into a numeric form that can be fed to the classification algorithm.
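To make the preprocessing step concrete, here is a minimal pure-Python sketch of what a text vectorization step does: standardize, split on whitespace, build a vocabulary, and map each text to a fixed-length list of integers. It is not the Keras layer itself. The function names, the reserved indices (0 for padding, 1 for unknown tokens), and the tiny corpus are all assumptions made for illustration.

```python
import string
from collections import Counter

PAD, OOV = 0, 1  # assumed reserved indices: 0 = padding, 1 = out-of-vocabulary


def standardize(text):
    """Lowercase the text and strip ASCII punctuation."""
    return text.lower().translate(str.maketrans("", "", string.punctuation))


def build_vocab(texts, max_tokens=None):
    """Map each token to an integer index, most frequent tokens first."""
    counts = Counter(tok for t in texts for tok in standardize(t).split())
    # Sort by descending frequency, then alphabetically, for a stable order.
    ordered = sorted(counts, key=lambda tok: (-counts[tok], tok))
    if max_tokens is not None:
        ordered = ordered[: max(max_tokens - 2, 0)]  # leave room for PAD and OOV
    return {tok: i + 2 for i, tok in enumerate(ordered)}


def vectorize(text, vocab, seq_len):
    """Turn one string into a fixed-length list of token indices."""
    ids = [vocab.get(tok, OOV) for tok in standardize(text).split()]
    ids = ids[:seq_len]                        # truncate long inputs
    return ids + [PAD] * (seq_len - len(ids))  # pad short inputs


# Hypothetical three-review corpus.
corpus = ["The movie was great!", "The plot was thin.", "Great acting, great cast"]
vocab = build_vocab(corpus)
print(vectorize("The cast was GREAT, honestly", vocab, seq_len=6))
# → [3, 6, 4, 2, 1, 0]
```

The word "honestly" never appears in the corpus, so it maps to the unknown-token index, and the final 0 is padding up to the requested length.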

In Part B, we first designed a custom model based on the GPT-3 approach. Then we used a pre-trained BERT model to classify multi-topic text reviews.

Natural language processing (NLP) is everywhere and is one of the most widely used techniques in the business world. Whether the goal is to predict the sentiment of a sentence, sort emails, or flag a toxic comment, all of these scenarios rely on a core NLP task called text classification. In the cases above, there are at most two or three target classes. Can we go beyond that? Can we build a multi-class text classification model with, say, more than five classes? Yes, we can. In this research meeting, Cansen Çağlayan and I discuss how to design deep learning models based on the Transformer encoder block and Conv1D, and how to apply transfer learning with a BERT Transformer as a multi-topic (multi-class) text classifier. The session is a step-by-step tutorial on multi-class text classification using BERT and TensorFlow, from data loading to prediction, and serves as a guide to building an end-to-end multi-class text classification model.

Topics: multi-class text classification with deep learning using BERT, natural language processing (NLP), and Hugging Face. We implement BERT with the Simple Transformers library.

A 1D convolution layer (e.g. temporal convolution) creates a convolution kernel that is convolved with the layer input over a single spatial (or temporal) dimension to produce a tensor of outputs.
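The sliding-window computation described above can be sketched in plain Python. This is an illustrative version with stride 1 and no padding, not the Keras implementation. The function name, the shapes, and the toy values are assumptions. As in most deep learning libraries, the kernel is not flipped, so strictly speaking this is a cross-correlation.

```python
def conv1d(inputs, kernel, bias=None):
    """1D convolution with stride 1 and no padding ("valid").

    inputs: nested list of shape (steps, in_channels)
    kernel: nested list of shape (kernel_size, in_channels, filters)
    returns: nested list of shape (steps - kernel_size + 1, filters)
    """
    steps, in_ch = len(inputs), len(inputs[0])
    k_size, filters = len(kernel), len(kernel[0][0])
    bias = bias or [0.0] * filters
    out = []
    for start in range(steps - k_size + 1):  # slide the window along the sequence
        row = []
        for f in range(filters):
            acc = bias[f]
            for k in range(k_size):
                for c in range(in_ch):
                    acc += inputs[start + k][c] * kernel[k][c][f]
            row.append(acc)
        out.append(row)
    return out


# A sequence of 5 tokens, each a 2-dimensional embedding (made-up values).
sequence = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [2.0, 0.0], [0.0, 2.0]]
# One filter spanning 3 tokens with all weights set to 1: it sums each window.
kernel = [[[1.0], [1.0]] for _ in range(3)]
features = conv1d(sequence, kernel)
print(features)
# → [[4.0], [5.0], [6.0]]
```

Five input steps with a kernel of size 3 give three output steps, one per window position. In a text model, each output row is a feature computed from three neighbouring token embeddings.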

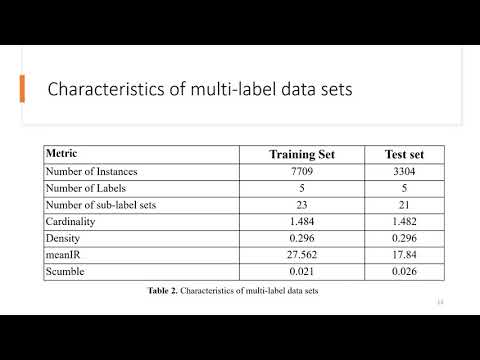

The transformer-based encoder-decoder model was introduced by Vaswani et al. in the well-known paper "Attention Is All You Need" and is today the de facto standard encoder-decoder architecture in natural language processing (NLP). Three parts are covered: the encoder-decoder model as a whole and how it is used for inference, the encoder in detail, and the decoder in detail.

In machine learning, multiclass or multinomial classification is the problem of classifying instances into one of three or more classes (classifying instances into one of two classes is called binary classification). Multiclass classification should not be confused with multi-label classification, where multiple labels are to be predicted for each instance.
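The difference between multiclass and multi-label prediction shows up in how a model's raw output scores are turned into labels. The sketch below assumes the common choice of softmax plus argmax for multiclass and an independent sigmoid with a 0.5 threshold for multi-label. The topic names and scores are invented for illustration.

```python
import math


def softmax(logits):
    """Convert raw scores to probabilities that sum to 1."""
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def sigmoid(x):
    """Squash one raw score into an independent probability in (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))


def predict_multiclass(logits, labels):
    """Exactly one label: the class with the highest softmax probability."""
    probs = softmax(logits)
    best = max(range(len(probs)), key=probs.__getitem__)
    return labels[best]


def predict_multilabel(logits, labels, threshold=0.5):
    """Zero or more labels: every class whose own probability passes the threshold."""
    return [lab for lab, x in zip(labels, logits) if sigmoid(x) >= threshold]


# Hypothetical six-topic problem with made-up model outputs.
topics = ["sports", "politics", "tech", "health", "travel", "food"]
scores = [0.2, 1.5, 2.1, -0.7, -0.1, -1.3]

print(predict_multiclass(scores, topics))   # → tech
print(predict_multilabel(scores, topics))   # → ['sports', 'politics', 'tech']
```

With the same scores, the multiclass rule commits to a single topic, while the multi-label rule can return several topics or none.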
