API For Open-Source Models 🔥 Easily Build With ANY Open-Source LLM

In this video, we review OpenLLM, and I show you how to install and use it. OpenLLM makes building on top of open-source models (Llama, Vicuna, Falcon, OPT, etc.) as easy as building on top of ChatGPT's API. This lets developers create incredible apps on top of open-source LLMs, with first-class support for tools (LangChain, Hugging Face Agents, BentoML) and one-click deployment. Fine-tuning is also coming soon!

Enjoy :)

Join My Newsletter for Regular AI Updates 👇🏼

Need AI Consulting? ✅

Rent a GPU (MassedCompute) 🚀

USE CODE "MatthewBerman" for 50% discount

My Links 🔗

Media/Sponsorship Inquiries 📈

Links:

This new AI is powerful and uncensored… Let’s run it

$0 Embeddings (OpenAI vs. free & open source)

Should You Use Open Source Large Language Models?

Hugging Face + Langchain in 5 mins | Access 200k+ FREE AI models for your AI apps

Open Source AI Inference API w/ Together

Insanely Fast LLAMA-3 on Groq Playground and API for FREE

Deploy LLM App as API Using Langserve Langchain

Image Generation Using Flux AI Models

Build Anything With ChatGPT API, Here’s How

Combine MULTIPLE LLMs to build an AI API! (super simple!!!) Langflow | LangChain | Groq | OpenAI

Building a Text Generation API with Open Source LLMs: Easy Step-by-Step Guide

Using ChatGPT with YOUR OWN Data. This is magical. (LangChain OpenAI API)

250 Unique APIs for your next Project

Build API for Open Source LLM's 🚀 🔥

How to Run Open-Source AI Models Locally | LM Studio Tutorial

API Modeling with OpenTravel 2.0

3-Langchain Series-Production Grade Deployment LLM As API With Langchain And FastAPI

How to Use the Open-Source Hugging Chat API in Python

End To End Document Q&A RAG App With Gemma And Groq API

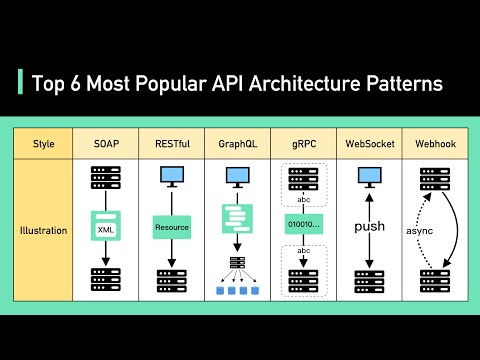

Top 6 Most Popular API Architecture Styles

LibreTranslate - Free Open Source Machine Translation API - PART 2

LangChain + HuggingFace's Inference API (no OpenAI credits required!)

How To Deploy Machine Learning Models Using FastAPI-Deployment Of ML Models As API’s
