A Gentle Introduction To Math Behind Neural Networks and Deep Learning (nested composite function)


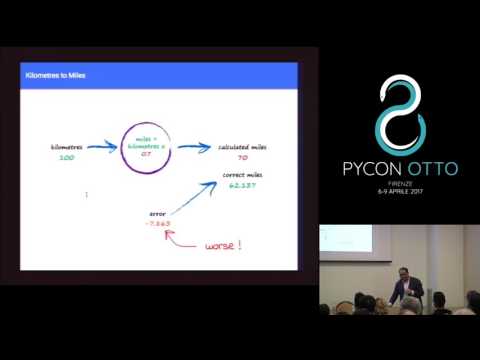

In this video, we explain the basic mathematics behind neural networks and deep learning through a simple classification example. We start by defining a threshold logic unit (TLU), also known as a neuron. We then discuss the concept of a weighted sum, or linear combination, of the inputs, followed by a nonlinear activation function such as sigmoid or ReLU. Next, we put a few units together to show how their relationships can be expressed as matrix/vector multiplications. The main idea is that each layer extracts meaningful features from its input, which lets us build better classification models. Stacking layers in this way means that neural networks and deep learning models are represented by a nested, or composite, function.
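The description above can be made concrete with a small sketch. The code below is not taken from the video: the function names, the two-layer shape, and every weight value are hypothetical, chosen only so the arithmetic is easy to follow by hand. It shows a single unit (weighted sum plus activation), a layer written as a matrix/vector product, and the nested composition of two layers.

```python
import numpy as np

def sigmoid(z):
    """Squash any real value into the interval (0, 1)."""
    return 1.0 / (1.0 + np.exp(-z))

def relu(z):
    """Keep positive values and clip negative ones to zero."""
    return np.maximum(0.0, z)

def unit(x, w, b, activation):
    """One neuron: weighted sum w . x + b, then a nonlinear activation."""
    return activation(np.dot(w, x) + b)

def layer(x, W, b, activation):
    """Several neurons at once: each row of W holds one neuron's weights."""
    return activation(W @ x + b)

def network(x, W1, b1, W2, b2):
    """Nested (composite) function: sigmoid(W2 @ relu(W1 @ x + b1) + b2)."""
    hidden = layer(x, W1, b1, relu)         # extracted features
    return layer(hidden, W2, b2, sigmoid)   # classification score

# Hypothetical input and weights, for illustration only.
x = np.array([1.0, 2.0])
W1 = np.array([[1.0, -1.0],
               [0.5, 0.5]])
b1 = np.array([0.0, -1.0])
W2 = np.array([[1.0, -1.0]])
b2 = np.array([0.5])

hidden = layer(x, W1, b1, relu)   # relu([-1.0, 0.5]) = [0.0, 0.5]
y = network(x, W1, b1, W2, b2)    # sigmoid(-0.5 + 0.5) = sigmoid(0) = 0.5
print(hidden, y)
```

With these made-up numbers the hidden layer outputs `[0.0, 0.5]` and the network's score is `0.5`, exactly on the usual decision boundary for a sigmoid output. Writing `network` as one function calling another is the point: a deeper model just adds more nested `layer` calls.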

#neuralnetworks #deeplearning #mathematics

