
ExtraTrees vs RandomForest in Predicting Boston House Price


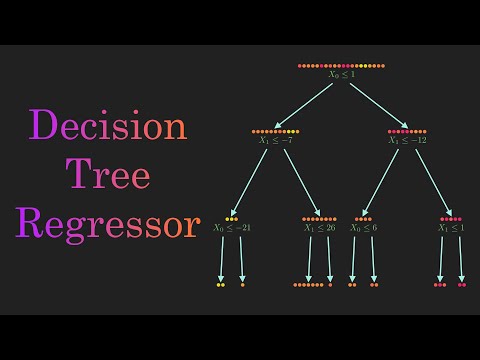

ExtraTrees (Extremely Randomized Trees) and Random Forest are two popular ensemble learning algorithms for regression and classification. Both are built from decision trees. A decision tree makes predictions by breaking a problem down into a series of yes/no questions, for example "Does the house have more than 6 rooms?". In this article, we compare ExtraTrees and Random Forest on predicting house prices in the Boston area, using the Boston Housing dataset in Python.

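The Boston Housing dataset is a well-known dataset of housing prices in the Boston area. It contains 506 observations (census tracts) described by 13 features. Examples include the average number of rooms per dwelling (RM), the per-capita crime rate (CRIM), and the proportion of owner-occupied units built before 1940 (AGE). The target is the median home value (MEDV) in thousands of dollars. We will use this dataset to train and test our ExtraTrees and Random Forest models.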
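The following is a minimal sketch of the train-and-test workflow, using scikit-learn's `ExtraTreesRegressor` and `RandomForestRegressor`. `sklearn.datasets.load_boston` was deprecated in scikit-learn 1.0 and removed in 1.2. For that reason, this sketch does not assume a bundled copy of the data. Instead, it uses `make_regression` to generate a synthetic stand-in with the same shape (506 rows, 13 features); swap in the real features and MEDV target if you have them. The 80/20 split, 100 trees, and random seeds are arbitrary illustrative choices, not settings taken from the video. The printed errors describe the synthetic data, not Boston.

```python
import numpy as np
from sklearn.datasets import make_regression
from sklearn.ensemble import ExtraTreesRegressor, RandomForestRegressor
from sklearn.metrics import mean_squared_error
from sklearn.model_selection import train_test_split

# Placeholder data: a linear target plus Gaussian noise, shaped like Boston
# (506 rows, 13 features). Replace X, y with the real features and MEDV.
X, y = make_regression(n_samples=506, n_features=13, noise=10.0, random_state=0)

# Hold out 20% of the rows so both models are scored on unseen data.
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.2, random_state=42
)

models = {
    "ExtraTrees": ExtraTreesRegressor(n_estimators=100, random_state=42),
    "RandomForest": RandomForestRegressor(n_estimators=100, random_state=42),
}

results = {}
for name, model in models.items():
    model.fit(X_train, y_train)
    y_pred = model.predict(X_test)
    mse = mean_squared_error(y_test, y_pred)
    rmse = np.sqrt(mse)  # RMSE is the square root of MSE
    results[name] = (mse, rmse)
    print(f"{name:>12}: MSE = {mse:.2f}, RMSE = {rmse:.2f}")
```

Both models are fit on the same split with the same seed, so any difference in MSE/RMSE comes from the algorithms rather than the data partition. Comparing them over several seeds or with cross-validation would give a more reliable picture.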

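ExtraTrees is a close relative of Random Forest. It also builds an ensemble of decision trees and, for regression, averages their predictions to make a final prediction. Like Random Forest, it can restrict each split to a random subset of candidate features (the `max_features` parameter in scikit-learn). What makes it "extra" random is how it chooses the split. Rather than searching for the best threshold for each candidate feature, it draws a threshold at random and then keeps the best of these random splits. By default in scikit-learn, it also trains each tree on the full training set rather than on a bootstrap sample. The added randomness reduces variance (overfitting) and makes training faster, at the cost of a slight increase in bias.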
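The following is a minimal NumPy sketch of how a single ExtraTrees node might choose its split for regression. It assumes the sum of squared errors around the mean as the impurity measure. The helpers `sse` and `extra_trees_split` are hypothetical names for illustration only. scikit-learn's actual implementation (for example `ExtraTreeRegressor`, whose default is `splitter="random"`) is written in Cython and handles many more details.

```python
import numpy as np

def sse(y):
    """Sum of squared errors around the mean: the regression impurity."""
    return float(((y - y.mean()) ** 2).sum()) if y.size else 0.0

def extra_trees_split(X, y, max_features, rng):
    """Choose a split the ExtraTrees way: one random threshold per candidate feature."""
    candidates = rng.choice(X.shape[1], size=max_features, replace=False)
    best = None  # (impurity after split, feature index, threshold)
    for j in candidates:
        lo, hi = X[:, j].min(), X[:, j].max()
        if lo == hi:
            continue  # constant feature at this node: it cannot be split
        threshold = rng.uniform(lo, hi)  # random cut-point, no search
        left = X[:, j] <= threshold
        score = sse(y[left]) + sse(y[~left])
        if best is None or score < best[0]:
            best = (score, int(j), float(threshold))
    return best

rng = np.random.default_rng(0)
X = rng.normal(size=(100, 5))
y = 3 * X[:, 0] + rng.normal(scale=0.1, size=100)
split = extra_trees_split(X, y, max_features=3, rng=rng)
print(split)
```

Only one threshold is evaluated per feature, so a node costs a single pass over the data per candidate feature. This is where the speed advantage of ExtraTrees comes from.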

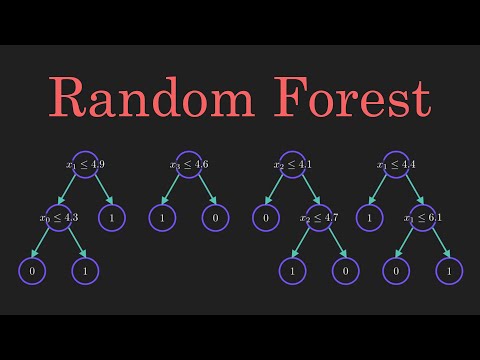

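Random Forest also builds many decision trees, but each tree is trained on a bootstrap sample of the training data (a random sample drawn with replacement). At each node, it considers a random subset of the features. For each of them, it searches for the threshold that gives the best split according to an impurity criterion. For classification, that criterion is information gain (entropy) or Gini impurity; for regression, it is the reduction in squared error (variance). These optimized splits make the individual trees more accurate. Bootstrapping and feature subsampling keep the trees diverse, so averaging them reduces variance.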
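For contrast, here is a sketch of the Random Forest procedure under the same squared-error assumption. It draws a bootstrap sample once for the tree. Then, at a node, it tries every midpoint between consecutive distinct values of each candidate feature and keeps the split with the lowest impurity. `bootstrap_sample` and `random_forest_split` are hypothetical helper names. Real implementations (such as scikit-learn's `DecisionTreeRegressor`, whose default is `splitter="best"`) use a sorted scan rather than this quadratic loop.

```python
import numpy as np

def sse(y):
    """Sum of squared errors around the mean: the regression impurity."""
    return float(((y - y.mean()) ** 2).sum()) if y.size else 0.0

def bootstrap_sample(X, y, rng):
    """Draw len(y) rows with replacement (done once per tree)."""
    idx = rng.integers(0, len(y), size=len(y))
    return X[idx], y[idx]

def random_forest_split(X, y, max_features, rng):
    """Choose a split the Random Forest way: exhaustive threshold search."""
    candidates = rng.choice(X.shape[1], size=max_features, replace=False)
    best = None  # (impurity after split, feature index, threshold)
    for j in candidates:
        values = np.unique(X[:, j])  # sorted distinct values
        # Candidate thresholds: midpoints between consecutive distinct values.
        for threshold in (values[:-1] + values[1:]) / 2:
            left = X[:, j] <= threshold
            score = sse(y[left]) + sse(y[~left])
            if best is None or score < best[0]:
                best = (score, int(j), float(threshold))
    return best

rng = np.random.default_rng(0)
X = rng.normal(size=(100, 5))
y = 3 * X[:, 0] + rng.normal(scale=0.1, size=100)
Xb, yb = bootstrap_sample(X, y, rng)
split = random_forest_split(Xb, yb, max_features=5, rng=rng)
print(split)  # expect feature 0, the only one y depends on
```

The exhaustive search finds the strongest split for each candidate feature. Here it reliably picks feature 0, while the ExtraTrees version leaves the threshold to chance.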

When comparing ExtraTrees and Random Forest, we can evaluate their performance on the Boston Housing dataset with metrics such as mean squared error (MSE) and root mean squared error (RMSE). MSE is the average of the squared differences between the predicted and actual values. RMSE is the square root of MSE, which puts the error back in the units of the target (thousands of dollars for the Boston data). Lower values indicate a better fit. For an honest estimate, compute them on test data that the models did not see during training.
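Both metrics take only a few lines of NumPy: MSE = (1/n) Σ (yᵢ − ŷᵢ)² and RMSE = √MSE. The sketch below uses made-up prices in thousands of dollars, not values from the Boston data, chosen so the arithmetic can be checked by hand. The errors are −2, 4, −4 and 0, so MSE = (4 + 16 + 16 + 0) / 4 = 9 and RMSE = 3.

```python
import numpy as np

def mse(y_true, y_pred):
    """Mean of the squared differences between actual and predicted values."""
    y_true = np.asarray(y_true, dtype=float)
    y_pred = np.asarray(y_pred, dtype=float)
    return float(np.mean((y_true - y_pred) ** 2))

def rmse(y_true, y_pred):
    """Square root of MSE, in the same units as the target."""
    return float(np.sqrt(mse(y_true, y_pred)))

# Hypothetical prices in $1000s (illustrative, not Boston data).
y_true = [20.0, 25.0, 30.0, 35.0]
y_pred = [22.0, 21.0, 34.0, 35.0]
print(mse(y_true, y_pred), rmse(y_true, y_pred))  # 9.0 3.0
```

Squaring weights large errors more heavily, so one badly mispriced house raises MSE and RMSE more than several small misses would.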

In conclusion, ExtraTrees and Random Forest are both powerful ensemble algorithms for regression and classification, and both combine the predictions of many decision trees. The main difference lies in how each tree is built. Random Forest trains each tree on a bootstrap sample and searches for the best split threshold among a random subset of features. ExtraTrees, by default, uses the whole training set and picks split thresholds at random. Both algorithms can produce good results. ExtraTrees is usually faster to train and can reduce variance further, but which one is more accurate depends on the specific dataset. To decide between them, compare their MSE and RMSE on held-out data.

#DataScienceBootcamp #datascience #machinelearning #Bagging #ExtraTrees #RandomForest #PythonCoding

