Deep Learning Tutorial with Python | Machine Learning with Neural Networks [Top Udemy Instructor]

In this video, "Deep Learning Tutorial with Python | Machine Learning with Neural Networks Explained", Udemy instructor Frank Kane helps demystify the world of deep learning and artificial neural networks with Python!

In less than 3 hours, you can understand the theory behind modern artificial intelligence, and apply it with several hands-on examples. This is machine learning on steroids! Find out why everyone’s so excited about it and how it really works – and what modern AI can and cannot really do.

In this course, we will cover:

• Deep Learning Prerequisites (gradient descent, autodiff, softmax)

• The History of Artificial Neural Networks

• Deep Learning in the TensorFlow Playground

• Deep Learning Details

• Introducing TensorFlow

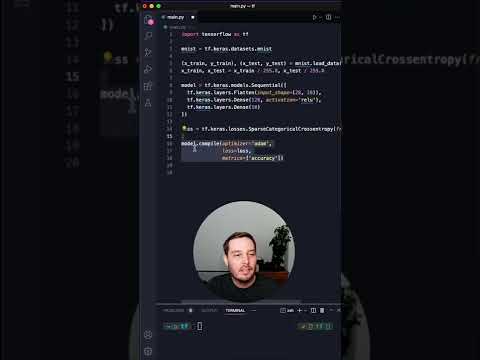

• Using TensorFlow

• Introducing Keras

• Using Keras to Predict Political Parties

• Convolutional Neural Networks (CNNs)

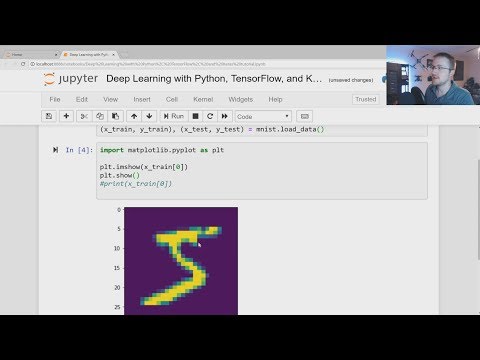

• Using CNNs for Handwriting Recognition

• Recurrent Neural Networks (RNNs)

• Using an RNN for Sentiment Analysis

• The Ethics of Deep Learning

• Learning More about Deep Learning

At the end, you will take on a final challenge: build your own deep learning system to predict whether real mammogram results are benign or malignant, using an artificial neural network you have learned to code from scratch in Python.

Separate the reality of modern AI from the hype – by learning about deep learning, well, deeply. You will need some familiarity with Python and linear algebra to follow along, but if you have that experience, you will find that neural networks are not as complicated as they sound. And how they actually work is quite elegant!

This is a hands-on tutorial with real code you can download, study, and run yourself.

#Udemy

#ITeachOnUdemy

Share your story with #BeAble
