PySpark Interview Questions | Azure Data Engineer #azuredataengineer #databricks #pyspark

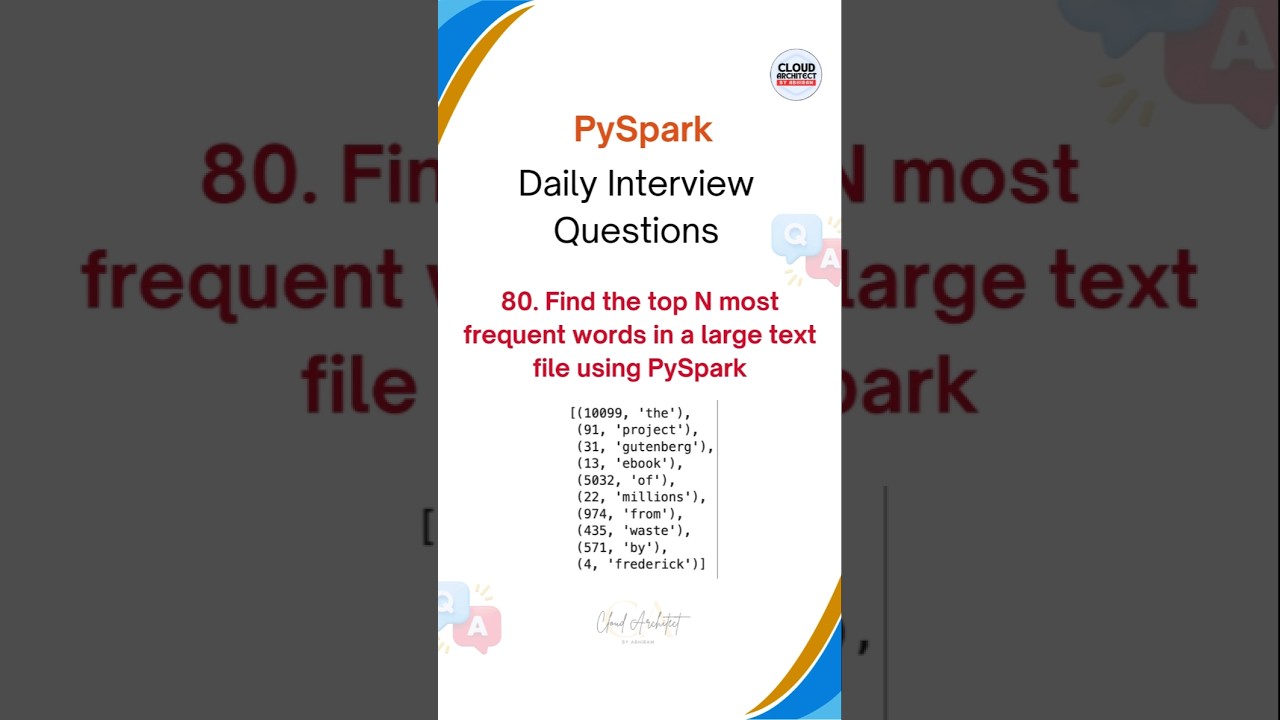

Q80. Find the top N most frequent words in a large text file using PySpark

"Need to find the most common words in a massive text file? 🔍

PySpark makes it a breeze! Learn how to extract the top N words using a simple and efficient approach. ⚡"
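The description does not show the actual code, so here is a minimal sketch of one common way to answer this question with the RDD API. The function names `tokenize` and `top_n_words`, the word pattern, and the tie-breaking rule are illustrative assumptions, not taken from the video. The caller is assumed to pass in an existing `SparkSession`.

```python
import re
from operator import add


def tokenize(line):
    """Lowercase a line and split it into words, dropping punctuation.

    The pattern (letters, digits, apostrophes) is an assumption; adjust it
    to whatever counts as a "word" in your data.
    """
    return re.findall(r"[a-z0-9']+", line.lower())


def top_n_words(spark, path, n):
    """Return the n most frequent words in the text file at `path`.

    `spark` is an existing SparkSession supplied by the caller.
    The result is a list of (word, count) tuples, most frequent first;
    ties are broken alphabetically (an illustrative choice).
    """
    counts = (
        spark.sparkContext.textFile(path)  # one record per line
        .flatMap(tokenize)                 # line -> individual words
        .map(lambda word: (word, 1))       # word -> (word, 1)
        .reduceByKey(add)                  # sum the counts per word
    )
    # takeOrdered selects the top n within each partition and merges them,
    # so the full set of counts is never sorted or collected on the driver.
    return counts.takeOrdered(n, key=lambda kv: (-kv[1], kv[0]))
```

With a running session named `spark`, a call such as `top_n_words(spark, "big.txt", 10)` (the file name is hypothetical) would return the ten most frequent words with their counts. Using `takeOrdered` rather than a full `sortBy` followed by `take` avoids a global sort when only a small N is needed.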

Don't forget to like, comment, and subscribe for more PySpark interview preparation content! 🔥💡

👉 If you found this video helpful, don’t forget to hit the like button and subscribe for more Spark tutorials!

📢 Have questions or tips of your own? Drop them in the comments below!

#PySpark #BigData #DataScience #CloudArchitectAbhiram

Top 15 Spark Interview Questions in less than 15 minutes Part-2 #bigdata #pyspark #interview

[2025 EDITION] Azure Data Engineer Interview Questions - with PYSPARK

PySpark Interview Questions | Azure Data Engineer #azuredataengineer #databricks #pyspark

5 Common PySpark Interview Questions

Understanding how to Optimize PySpark Job | Cache | Broadcast Join | Shuffle Hash Join #interview

Most important pyspark data engineer interview questions 2024

Azure Cloud Data Engineer Mock Interview | Important Questions asked in Big Data Interviews| Pyspark

Advantages of PARQUET FILE FORMAT in Apache Spark | Data Engineer Interview Questions #interview

Amgen Databricks Data Engineer interview Questions| Azure Data Engineer| PandeyGuruji

Coforge Data Engineering Python Interview Question

Some Techniques to Optimize Pyspark Job | Pyspark Interview Question| Data Engineer

PySpark Interview Questions | Azure Data Engineer #azuredataengineer #databricks #pyspark

PySpark Interview Questions | Azure Data Engineer #azuredataengineer #databricks #pyspark

PySpark Interview Questions (2025) | PySpark Real Time Scenarios

PySpark Interview Questions | Azure Data Engineer #azuredataengineer #databricks #pyspark

PySpark Interview Questions | Azure Data Engineer #azuredataengineer #databricks #pyspark

PySpark Interview Questions | Azure Data Engineer #azuredataengineer #databricks #pyspark

Understanding How to Handle Data Skewness in PySpark #interview

Azure Databricks Interview Questions And Answers | Azure Databricks Interview | Intellipaat

Azure Databricks Interview Questions 2025 [WITH REAL-TIME SCENARIOS]

Cluster Configuration in Apache Spark | Thumb rule fo optimal performance #interview #question

spark data engineer interview questions and answers | 3-7 years | Job Optimizations | Q4

PySpark Interview Questions | Azure Data Engineer #azuredataengineer #databricks #pyspark

spark data engineer interview questions and answers | 3-7 years | Job Optimizations | Q2
