224 - Recurrent and Residual U-net

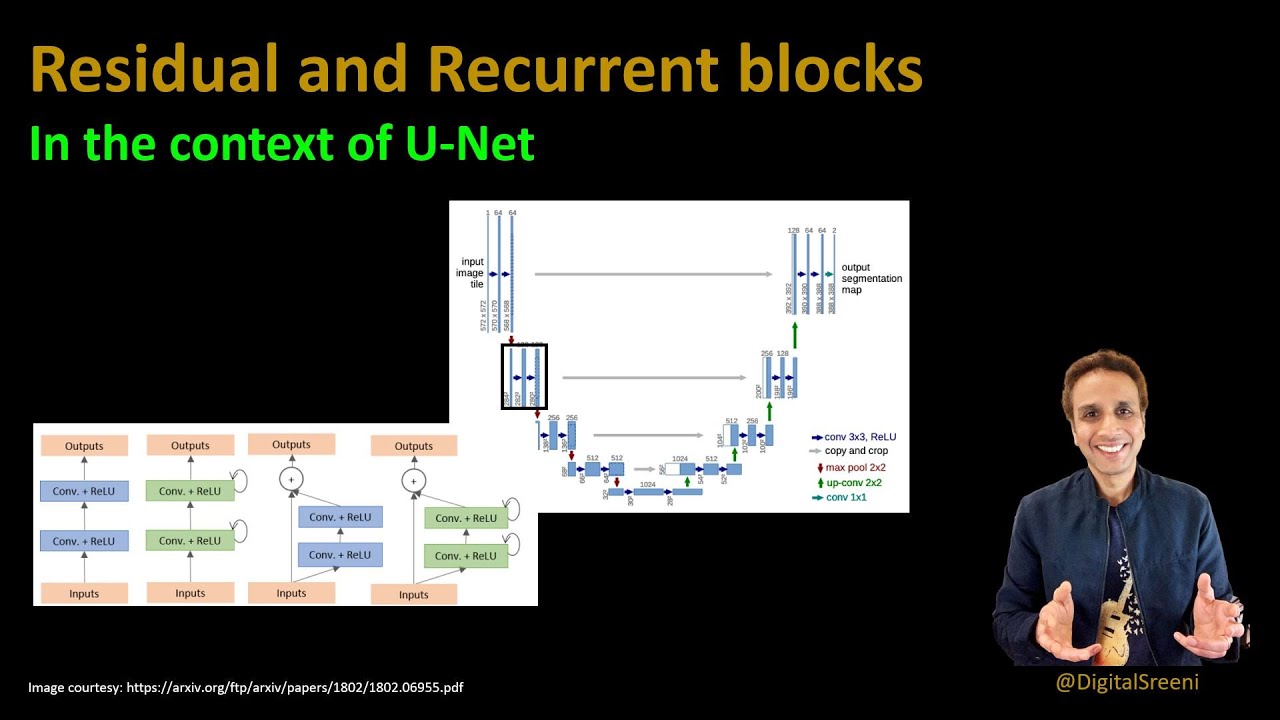

Residual Networks:

Residual networks were proposed to overcome the problems of training very deep CNNs. Simply stacking more convolutional layers onto a plain network (e.g., VGG) tends to hurt accuracy and generalization rather than improve them. To address this problem, the ResNet architecture introduced the idea of “skip connections”.

In traditional neural networks, each layer feeds only into the next layer. In networks with residual blocks, each layer feeds into the next layer and also directly into a layer about 2–3 hops away, where the shortcut is added to that layer's output. These residual connections (shortcuts) give inputs a more direct path forward across layers.
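The shortcut idea can be sketched without any deep learning framework. The example below is a minimal illustration, not the implementation used in the video: it assumes a single-channel 1D signal, odd-length kernels, and the common form y = relu(x + F(x)) where F is conv, relu, conv. The helper names (`conv1d_same`, `relu`, `residual_block`) are made up for this sketch.

```python
def conv1d_same(signal, kernel):
    """1D cross-correlation with zero padding; output length equals input length.
    Assumes an odd-length kernel."""
    pad = len(kernel) // 2
    padded = [0.0] * pad + list(signal) + [0.0] * pad
    return [
        sum(k * padded[i + j] for j, k in enumerate(kernel))
        for i in range(len(signal))
    ]


def relu(values):
    return [max(0.0, v) for v in values]


def residual_block(x, kernel_a, kernel_b):
    """y = relu(x + F(x)), where F is conv -> relu -> conv."""
    fx = conv1d_same(relu(conv1d_same(x, kernel_a)), kernel_b)
    # The shortcut: the block input is added to the output of the conv path.
    return relu([xi + fi for xi, fi in zip(x, fx)])


x = [1.0, 2.0, 3.0, 4.0]
zero = [0.0, 0.0, 0.0]
# With all-zero weights F(x) = 0, so the block passes its input through unchanged.
print(residual_block(x, zero, zero))  # [1.0, 2.0, 3.0, 4.0]
```

Because the input reaches the output even when the convolution path contributes nothing, the layers only have to learn a correction to the identity, which is one way to read the "direct path" described above.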

Recurrent convolutional networks:

A recurrent network uses feedback connections to store information over time. Recurrent networks exploit context information: as the number of time steps increases, the network draws on more and more of the surrounding neighborhood. Recurrent networks and CNNs can be combined for image-based applications. With recurrent convolutional layers, the network's state evolves over time even though the input is static. Each unit is influenced by its neighboring units, so its output incorporates context information from the image.
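To make "evolving over time on a static input" concrete, here is a small sketch of an unrolled recurrent convolutional layer. It assumes the update h_t = relu(conv(x, w_forward) + conv(h_{t-1}, w_recurrent)) on a single-channel 1D signal; the function names and kernel values are illustrative choices, not taken from the video.

```python
def conv1d_same(signal, kernel):
    """1D cross-correlation with zero padding; output length equals input length.
    Assumes an odd-length kernel."""
    pad = len(kernel) // 2
    padded = [0.0] * pad + list(signal) + [0.0] * pad
    return [
        sum(k * padded[i + j] for j, k in enumerate(kernel))
        for i in range(len(signal))
    ]


def recurrent_conv(x, w_forward, w_recurrent, steps):
    """Unroll h_t = relu(conv(x, w_forward) + conv(h_{t-1}, w_recurrent))."""
    feedforward = conv1d_same(x, w_forward)  # computed once: the input is static
    h = [max(0.0, v) for v in feedforward]   # step 0 has no feedback yet
    for _ in range(steps):
        feedback = conv1d_same(h, w_recurrent)
        h = [max(0.0, f + r) for f, r in zip(feedforward, feedback)]
    return h


impulse = [0.0, 0.0, 0.0, 1.0, 0.0, 0.0, 0.0]
identity = [0.0, 1.0, 0.0]    # feed-forward path copies the input
neighbors = [0.5, 0.0, 0.5]   # feedback path mixes in left and right neighbors

for steps in range(3):
    h = recurrent_conv(impulse, identity, neighbors, steps)
    # Count how many positions the single input spike has influenced.
    print(steps, sum(1 for v in h if v > 0))
# 0 1
# 1 3
# 2 5
```

Each extra time step widens the region influenced by a single input position, which is the growing neighborhood context described above, while the number of kernels stays fixed.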

U-net can be built using recurrent blocks, residual blocks, or a combination of the two in place of the traditional double-convolutional block.
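The block variants can be compared side by side as interchangeable functions with the same signature. This is a simplified sketch under several assumptions: a single-channel 1D signal (so the shortcut can be added without a channel-matching convolution), one shared kernel for the feed-forward and feedback paths of each recurrent layer, and no activation after the shortcut addition. All names are hypothetical.

```python
def conv1d_same(signal, kernel):
    """1D cross-correlation with zero padding; output length equals input length.
    Assumes an odd-length kernel."""
    pad = len(kernel) // 2
    padded = [0.0] * pad + list(signal) + [0.0] * pad
    return [
        sum(k * padded[i + j] for j, k in enumerate(kernel))
        for i in range(len(signal))
    ]


def relu(values):
    return [max(0.0, v) for v in values]


def double_conv(x, k1, k2):
    """Traditional U-net block: conv -> relu -> conv -> relu."""
    return relu(conv1d_same(relu(conv1d_same(x, k1)), k2))


def recurrent_conv(x, k, steps=2):
    """Recurrent conv layer; k is shared by both paths (a simplification)."""
    feedforward = conv1d_same(x, k)
    h = relu(feedforward)
    for _ in range(steps):
        h = relu([f + r for f, r in zip(feedforward, conv1d_same(h, k))])
    return h


def residual(x, k1, k2):
    """Double-conv block with the input added back through a shortcut."""
    return [xi + yi for xi, yi in zip(x, double_conv(x, k1, k2))]


def recurrent_residual(x, k1, k2):
    """Two stacked recurrent conv layers wrapped in a shortcut."""
    y = recurrent_conv(recurrent_conv(x, k1), k2)
    return [xi + yi for xi, yi in zip(x, y)]


# Any of these could fill the "block" slot at each U-net level.
BLOCKS = {
    "double_conv": double_conv,
    "residual": residual,
    "recurrent_residual": recurrent_residual,
}

x = [1.0, 2.0, 3.0]
zero = [0.0, 0.0, 0.0]
for name, block in BLOCKS.items():
    print(name, block(x, zero, zero))
# double_conv [0.0, 0.0, 0.0]
# residual [1.0, 2.0, 3.0]
# recurrent_residual [1.0, 2.0, 3.0]
```

With zeroed weights the plain block loses its input entirely, while both shortcut variants still pass it through. Keeping one signature for all three is what would let a U-net builder swap them at each encoder and decoder level.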
