Hadoop HDFS Commands and MapReduce with Example: Step-by-Step Guide | Hadoop Tutorial | IvyProSchool

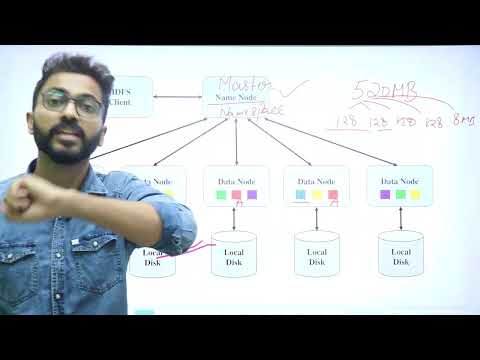

In this video, we introduce the Hadoop ecosystem and take a deep dive into the core Hadoop commands, with clear explanations and practical examples of how to interact with the Hadoop Distributed File System (HDFS) and manage your data effectively.

Next, we explore MapReduce, the programming model at the heart of Hadoop's data processing. You will learn how to run MapReduce jobs that process and analyse large amounts of data in a distributed manner, with the work split into tasks that run in parallel across the cluster.
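To make the model concrete, here is a minimal single-process sketch of the word count idea in plain Python. It is not the Hadoop job run in the video; the function names (`map_phase`, `shuffle`, `reduce_phase`, `word_count`) are hypothetical and only illustrate the map, shuffle, and reduce stages that Hadoop distributes across a cluster.

```python
from collections import defaultdict
from typing import Dict, Iterable, Iterator, List, Tuple


def map_phase(line: str) -> Iterator[Tuple[str, int]]:
    """Map: emit a (word, 1) pair for every word in one input line."""
    for word in line.lower().split():
        yield word, 1


def shuffle(pairs: Iterable[Tuple[str, int]]) -> Dict[str, List[int]]:
    """Shuffle: group all emitted values by key (Hadoop does this between phases)."""
    groups: Dict[str, List[int]] = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return groups


def reduce_phase(word: str, counts: List[int]) -> Tuple[str, int]:
    """Reduce: collapse the values for one key into a single total."""
    return word, sum(counts)


def word_count(lines: Iterable[str]) -> Dict[str, int]:
    """Run map, shuffle, and reduce in sequence on an in-memory list of lines."""
    pairs = (pair for line in lines for pair in map_phase(line))
    return dict(reduce_phase(word, counts) for word, counts in shuffle(pairs).items())


if __name__ == "__main__":
    print(word_count(["big data big cluster", "data node"]))
```

In a real cluster, each map call can run on a different node against a different block of the input, which is where the parallelism comes from; the sketch above runs everything in one process purely for illustration.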

Throughout the tutorial, we walk you through the commands and a MapReduce job, breaking them down into easy-to-follow steps and explaining the underlying concepts along the way. By the end, you will have a solid understanding of Hadoop commands and MapReduce, so that you can confidently tackle big data challenges and extract valuable insights from your datasets.

00:00:00 - Introduction

00:00:35 - Launch the Hadoop cluster

00:01:26 - Check the Hadoop cluster from a web browser

00:02:00 - HDFS commands

00:03:04 - Upload a file into HDFS from local

00:04:07 - Upload a folder into HDFS from local

00:04:37 - Run a wordcount program in Hadoop

00:05:49 - Fix the Java heap space error

00:08:23 - Copy a file from HDFS to local

00:09:45 - Conclusion
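The description does not list the exact commands typed in the video, so the following is only a sketch of what the upload, wordcount, and download chapters typically correspond to. It assumes the standard `hdfs dfs -put`, `hdfs dfs -get`, and `hadoop jar` invocations; every path and the jar location are hypothetical placeholders, and the helper names are invented for illustration. The code only builds and prints the commands and does not execute them.

```python
from typing import List


def put_cmd(local_path: str, hdfs_dir: str) -> List[str]:
    """Upload a local file or folder into an HDFS directory."""
    return ["hdfs", "dfs", "-put", local_path, hdfs_dir]


def get_cmd(hdfs_path: str, local_dir: str) -> List[str]:
    """Copy a file from HDFS back to the local file system."""
    return ["hdfs", "dfs", "-get", hdfs_path, local_dir]


def wordcount_cmd(examples_jar: str, hdfs_input: str, hdfs_output: str) -> List[str]:
    """Run the wordcount program from a MapReduce examples jar.

    The jar path is installation-specific, so it is passed in rather than guessed.
    """
    return ["hadoop", "jar", examples_jar, "wordcount", hdfs_input, hdfs_output]


if __name__ == "__main__":
    # Hypothetical paths, chosen only to show the shape of each command.
    steps = [
        put_cmd("sample.txt", "/input"),
        wordcount_cmd("hadoop-mapreduce-examples.jar", "/input", "/output"),
        get_cmd("/output/part-r-00000", "."),
    ]
    for argv in steps:
        print(" ".join(argv))
```

To actually run a step on a machine with Hadoop installed, each list could be passed to `subprocess.run(argv, check=True)`; note that the wordcount output directory must not already exist in HDFS when the job starts.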

#hadooptutorial #mapreduce #dataengineering #hadoopcommands
