
Demo on Hadoop Flume | Edureka


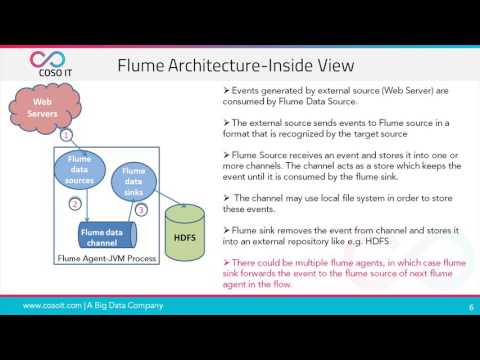

Apache Flume is a distributed, reliable service for efficiently collecting, aggregating, and moving large amounts of streaming data into the Hadoop Distributed File System (HDFS). This video shows how to load data into HDFS using Flume.
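As a rough illustration of what loading data with Flume involves, the sketch below assembles a minimal Flume agent configuration. In this configuration a source feeds a channel, and a sink drains that channel into HDFS. Several values are placeholder assumptions and do not come from the video: the agent and component names (`a1`, `r1`, `c1`, `k1`), port 44444, and the HDFS path. The property keys follow the netcat source, memory channel, and HDFS sink settings described in the Apache Flume User Guide.

```python
# Assumption: agent/component names (a1, r1, c1, k1), the port, and the HDFS
# path below are illustrative placeholders, not values from the video.
def build_flume_config(agent="a1", port=44444,
                       hdfs_path="hdfs://namenode:8020/user/flume/events"):
    """Return a Flume agent properties file: netcat source -> memory channel -> HDFS sink."""
    props = [
        # Declare the components this agent runs.
        (f"{agent}.sources", "r1"),
        (f"{agent}.channels", "c1"),
        (f"{agent}.sinks", "k1"),
        # Source: turn each newline-terminated line received on a TCP port into an event.
        (f"{agent}.sources.r1.type", "netcat"),
        (f"{agent}.sources.r1.bind", "localhost"),
        (f"{agent}.sources.r1.port", str(port)),
        # Channel: buffer events in memory between the source and the sink.
        (f"{agent}.channels.c1.type", "memory"),
        (f"{agent}.channels.c1.capacity", "1000"),
        (f"{agent}.channels.c1.transactionCapacity", "100"),
        # Sink: write events to HDFS as plain text instead of the default SequenceFile.
        (f"{agent}.sinks.k1.type", "hdfs"),
        (f"{agent}.sinks.k1.hdfs.path", hdfs_path),
        (f"{agent}.sinks.k1.hdfs.fileType", "DataStream"),
        # Wiring: a source lists its channels (plural); a sink drains exactly one channel.
        (f"{agent}.sources.r1.channels", "c1"),
        (f"{agent}.sinks.k1.channel", "c1"),
    ]
    return "\n".join(f"{key} = {value}" for key, value in props) + "\n"


if __name__ == "__main__":
    print(build_flume_config(), end="")
```

You could save the output to a file such as `flume-hdfs.conf` and start it with `bin/flume-ng agent --conf conf --conf-file flume-hdfs.conf --name a1`. After that, each line sent to port 44444 (for example with `nc localhost 44444`) becomes one event written under the configured HDFS path. Python is used here only to keep the example self-contained; in practice the properties file is usually written by hand.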


Edureka is a New Age e-learning platform that provides Instructor-Led Live Online classes for learners who prefer a hassle-free, self-paced learning environment accessible from anywhere in the world.

The topics related to Flume are extensively covered in our 'Big Data & Hadoop' course.

Call us in the US at 1800 275 9730 (toll-free) or in India at +91-8880862004.

Related videos:

Demo on Hadoop Flume | Edureka (0:27:02)

Streaming data to HDFS using Apache Flume | Big Data Hadoop Tutorial (0:11:14)

12.4. Flume | Hands-On Demo On CloudxLab (0:04:44)

Apache Flume Tutorial | Apache Hadoop Tutorial | Flume Demo | Intellipaat (0:15:36)

Fetching Twitter Data into HDFS (Hadoop) using Apache Flume .....Step By Step Guide on Windows 10 (0:16:27)

What is Flume in Hadoop | Introduction to Flume | Big Data Tutorial for Beginners Part 11 (0:16:00)

Flume- Ingesting Data into Hadoop through Flume - learn Flume (0:11:03)

Apache Flume Hadoop Ecosystem - Big Data Analytics Tutorial by Mahesh Huddar (0:10:19)

Hadoop In 5 Minutes | What Is Hadoop? | Introduction To Hadoop | Hadoop Explained |Simplilearn (0:06:21)

Twitter data extraction using Flume big data hadoop Tutorial (0:06:59)

Apache Flume Tutorial | Apache Flume Architecture | COSO IT (0:15:21)

Publishing logs data using Apache Flume | Real time data streaming on Hadoop (0:12:12)

Introduction to HBase, Sqoop & Flume | Big Data Hadoop Spark | CloudxLab (2:48:44)

Apache Flume Tutorial | Twitter Data Streaming Using Flume | Hadoop Training | Edureka (0:11:16)

12.1. Flume | Introduction (0:01:00)

Module 23 Apache Flume Introduction (Hadoop Tutorial) (0:28:45)

Pull twitter data to hadoop (hdfs) using flume (0:15:05)

3 minutes on Apache Flume with Roshan Naik (0:03:00)

Ingest live data into hadoop cluster using flume (0:01:07)

Flume NG Basics (0:07:48)

What is flume? - Hadoop (0:04:22)

Introducing Apache Flume | a distributed streaming platform for Big Data Hadoop Ecosystem (0:07:59)

How To Stream Twitter Data Into Hadoop Using Apache Flume (0:14:10)

3.15.1 Demo Ingesting Twitter Data from Apache Flume into HDFS (0:04:44)