filmov

tv

What is PySpark RDD II Resilient Distributed Dataset II PySpark II PySpark Tutorial I KSR Datavizon

Показать описание

PySpark is a great tool for performing cluster computing operations in Python. PySpark is based on Apache’s Spark which is written in Scala. But to provide support for other languages, Spark was introduced in other programming languages as well. One of the support extensions is Spark for Python known as PySpark. PySpark has its own set of operations to process Big Data efficiently. The best part of PySpark is, it follows the syntax of Python. Thus if one has great hands-on experience on Python, it takes no time to understand the practical implementation of PySpark operations.

PySpark RDD Operations

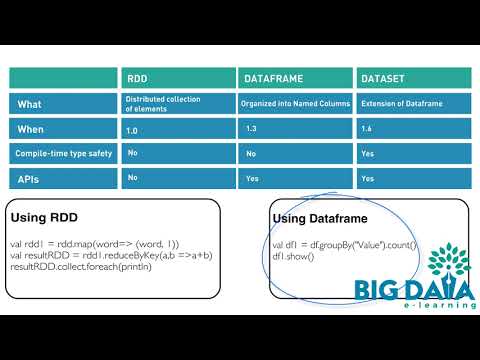

Resilient Distributed Dataset or RDD in a PySpark is a core data structure of PySpark. PySpark RDD’s is a low-level object and are highly efficient in performing distributed tasks. This article will not involve the basics of PySpark such as the creation of PySpark RDDs and PySpark DataFrames.

PySpark RDD has a set of operations to accomplish any task. These operations are of two types:

1. Transformations

2. Actions

0:00 Introduction

3:01 RDD Features

10:45 How to create a RDD

#pyspark #pysparkrdd #ResilientDistributedDataset #rddfeatures

How are we different from others?

1.24*7 recorded sessions Access & Support

2. Flexible Class Schedule

3. 100 % Job Guarantee

4. Mentors with +14 yrs.

5. Industry Oriented Courseware

6. LMS And APP availability for good live session experience.

Call us on IND: 9916961234 / 8527506810 to talk to our Course Advisors

PySpark RDD Operations

Resilient Distributed Dataset or RDD in a PySpark is a core data structure of PySpark. PySpark RDD’s is a low-level object and are highly efficient in performing distributed tasks. This article will not involve the basics of PySpark such as the creation of PySpark RDDs and PySpark DataFrames.

PySpark RDD has a set of operations to accomplish any task. These operations are of two types:

1. Transformations

2. Actions

0:00 Introduction

3:01 RDD Features

10:45 How to create a RDD

#pyspark #pysparkrdd #ResilientDistributedDataset #rddfeatures

How are we different from others?

1.24*7 recorded sessions Access & Support

2. Flexible Class Schedule

3. 100 % Job Guarantee

4. Mentors with +14 yrs.

5. Industry Oriented Courseware

6. LMS And APP availability for good live session experience.

Call us on IND: 9916961234 / 8527506810 to talk to our Course Advisors

Комментарии

0:21:59

0:21:59

0:05:15

0:05:15

0:13:40

0:13:40

0:12:41

0:12:41

0:05:01

0:05:01

0:04:09

0:04:09

0:11:36

0:11:36

0:13:17

0:13:17

0:05:03

0:05:03

0:04:17

0:04:17

0:11:45

0:11:45

0:31:56

0:31:56

0:08:50

0:08:50

0:07:50

0:07:50

0:21:10

0:21:10

0:31:19

0:31:19

0:26:52

0:26:52

0:56:50

0:56:50

0:00:54

0:00:54

0:05:10

0:05:10

0:03:24

0:03:24

0:06:11

0:06:11

0:08:03

0:08:03

0:17:21

0:17:21