filmov

tv

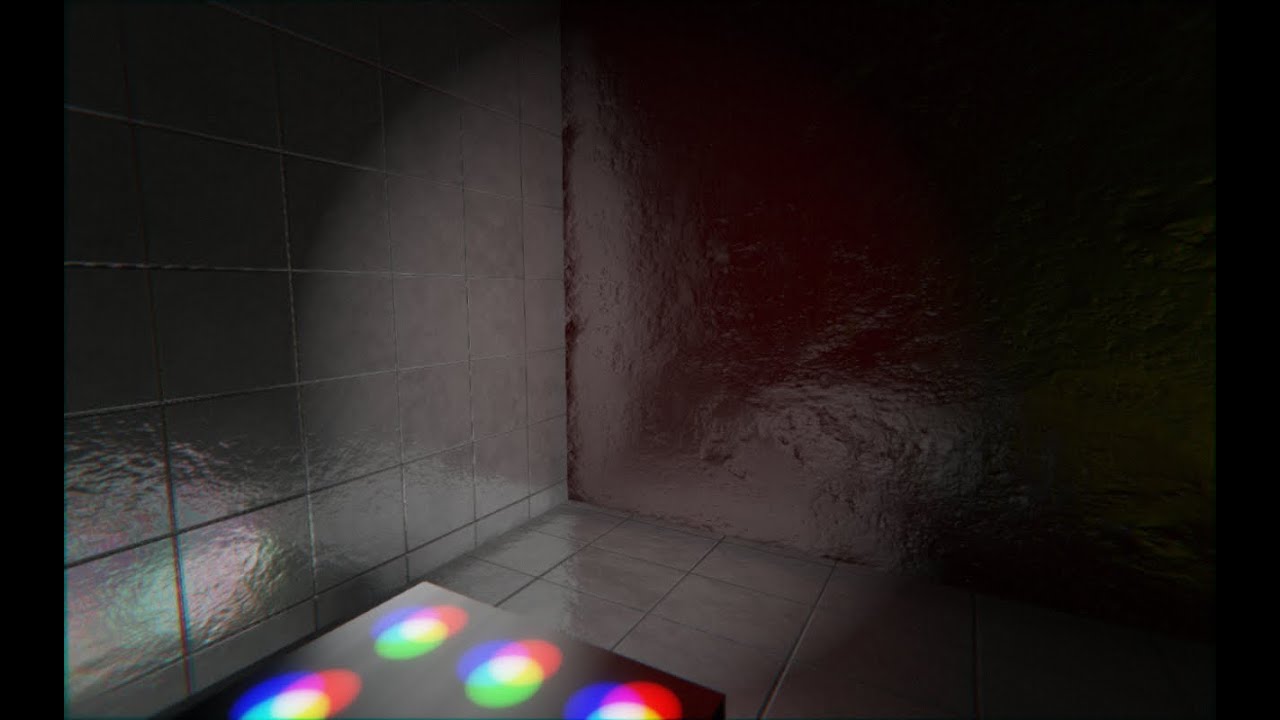

KailashEngine - Voxel Based Global Illumination - OpenGL/C#/OpenTK

This video shows one of my favourite techniques, which I've finally got working in my engine: Voxel Cone Traced Global Illumination.

The technique follows Crassin's paper and the corresponding chapter in OpenGL Insights:

1. Voxelize the scene using conservative rasterization

2. Inject light into the voxel volume

3. Mipmap the voxel volume

4. Trace cones through the voxel volume to sample both indirect diffuse and indirect specular lighting
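Step 4 is the least obvious of the four, so here is a minimal CPU-side sketch of how one cone is usually marched. It is not the engine's shader: `sample(pos, mip)` is a hypothetical lookup standing in for a trilinear, mip-selected fetch from the voxel volume, and the half-diameter step size is an assumption.

```python
import math

def trace_cone(sample, origin, direction, aperture, voxel_size, max_dist):
    """Accumulate colour front to back along one cone.

    sample(pos, mip) is a hypothetical function returning (r, g, b, a)
    from the voxel volume at the given position and mip level.
    aperture is the full cone angle in radians.
    """
    color = [0.0, 0.0, 0.0]
    alpha = 0.0
    dist = voxel_size  # start one voxel out to avoid sampling the surface itself
    tan_half = math.tan(aperture * 0.5)
    while dist < max_dist and alpha < 1.0:
        # The cone gets wider with distance, so read from a coarser mip.
        diameter = max(voxel_size, 2.0 * tan_half * dist)
        mip = math.log2(diameter / voxel_size)
        pos = tuple(o + d * dist for o, d in zip(origin, direction))
        r, g, b, a = sample(pos, mip)
        # Front-to-back compositing: later samples are hidden by earlier ones.
        weight = (1.0 - alpha) * a
        color = [c + weight * s for c, s in zip(color, (r, g, b))]
        alpha += weight
        dist += diameter * 0.5  # assumed step: half the current cone diameter
    return tuple(color), alpha
```

A wide aperture with a handful of cones gives the diffuse term; a narrow cone along the reflection vector gives the specular term.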

In his paper, Crassin describes using a Sparse Voxel Octree to store the voxel volume and all its mips. To simplify things, I decided to just stick with a 3D texture. In order to handle large scenes, I've centred the volume on the camera (just like CSM). Because of this, you'll notice the lighting flickers a bit. I've yet to code some stability into it.
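One common remedy for this kind of flicker (not something the engine does yet) is to snap the volume centre to whole-voxel steps, so the voxel grid does not slide through the scene as the camera moves. A minimal sketch, with hypothetical names and a cubic volume assumed:

```python
import math

def snapped_volume_center(camera_pos, volume_extent, resolution):
    """Snap the volume centre to multiples of the voxel size.

    volume_extent is the world-space side length of the cubic volume and
    resolution is the number of voxels per side (both assumed parameters).
    """
    voxel_size = volume_extent / resolution
    return tuple(math.floor(c / voxel_size) * voxel_size for c in camera_pos)
```

With a 64-unit volume at 128 voxels per side, the centre only changes after the camera has moved at least half a unit along an axis. Snapping to the voxel size of the coarsest mip that the cones read would be stricter still.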

To simplify it further, I used OpenGL's built-in mipmapping. The performance isn't the best, but one step at a time, right?
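For reference, one mip step of a 3D texture amounts to something like the 2x2x2 box filter below. This is a CPU sketch for a single-channel cubic volume; the filter that `glGenerateMipmap` actually applies is implementation-defined, so treat the plain average as an assumption.

```python
def downsample(volume):
    """Halve a cubic volume by averaging each 2x2x2 block.

    volume[z][y][x] holds floats; the side length must be even.
    """
    n = len(volume) // 2
    out = [[[0.0] * n for _ in range(n)] for _ in range(n)]
    for z in range(n):
        for y in range(n):
            for x in range(n):
                total = 0.0
                for dz in (0, 1):
                    for dy in (0, 1):
                        for dx in (0, 1):
                            total += volume[2 * z + dz][2 * y + dy][2 * x + dx]
                out[z][y][x] = total / 8.0
    return out
```

Calling it repeatedly until the side length reaches 1 produces the full mip chain that the cones sample from.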

As you can see from the video, this effect supports any number of lights in my engine (however many your rig can handle). To achieve this, I inject light into the voxel volume during each light's shadow depth pass. Since imageStore runs in the fragment stage, before the pass has had time to write depth (which happens after the fragment stage), I had to do the following in 2 passes:

1. Render the scene from the light's POV writing only to the attached depth buffer

2. Disable depthMask, do not clear the depth buffer, and render the scene again from the light's POV. This time I use layout(early_fragment_tests) in; so depth culling is done prior to the fragment stage.
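The two passes above can be simulated on the CPU to see why they work. In this sketch each fragment is a hypothetical `(texel, depth, voxel)` tuple, and the depth comparison is assumed to be less-or-equal so that a surface passes against its own depth from the first pass.

```python
def depth_prepass(fragments):
    """Pass 1: depth writes on, keep the nearest depth per shadow-map texel."""
    depth = {}
    for texel, z, _voxel in fragments:
        if z < depth.get(texel, float("inf")):
            depth[texel] = z
    return depth

def inject_pass(fragments, depth):
    """Pass 2: depth writes off, depth test runs before the fragment body."""
    stored = set()
    for texel, z, voxel in fragments:
        if z <= depth[texel]:   # early test (assumed less-or-equal depth func)
            stored.add(voxel)   # stands in for imageStore into the volume
    return stored

# Two surfaces cover texel (0, 0); only the nearer one is lit by this light.
frags = [((0, 0), 0.8, "far"), ((0, 0), 0.3, "near"), ((1, 0), 0.5, "other")]
lit = inject_pass(frags, depth_prepass(frags))
```

Here `lit` contains `"near"` and `"other"` but not `"far"`, whatever order the fragments arrive in.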

This worked out totally fine because all I was doing was storing the light depth in a texture to use later with VSM. Now I was able to imageStore into the voxel volume while taking occluders into account. To get the injection position, I multiply the world position by a camera position matrix (since the volume is centred on the camera) and by the volume dimensions, and voilà!
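Written out without the matrix, the injection position boils down to something like the sketch below. The parameter names are hypothetical and a cubic volume is assumed; the engine's actual matrix may differ in convention.

```python
def world_to_voxel(world_pos, volume_center, volume_extent, resolution):
    """Map a world-space position to integer voxel coordinates.

    Returns None when the position lies outside the camera-centred volume.
    """
    coords = []
    for p, c in zip(world_pos, volume_center):
        u = (p - c) / volume_extent + 0.5  # [0, 1) inside the volume
        if not 0.0 <= u < 1.0:
            return None
        coords.append(int(u * resolution))
    return tuple(coords)
```

The subtraction of `volume_center` plays the role of the camera position matrix, and the multiplication by `resolution` is the volume-dimensions step.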

The real sneaky part was modifying Crassin's atomic averaging function. Instead of averaging all the values written to the same voxel, I simply take the maximum.
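As a rough illustration of the idea, here is a per-channel maximum on packed RGBA8 values. Whether the engine compares per channel or compares the packed integer directly is not stated, so the per-channel choice is an assumption. On the GPU, the merge would sit inside the same compare-and-swap retry loop as the averaging version.

```python
def pack_rgba8(r, g, b, a):
    """Pack four 0-255 channels into one 32-bit integer, r in the low byte."""
    return (a << 24) | (b << 16) | (g << 8) | r

def unpack_rgba8(v):
    return (v & 0xFF, (v >> 8) & 0xFF, (v >> 16) & 0xFF, (v >> 24) & 0xFF)

def merge_max(stored, incoming):
    """Combine two packed values by taking the larger of each channel."""
    channels = (max(s, i) for s, i in zip(unpack_rgba8(stored),
                                           unpack_rgba8(incoming)))
    return pack_rgba8(*channels)
```

Unlike a running average, the maximum needs no sample counter and gives the same result regardless of the order in which lights and fragments arrive.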

All that was left was to attenuate the light in the light depth pass.

I've yet to add this technique to point and directional lights but doing so is trivial.
