Coding a Neural Network from Scratch in Pure JAX | Machine Learning with JAX | Tutorial #3

❤️ Become a patron of The AI Epiphany on Patreon ❤️

👨👩👧👦 Join our Discord community 👨👩👧👦

Watch me code a neural network from scratch! 🥳 This is the 3rd video in the JAX tutorial series.

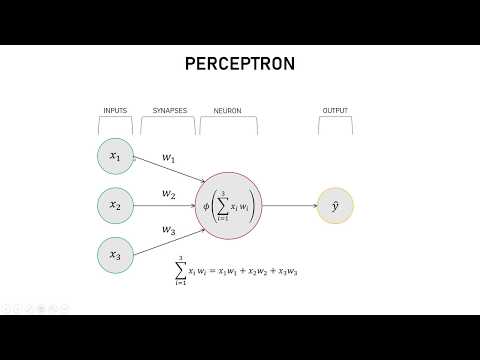

In this video, I create an MLP (multi-layer perceptron) and train it as a classifier on MNIST (swapping in a more complex dataset would be trivial), all in pure JAX (no Flax/Haiku/Optax).
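As a rough illustration of what "pure JAX" means here, the sketch below builds an MLP as a plain list of `(W, b)` pairs, writes the forward pass for a single example, and then batches it with `jax.vmap`. It is a minimal sketch, not the video's exact code. The helper names `init_mlp` and `predict`, the 784-512-256-10 layer widths, the 0.01 Gaussian init scale, and the ReLU/log-softmax choices are all assumptions.

```python
import jax
import jax.numpy as jnp
from jax.scipy.special import logsumexp


def init_mlp(layer_widths, key, scale=0.01):
    """Return a list of (W, b) pairs, one per layer, with small Gaussian values."""
    params = []
    # One PRNG key per layer so every layer gets independent random numbers
    keys = jax.random.split(key, len(layer_widths) - 1)
    for in_width, out_width, layer_key in zip(layer_widths[:-1], layer_widths[1:], keys):
        w_key, b_key = jax.random.split(layer_key)
        W = scale * jax.random.normal(w_key, (out_width, in_width))
        b = scale * jax.random.normal(b_key, (out_width,))
        params.append((W, b))
    return params


def predict(params, x):
    """Forward pass for ONE flattened image x; returns log-probabilities."""
    hidden = x
    for W, b in params[:-1]:
        hidden = jax.nn.relu(W @ hidden + b)  # ReLU on every hidden layer
    W_last, b_last = params[-1]
    logits = W_last @ hidden + b_last
    return logits - logsumexp(logits)  # log-softmax


# vmap maps predict over the leading (batch) axis of x; params are shared
batched_predict = jax.vmap(predict, in_axes=(None, 0))

params = init_mlp([784, 512, 256, 10], jax.random.PRNGKey(0))
dummy_batch = jnp.ones((8, 784))  # stand-in for 8 flattened 28x28 MNIST images
log_probs = batched_predict(params, dummy_batch)
print(log_probs.shape)  # (8, 10)
```

Writing `predict` for one image and letting `jax.vmap` add the batch dimension keeps the forward pass free of batch-axis bookkeeping.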

I then add cool visualizations such as:

* Visualizing the MLP's learned weights

* Visualizing embeddings of a batch of images with t-SNE

* Analyzing dead neurons
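One common definition of a dead ReLU neuron is a hidden unit that outputs exactly zero for every input in a batch (or in the whole dataset). The sketch below counts such units per hidden layer. It assumes the same list-of-`(W, b)` parameter layout with ReLU hidden layers. `hidden_activations`, `count_dead_neurons`, and the toy weights are hypothetical, and the video's exact criterion may differ.

```python
import jax
import jax.numpy as jnp


def hidden_activations(params, x):
    """Post-ReLU activations of every hidden layer for ONE example x."""
    activations = []
    hidden = x
    for W, b in params[:-1]:  # the last (W, b) is the output layer, skip it
        hidden = jax.nn.relu(W @ hidden + b)
        activations.append(hidden)
    return activations


def count_dead_neurons(params, batch):
    """Per hidden layer, count units whose ReLU output is 0 for every example."""
    # vmap returns a list with one (batch_size, width) array per hidden layer
    acts = jax.vmap(hidden_activations, in_axes=(None, 0))(params, batch)
    return [int(jnp.sum(jnp.all(a == 0, axis=0))) for a in acts]


# Hypothetical toy network: 2 inputs -> 3 hidden units -> 2 outputs.
# Hidden unit 1 has a large negative bias, so it never fires on this batch.
W1 = jnp.array([[1.0, 0.0], [-1.0, 0.0], [0.0, 1.0]])
b1 = jnp.array([0.0, -10.0, 0.0])
W2 = jnp.ones((2, 3))
b2 = jnp.zeros(2)
toy_params = [(W1, b1), (W2, b2)]

batch = jnp.array([[1.0, 2.0], [3.0, 4.0]])
print(count_dead_neurons(toy_params, batch))  # [1]
```

On a real network, the batch would be a set of MNIST images. A large count suggests those units pass no gradient through the ReLU on that data and have effectively stopped learning.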

Credit:

▬▬▬▬▬▬▬▬▬▬▬▬▬▬▬▬▬▬▬▬▬▬▬▬

▬▬▬▬▬▬▬▬▬▬▬▬▬▬▬▬▬▬▬▬▬▬▬▬

⌚️ Timetable:

00:00:00 Intro, structuring the code

00:03:10 MLP initialization function

00:13:30 Prediction function

00:24:10 PyTorch MNIST dataset

00:31:40 PyTorch data loaders

00:39:55 Training loop

00:49:15 Adding the accuracy metric

01:01:45 Visualize the image and prediction

01:04:40 Small code refactoring

01:09:25 Visualizing MLP weights

01:11:30 Visualizing embeddings using t-SNE

01:17:55 Analyzing dead neurons

01:24:35 Outro
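The training loop and accuracy metric from the timetable can be sketched as a jitted SGD step plus an arg-max comparison. This sketch rests on several assumptions: plain SGD with an illustrative `LEARNING_RATE` of 0.1, integer labels, cross-entropy on log-softmax outputs, and a random stand-in batch in place of the PyTorch MNIST `DataLoader`. The names `init_mlp`, `predict`, `loss_fn`, `update`, and `accuracy` are hypothetical, and the first two are repeated so the block runs on its own.

```python
import jax
import jax.numpy as jnp
from jax.scipy.special import logsumexp

LEARNING_RATE = 0.1  # illustrative value, not taken from the video


def init_mlp(layer_widths, key, scale=0.01):
    """Return a list of (W, b) pairs with small Gaussian values."""
    params = []
    keys = jax.random.split(key, len(layer_widths) - 1)
    for n_in, n_out, k in zip(layer_widths[:-1], layer_widths[1:], keys):
        w_key, b_key = jax.random.split(k)
        params.append((scale * jax.random.normal(w_key, (n_out, n_in)),
                       scale * jax.random.normal(b_key, (n_out,))))
    return params


def predict(params, x):
    """Forward pass for one flattened image; returns log-probabilities."""
    for W, b in params[:-1]:
        x = jax.nn.relu(W @ x + b)
    W, b = params[-1]
    logits = W @ x + b
    return logits - logsumexp(logits)


batched_predict = jax.vmap(predict, in_axes=(None, 0))


def loss_fn(params, images, labels):
    """Mean cross-entropy; labels are integer class indices."""
    log_probs = batched_predict(params, images)
    one_hot = jax.nn.one_hot(labels, log_probs.shape[-1])
    return -jnp.mean(jnp.sum(one_hot * log_probs, axis=-1))


@jax.jit
def update(params, images, labels):
    """One step of plain SGD: every leaf p becomes p - LEARNING_RATE * grad."""
    loss, grads = jax.value_and_grad(loss_fn)(params, images, labels)
    new_params = jax.tree_util.tree_map(
        lambda p, g: p - LEARNING_RATE * g, params, grads)
    return new_params, loss


def accuracy(params, images, labels):
    """Fraction of examples whose arg-max prediction equals the label."""
    predicted_class = jnp.argmax(batched_predict(params, images), axis=1)
    return jnp.mean(predicted_class == labels)


# Random stand-in batch instead of the PyTorch MNIST DataLoader
images = jax.random.normal(jax.random.PRNGKey(1), (32, 784))
labels = jnp.arange(32) % 10
params = init_mlp([784, 128, 10], jax.random.PRNGKey(0))

losses = []
for _ in range(100):
    params, loss = update(params, images, labels)
    losses.append(float(loss))
print(losses[0], losses[-1], float(accuracy(params, images, labels)))
```

In a real loop, `update` would be called once per batch from the data loader, after converting the tensors to NumPy or JAX arrays. `jax.jit` compiles it on the first call and reuses the compiled version as long as the input shapes stay the same.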

▬▬▬▬▬▬▬▬▬▬▬▬▬▬▬▬▬▬▬▬▬▬▬▬

💰 BECOME A PATRON OF THE AI EPIPHANY ❤️

If these videos, GitHub projects, and blogs help you, consider supporting me on Patreon!

Huge thanks to these AI Epiphany patrons:

Eli Mahler

Petar Veličković

Bartłomiej Danek

Zvonimir Sabljic

▬▬▬▬▬▬▬▬▬▬▬▬▬▬▬▬▬▬▬▬▬▬▬▬

▬▬▬▬▬▬▬▬▬▬▬▬▬▬▬▬▬▬▬▬▬▬▬▬

#jax #neuralnetwork #coding
