Analog computing will take over 30 billion devices by 2040. Wtf does that mean? | Hard Reset

About the episode: This model of computing would use 1/1000th of the energy that today’s computers use. So why aren’t we using it?

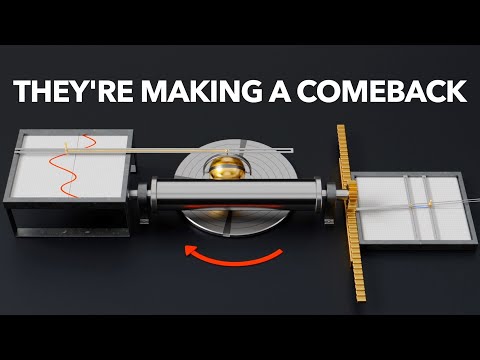

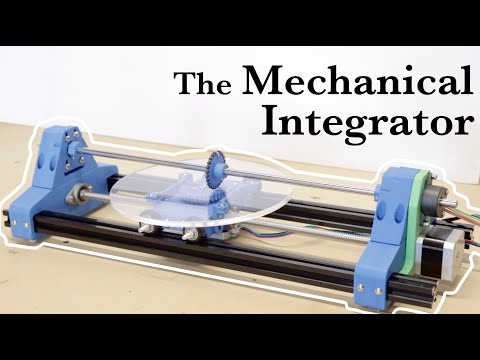

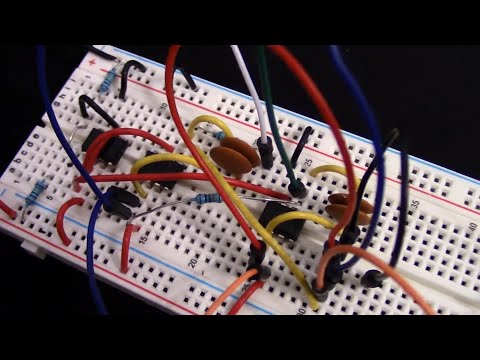

What if the next big technology was actually a pretty old technology? The first computers ever built were analog, and a return to analog processing might allow us to rebuild computing entirely.

Analog computing could offer the same programmability and processing power as the digital standard while using about 1/1000th of the energy.

But would switching from digital to analog change how we interact with our technology? Aspinity is tackling the major hurdles to optimize the future landscape of computing.

◠◠◠◠◠◠◠◠◠◠◠◠◠◠◠◠◠◠

Read more of our stories on future technology:

Inventions that are fighting the rise of facial recognition technology

The technology we (or aliens) need for long-distance interstellar travel

3 emerging technologies that will give renewable energy storage a boost

◡◡◡◡◡◡◡◡◡◡◡◡◡◡◡◡◡◡◡

Watch our original series:

◠◠◠◠◠◠◠◠◠◠◠◠◠◠◠◠◠◠◠

About Freethink

No politics, no gossip, no cynics. At Freethink, we believe the daily news should inspire people to build a better world. While most media is fueled by toxic politics and negativity, we focus on solutions: the smartest people, the biggest ideas, and the most groundbreaking technology shaping our future.

◡◡◡◡◡◡◡◡◡◡◡◡◡◡◡◡◡◡◡

Enjoy Freethink on your favorite platforms:
