filmov

tv

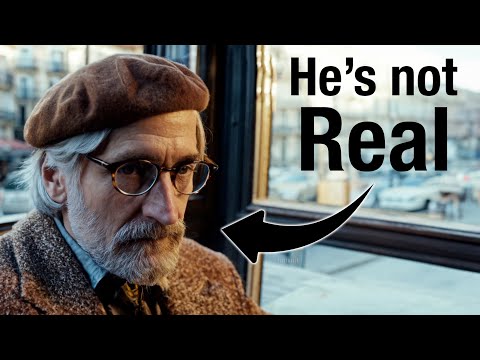

OpenAI's Sora: Text-to-Video AI is a World Simulator?!


check out my cool lil leaderboard website!

Sora

This video is supported by the kind Patrons & YouTube Members:

🙏Andrew Lescelius, alex j, Chris LeDoux, Alex Maurice, Miguilim, Deagan, FiFaŁ, Daddy Wen, Tony Jimenez, Panther Modern, Jake Disco, Demilson Quintao, Shuhong Chen, Hongbo Men, happi nyuu nyaa, Carol Lo, Mose Sakashita, Miguel, Bandera, Gennaro Schiano, gunwoo, Ravid Freedman, Mert Seftali, Mrityunjay, Richárd Nagyfi, Timo Steiner, Henrik G Sundt, projectAnthony, Brigham Hall, Kyle Hudson, Kalila, Jef Come, Jvari Williams, Tien Tien, BIll Mangrum, owned, Janne Kytölä, SO, Richárd Nagyfi

[Music] massobeats - lucid


Introducing Sora — OpenAI’s text-to-video model

OpenAI's new text-to-video GenAI model Sora | TechCrunch

OpenAI’s Sora: How to Spot AI-Generated Videos | WSJ

OpenAI unveils text-to-video tool Sora

OpenAI Sora: All Demo Videos with Prompts | Upscaled 4K

How To Use OpenAI Sora Video Generator 2024 (AI Text-to-Video)

OpenAI's new AI “Sora” shocks everyone! (Text-to-Video)

Watch: OpenAI Tool Creates Realistic AI Videos | WSJ News

Sora Competitor? 🤯 Kling AI just released! Kling AI review and Runway comparisons

OpenAI shocks the world yet again… Sora first look

OpenAI unveils new text-to-video AI tool Sora

The FUTURE OF AI - OpenAI Sora | Text-To-Video AI

Open AI Releases the BEST AI Video Generator BY FAR. Sora Text to Video

Vidu VS OpenAI Sora - AI Text to Video Full Comparison | Which is Best AI Video Generator?

AI Generated Videos Just Changed Forever

Wow... OpenAI Sora is the FUTURE of AI

Sora - unbelievable text to video ai model from OpenAI

OpenAI’s Sora Does Video-to-Video #AI #technology #sora

OpenAI Shocks the AI Video World - Sora Changes Everything

OpenAI SORA: The FUTURE of AI (BEST Text-To-Video Model) | Ishan Sharma

OpenAI Sora: The Age Of AI Is Here!

OpenAI Video Generator Just Shocked EVERYONE(Sora)

How To Access Sora? OpenAI's text-to-video model creates realistic videos from text instruction...

THIS IS AN AI GENERATED VIDEO
