filmov

tv

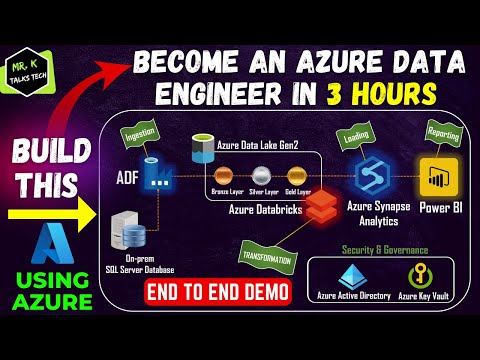

Part-3: Real time end to end Azure Data Engineering Project


Welcome to AnalytixCloud. In this hands-on tutorial, we'll work through a real-time Azure Data Engineering project. Whether you're an experienced data engineer looking to expand your skills or a newcomer to Azure, this project will give you valuable insights and practical experience.

This is the continuation of Parts 1 and 2. If you haven't watched them yet, we recommend watching those videos before this one:

In this video, we'll cover:

1. Data Ingestion:

===================================

We've designed a dynamic pipeline in Azure Data Factory that handles both full and incremental data loads. By adapting to the data and requirements of each task, the pipeline keeps data integration efficient and scalable, maintains data consistency, and minimizes processing time across data scenarios.
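To make the full versus incremental distinction concrete, here is a minimal Python sketch of how a source query might be chosen per table. It is not the pipeline built in the video: the helper `build_source_query`, the table names, the `ModifiedDate` column, and the watermark value are all assumptions made for illustration, and a real pipeline would typically parameterize the query rather than format it as a string.

```python
from typing import Optional


def build_source_query(table: str, load_type: str,
                       watermark_column: Optional[str] = None,
                       last_watermark: Optional[str] = None) -> str:
    """Return the extraction query for one table (hypothetical helper)."""
    if load_type == "full":
        # Full load: re-read the whole table on every run.
        return f"SELECT * FROM {table}"
    if load_type == "incremental":
        if not watermark_column or last_watermark is None:
            raise ValueError("incremental load needs a watermark column and value")
        # Incremental load: only rows changed since the last recorded watermark.
        return (f"SELECT * FROM {table} "
                f"WHERE {watermark_column} > '{last_watermark}'")
    raise ValueError(f"unknown load type: {load_type}")


# Hypothetical tables and watermark, for illustration only.
full_query = build_source_query("dbo.Customers", "full")
incr_query = build_source_query("dbo.Orders", "incremental",
                                "ModifiedDate", "2024-01-01T00:00:00")
print(full_query)
print(incr_query)
```

After a successful incremental run, the stored watermark would be advanced to the highest value just loaded so that the next run picks up from there.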

2. Control Table Mechanism:

===================================

A control table in an Azure Data Factory (ADF) pipeline serves as a dynamic configuration source. It lets you centralize and manage parameter values, connection strings, and other settings for your data workflows, which simplifies maintenance and helps the solution scale. Because the pipelines read their settings from this table, they can adapt to changing requirements without code modifications.
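The idea can be sketched in Python as a list of configuration rows that a loop turns into copy tasks, which is roughly what a Lookup activity followed by a ForEach activity does in ADF. The column names (`source_table`, `sink_folder`, `load_type`, `watermark_column`, `is_active`) and the rows themselves are assumptions for illustration; the control table used in the video may have a different shape.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass
class ControlRow:
    """One row of a hypothetical control table."""
    source_table: str
    sink_folder: str
    load_type: str                      # "full" or "incremental"
    watermark_column: Optional[str] = None
    is_active: bool = True


# Hypothetical contents; in practice these rows would live in a database table.
CONTROL_TABLE: List[ControlRow] = [
    ControlRow("dbo.Customers", "raw/customers", "full"),
    ControlRow("dbo.Orders", "raw/orders", "incremental", "ModifiedDate"),
    ControlRow("dbo.Legacy", "raw/legacy", "full", is_active=False),
]


def plan_copy_tasks(rows: List[ControlRow]) -> List[Dict[str, Optional[str]]]:
    """Turn active control rows into per-table copy parameters."""
    tasks = []
    for row in rows:
        if not row.is_active:
            continue  # disabled in the control table, so no pipeline change is needed
        tasks.append({
            "source_table": row.source_table,
            "sink_folder": row.sink_folder,
            "load_type": row.load_type,
            "watermark_column": row.watermark_column,
        })
    return tasks


tasks = plan_copy_tasks(CONTROL_TABLE)
for task in tasks:
    print(task)
```

Adding a new table to the ingestion then means inserting a row, and retiring one means flipping its active flag, with no change to the pipeline definition.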

3. Error Log Capturing:

====================================

You can capture error logs through stored procedures in Azure SQL Database by creating a dedicated error log table. The stored procedures insert error details, timestamps, and additional context into that table, which makes it easier to track and diagnose issues in your database applications. The result is a comprehensive record of errors for troubleshooting and analysis.
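The pattern can be tried locally with a small Python sketch. Note the substitutions: an in-memory SQLite database stands in for Azure SQL Database, and because SQLite has no stored procedures, the Python function `log_error` plays the role the stored procedure would play. The table layout, column names, and the pipeline and activity names are assumptions for illustration, not the schema from the video.

```python
import sqlite3
from datetime import datetime, timezone

# In-memory SQLite database as a stand-in for Azure SQL Database.
conn = sqlite3.connect(":memory:")
conn.execute("""
    CREATE TABLE error_log (
        id            INTEGER PRIMARY KEY AUTOINCREMENT,
        pipeline_name TEXT NOT NULL,
        activity_name TEXT NOT NULL,
        error_message TEXT NOT NULL,
        logged_at     TEXT NOT NULL
    )
""")


def log_error(db: sqlite3.Connection, pipeline_name: str,
              activity_name: str, error_message: str) -> None:
    """Insert one error record; stands in for a logging stored procedure."""
    db.execute(
        "INSERT INTO error_log "
        "(pipeline_name, activity_name, error_message, logged_at) "
        "VALUES (?, ?, ?, ?)",
        (pipeline_name, activity_name, error_message,
         datetime.now(timezone.utc).isoformat()),
    )
    db.commit()


# Simulate a failing activity and record the failure.
try:
    raise RuntimeError("source table dbo.Orders not found")
except RuntimeError as exc:
    log_error(conn, "pl_ingest", "CopyOrders", str(exc))

rows = conn.execute(
    "SELECT pipeline_name, activity_name, error_message FROM error_log"
).fetchall()
print(rows)
```

Keeping the pipeline name, activity name, and timestamp alongside the message is what makes the log useful later, since it lets you filter failures by pipeline or time window.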

You can find the resources used in this video below:

Mob: +91-7411310205

Please subscribe for more videos:

Don't forget to like, subscribe, and hit the notification bell to stay updated with our latest Azure and data engineering tutorials. If you have any questions or need further clarification on any topic covered in this video, please feel free to leave a comment below, and we'll be happy to assist you.

Thank you for watching, and let's dive into the world of Azure Data Engineering together!

#azuredataengineer #dataengineering #dataengineeringtutorial #endtoendproject

