filmov.tv

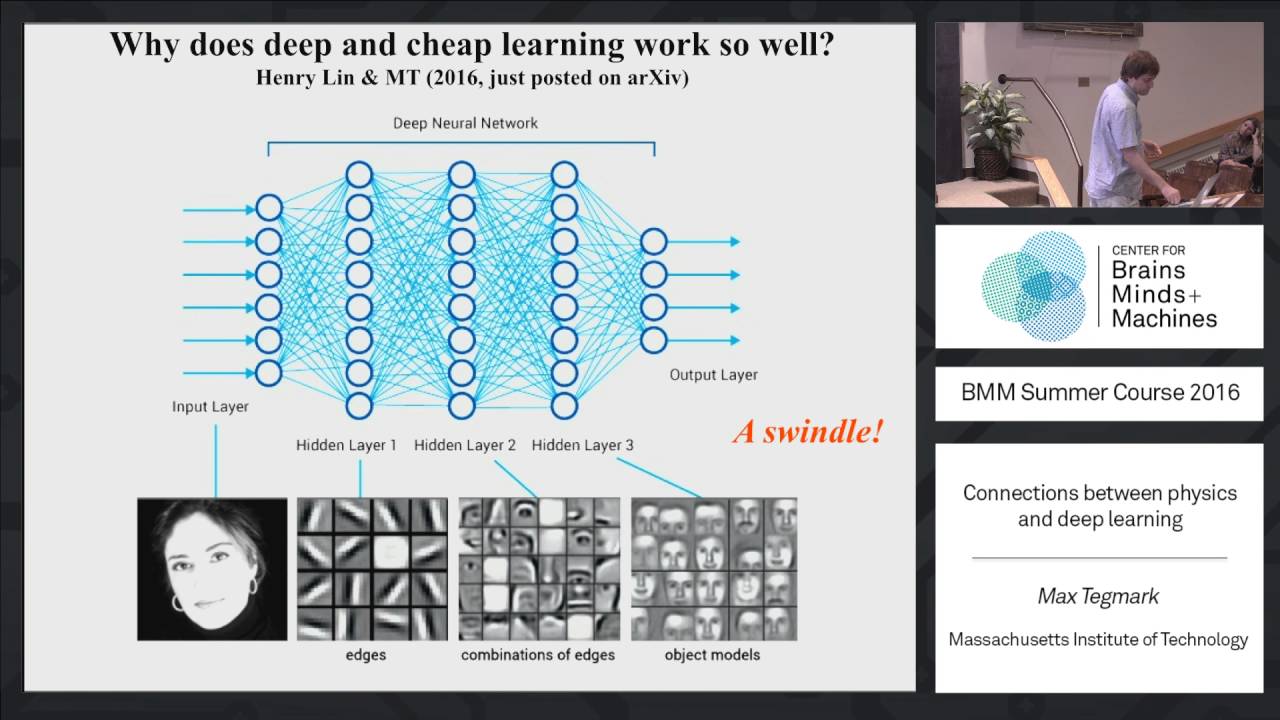

Connections between physics and deep learning


Max Tegmark - MIT

Connections between physics and deep learning (0:51:46)

But what is a neural network? | Deep learning chapter 1 (0:18:40)

Centripetal or Centrifugal Force Demo? #physics (0:00:09)

Quantum Physics & Spirituality: The Mind-Blowing Connection (0:04:58)

Best Way To Learn Physics #physics (0:00:16)

QUANTUM PHYSICS and “Consciousness”: How Frequency Shapes Our Reality (0:00:33)

The Connections Between Physics and Finance (0:50:43)

Demonstrating atmospheric pressure 💨🧪 #science #physics #scienceexperiment #sciencefacts (0:00:30)

Relational Quantum Mechanics: A World of Perspectives and Interactions | Philosopher Friends (0:14:29)

Quantum Physics and Emptiness: Parallels Between Buddhism and Science (0:34:17)

Science of Manifestation: relation to Quantum Physics & How to actually Manifest your Desires (0:17:26)

How much does a PHYSICS RESEARCHER make? (0:00:44)

Brian Cox on quantum computing and black hole physics (0:06:43)

Quantum Mysticism: Exploring the Connection between Physics and Spirituality (0:04:07)

Moment of Inertia and Angular velocity Demonstration #physics (0:00:33)

Experts Shocked: What OpenAI Deep Research Just Uncovered About The Tower of Babel (0:14:38)

Maths vs Physics (0:00:25)

What is Quantum Physics⁉️ Neil deGrasse Tyson on #physics #quantum #science (0:01:00)

physics ✨ #shorts #physics #astronomy #skywalk (0:00:08)

The Secret Connection Between Quantum Physics And IFA Religion (0:09:18)

The Big Misconception About Electricity (0:14:48)

Quantum Computers, Explained With Quantum Physics (0:09:59)

How to use QUANTUM PHYSICS to manifest ANY reality you want | Dr. Joe Dispenza (0:00:51)

The Most Misunderstood Concept in Physics (0:27:15)