
Apache Spark Add Packages or Jars to Spark Session | Spark Tutorial


#apachespark #azure #dataengineering

Apache Spark Tutorial

Apache Spark is an open-source unified analytics engine for large-scale data processing. Spark provides an interface for programming clusters with implicit data parallelism and fault tolerance.

In this video, we will learn how to add external libraries, such as the XML data source package, to an interactive Spark session using PySpark and Spark with Scala.
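When Spark is started from a Jupyter notebook or a plain Python script rather than through the pyspark launcher, the same effect as --packages can be had with the spark.jars.packages configuration property. The following is a minimal sketch, assuming pyspark is installed and Maven Central is reachable. The helper names packages_conf and create_session, the spark-xml coordinates, and the file books.xml with row tag "book" are hypothetical examples, not taken from the video.

```python
# Minimal sketch (assumption: pyspark is installed and Spark can reach Maven
# Central). Helper names here are hypothetical, chosen for illustration.


def packages_conf(coordinates):
    """Return the Spark config entry equivalent to the --packages flag.

    Each coordinate must look like "groupId:artifactId:version".
    """
    coords = [c.strip() for c in coordinates if c.strip()]
    for c in coords:
        if len(c.split(":")) != 3:
            raise ValueError(f"expected groupId:artifactId:version, got {c!r}")
    return {"spark.jars.packages": ",".join(coords)}


def create_session(coordinates, app_name="add-packages-demo"):
    """Start a SparkSession that downloads the given packages at startup."""
    # Imported lazily so packages_conf() can be used without pyspark installed.
    from pyspark.sql import SparkSession

    builder = SparkSession.builder.appName(app_name)
    for key, value in packages_conf(coordinates).items():
        builder = builder.config(key, value)
    # Packages are resolved when the JVM starts, so this must run before any
    # other SparkSession/SparkContext exists in the same Python process.
    return builder.getOrCreate()


# Example usage (hypothetical coordinates and file; match the _2.12/_2.13
# suffix to the Scala version of your Spark build):
# spark = create_session(["com.databricks:spark-xml_2.12:0.15.0"])
# df = spark.read.format("xml").option("rowTag", "book").load("books.xml")
```

Setting the property on a session that is already running has no effect, which is why notebooks usually need a kernel restart after changing it.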

======================================

How to install Spark on Windows:

How to configure Spark in Anaconda/Jupyter Notebook

How to read XML using Databricks in Apache Spark:

Methods to install external packages in Databricks:

Set up HBase on Windows

=====================================

Dataset to download:

Code Snippet:

pyspark --packages groupId:artifactId:version

or

pyspark --jars /path/to/jarfile.jar
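Both options take comma-separated lists: --packages expects Maven coordinates in the form groupId:artifactId:version (Spark downloads them plus their dependencies), while --jars expects paths to JAR files you already have. The same flags work with spark-shell (Spark with Scala) and spark-submit. The sketch below only builds and prints the command line; the helper name launch_args and the spark-xml coordinates are illustrative assumptions.

```python
import shlex

# Launchers that accept --packages and --jars (spark-shell is the Scala REPL).
LAUNCHERS = ("pyspark", "spark-shell", "spark-submit")


def launch_args(launcher, packages=(), jars=()):
    """Build an argv list that adds Maven packages and/or local JARs.

    packages: Maven coordinates "groupId:artifactId:version"
    jars:     paths to local JAR files
    Multiple values are joined with commas, which is how Spark expects them.
    """
    if launcher not in LAUNCHERS:
        raise ValueError(f"unsupported launcher: {launcher!r}")
    args = [launcher]
    if packages:
        args += ["--packages", ",".join(packages)]
    if jars:
        args += ["--jars", ",".join(jars)]
    return args


# Print the command instead of running it (example coordinates are an assumption).
cmd = launch_args("spark-shell", packages=["com.databricks:spark-xml_2.12:0.15.0"])
print(shlex.join(cmd))  # spark-shell --packages com.databricks:spark-xml_2.12:0.15.0
```

Passing the resulting list to subprocess.run would start the shell with those libraries on its classpath; building a list rather than a single string avoids shell-quoting problems with paths that contain spaces.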

======================================

Blog link to learn more on Spark:

LinkedIn profile:

FB page:


Spark With Databricks | Best Way to Install External Libraries/Packages With Demo | Learntospark

53. Workspace packages for Apache Spark Pool in Azure Synapse Analytics

Install Apache PySpark on Windows PC | Apache Spark Installation Guide

Learn Apache Spark in 10 Minutes | Step by Step Guide

54 requirements.txt File to Manage libraries for Apache Spark pool in Azure Synapse Analytics

52. Manage Library Packages for Apache Spark in Azure Synapse Analytics

Making Apache Spark™ Better with Delta Lake

Apache Spark - Install Apache Spark 3.x On Windows 10 |Spark Tutorial

PySpark Tutorial | PySpark Tutorial For Beginners | Apache Spark With Python Tutorial | Simplilearn

Install Python libraries for Apache Spark in Azure Synapse Analytics

Apache Spark - Install Apache Spark 3.x On Ubuntu |Spark Tutorial

Install Apache Spark 2.X - Quick Setup

Installing The Ultimate Spark Learning Environment | Spark Tutorial #2

Portable Scalable Data Visualization Techniques for Apache Spark and Python Notebook-based Analytics

What are the main libraries of Apache Spark?

Running First PySpark Application in PyCharm IDE with Apache Spark 2.3.0 | DM | DataMaking

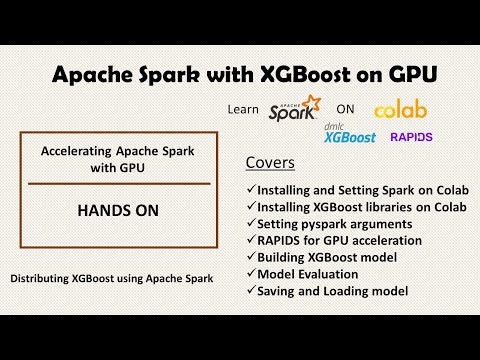

Machine Learning with Apache Spark and XGBoost on GPU

Installation of Apache Spark on Windows 11 (in 5 minutes)

Spark multinode environment setup on yarn

Apache Spark - Install Apache Spark On Ubuntu |Spark Tutorial | Part 6

Integrating Apache Spark with MongoDB | Read/Write MongoDB data using PySpark

01 - Install Apache Spark Using Docker | Docker | Apache Spark

How to Install Apache Spark on Windows | Big Data Tools
