filmov

tv

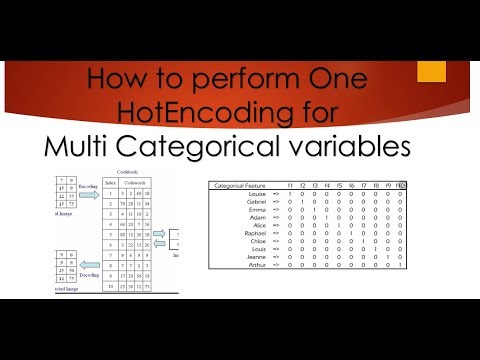

Encoding Data with One Hot Encoder in Data Preprocessing | Data Science ML (Lecture #6)


What happens when your dataset includes categories like ‘red’, ‘blue’, or ‘green’—and your machine learning model only understands numbers? In this sixth lecture of our Data Science & Machine Learning series, we unravel the power of One Hot Encoding, a cornerstone technique for transforming categorical data into a format that algorithms like regression, neural networks, and tree-based models can digest.

We’ll demystify why simple label encoding falls short for nominal data, walk through Python implementations using Pandas and Scikit-learn’s OneHotEncoder, and tackle challenges like the curse of dimensionality and sparse matrices. From handling binary features to managing high-cardinality categories (e.g., zip codes or product IDs), this session will equip you to encode data efficiently while preserving critical information.

Key Takeaways:

Why encoding matters: The risks of mishandling categorical variables in ML pipelines.

One Hot Encoding vs. alternatives: Label encoding, ordinal encoding, and feature hashing.

Balancing simplicity and complexity: Avoiding overfitting with high-dimensional sparse data.

Real-world applications: Case studies in retail (product categories), healthcare (diagnosis codes), and NLP (text tokenization).

Join us to master this essential preprocessing skill—and ensure your models never misinterpret a category again!
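Feature hashing, listed among the alternatives above, can be sketched in a few lines of plain Python. This is a hand-rolled illustration of the idea, not Scikit-learn's implementation. The `hash_encode` helper, the bucket count, and the sample zip codes are all hypothetical.

```python
import hashlib

def hash_encode(value: str, n_buckets: int = 8) -> list:
    """Map a category string to a fixed-length indicator vector via hashing."""
    # A stable hash (unlike Python's built-in hash()) gives the same bucket
    # on every run.
    digest = hashlib.md5(value.encode("utf-8")).hexdigest()
    index = int(digest, 16) % n_buckets
    vector = [0] * n_buckets
    vector[index] = 1
    return vector

# Hypothetical high-cardinality feature: zip codes.
zip_codes = ["10001", "94105", "60614", "10001"]
hashed = [hash_encode(z) for z in zip_codes]
```

The vector length stays at `n_buckets` no matter how many distinct zip codes appear, which caps dimensionality. The trade-off is that two different categories can collide in the same bucket.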

Variations for Audience/Purpose:

For Beginners: Add "No prior encoding experience needed—start with basic binary features and build to advanced workflows!"

For Advanced Learners: Include "Advanced topics: Embedding layers for high-cardinality data, trade-offs with target encoding, and sparse matrix optimizations."

For Industry Focus: Add "See how companies like Airbnb and Uber encode categorical features for recommendation systems and dynamic pricing models."

Bonus Customization Tips:

Tools: Mention integrations with TensorFlow/Keras for deep learning workflows.

Use Cases: Highlight domain-specific examples (e.g., "Encoding user demographics in marketing analytics").

Engagement Hook: "Ever trained a model that treated ‘dog’ as closer to ‘cat’ than ‘elephant’? We’ll fix that!"
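The hook above can be made concrete with a small distance check. This sketch assumes an arbitrary alphabetical label assignment (cat=0, dog=1, elephant=2). The helper names are hypothetical.

```python
import math

animals = ["cat", "dog", "elephant"]

# Label encoding: one integer per category, in arbitrary (alphabetical) order.
label_codes = {name: i for i, name in enumerate(animals)}

# One hot encoding: one indicator position per category.
one_hot = {
    name: [1 if i == j else 0 for j in range(len(animals))]
    for i, name in enumerate(animals)
}

def label_distance(a: str, b: str) -> int:
    """Absolute difference between the integer codes of two categories."""
    return abs(label_codes[a] - label_codes[b])

def one_hot_distance(a: str, b: str) -> float:
    """Euclidean distance between the one hot vectors of two categories."""
    return math.dist(one_hot[a], one_hot[b])
```

Under these codes, `label_distance("cat", "dog")` is 1 while `label_distance("cat", "elephant")` is 2, so a distance-based model would treat cat as closer to dog purely because of the numbering. With one hot vectors, every pair of distinct categories is the same distance apart (the square root of 2).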


