
Robust Data Processing Pipeline with Elixir and Flow - Laszlo Bacsi - ElixirConf EU 2018


It doesn't matter whether you have "big data" or "small data": if you need to import and process it in near real time, you want a system that is robust and maintainable. This is where the fault tolerance and scalability of Erlang/OTP, the expressiveness of Elixir, and the flexibility of Flow and GenStage are all great assets. This is the story of how we built a data pipeline to move and process billions of rows from MySQL and CSV files into Redshift, and what we learned along the way.
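GenStage and Flow are built around demand-driven processing: a downstream stage only receives as much data as it can handle, so a fast reader cannot flood a slow loader. The sketch below illustrates that idea outside Elixir, in Python, using a bounded `queue.Queue` whose blocking `put()` acts as back-pressure between a CSV-reading producer and a consumer that groups rows into batches (for example, one bulk load per batch). It is a minimal sketch under stated assumptions, not the speaker's implementation: the function names (`produce`, `batch`, `run_pipeline`), the batch size, the in-flight limit, and the in-memory CSV input are all hypothetical, and a blocking bounded queue is a simpler stand-in for GenStage's explicit demand protocol.

```python
import csv
import io
import queue
import threading
from typing import Iterable, Iterator, List

# Sentinel object marking the end of the row stream.
_DONE = object()


def produce(rows: Iterable[dict], out: "queue.Queue[object]") -> None:
    """Producer stage (hypothetical): push rows downstream.

    put() blocks while the bounded queue is full, so the reader can never
    get more than `maxsize` rows ahead of the consumer (back-pressure).
    """
    for row in rows:
        out.put(row)
    out.put(_DONE)


def batch(inp: "queue.Queue[object]", size: int) -> Iterator[List[dict]]:
    """Consumer stage (hypothetical): group incoming rows into fixed-size batches."""
    buf: List[dict] = []
    while True:
        item = inp.get()
        if item is _DONE:
            break
        buf.append(item)
        if len(buf) == size:
            yield buf
            buf = []
    # Flush a final, partially filled batch.
    if buf:
        yield buf


def run_pipeline(csv_text: str, batch_size: int = 2, max_in_flight: int = 4) -> List[List[dict]]:
    """Wire the two stages together and collect all batches."""
    rows = csv.DictReader(io.StringIO(csv_text))
    q: "queue.Queue[object]" = queue.Queue(maxsize=max_in_flight)
    producer = threading.Thread(target=produce, args=(rows, q), daemon=True)
    producer.start()
    batches = list(batch(q, batch_size))
    producer.join()
    return batches


if __name__ == "__main__":
    data = "id,name\n1,a\n2,b\n3,c\n"
    for b in run_pipeline(data, batch_size=2):
        print(b)
```

With three data rows and `batch_size=2`, the consumer emits one full batch of two rows followed by a final batch of one. Note that if the producer thread crashed, this consumer would wait forever; detecting and restarting failed stages is exactly the kind of supervision the description attributes to Erlang/OTP.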


Building a Robust Data Pipeline with the 'dag Stack': dbt, Airflow, and Great Expectations

What is Data Pipeline | How to design Data Pipeline ? - ETL vs Data pipeline (2024)

Building Robust and Scalable Data Pipelines with Kafka

What is Data Pipeline? | Why Is It So Popular?

Robust Foundation for Data Pipelines at Scale - Lessons from Netflix

Building a robust data pipeline with dbt, Airflow, and Great Expectations

AWS re:Invent 2015 | (CMP310) Robust Data Processing Pipelines Using Containers & Spot Instances

Data Preprocessing in AI/ML - Part 2: A Guide for AI Enthusiasts (All about AI) - Machine Learning

Building Robust Data Pipelines for Modern Data Engineering | End to End Data Engineering Project

Paul Brebner - Building and Scaling a Robust Zero-Code Data Pipeline With Open Source Technologies

Best Practices for Building Robust Data Platform with Apache Spark and Delta

Beam Summit 2021-Leveraging Beam's Batch-Mode for Robust Recoveries and Late-Data Processing

Operationalizing Big Data Pipelines At Scale

How To DESIGN YOUR First DATA PIPELINE ??🔥 15 Minutes BASIC STEPS

Jiaqi Liu - Building a Data Pipeline with Testing in Mind - PyCon 2018

Designing the Next Generation of Data Pipelines at Zillow with Apache Spark

Data to Deployment - Crafting a Robust Machine Learning Pipeline

[Tech Talk] Enhancing Apache Spark for robust data processing

What is a Data Pipeline Engineer?

Reduce Update Latency and Build a Robust Pipeline Architecture for Ingesting at Scale

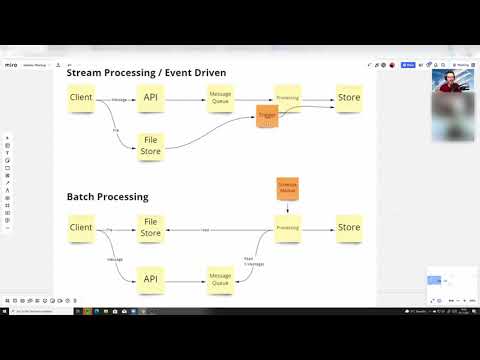

Stream vs Batch processing explained with examples

Data Pipeline Lifecycle: SQL Everywhere

Let’s Make Your Data Pipeline Robust With Great Expectations!|Keisuke Nishitani|PyCon Taiwan 2021...
