Tariq Rashid - A Gentle Introduction to Neural Networks and making your own with Python


PyData London 2016

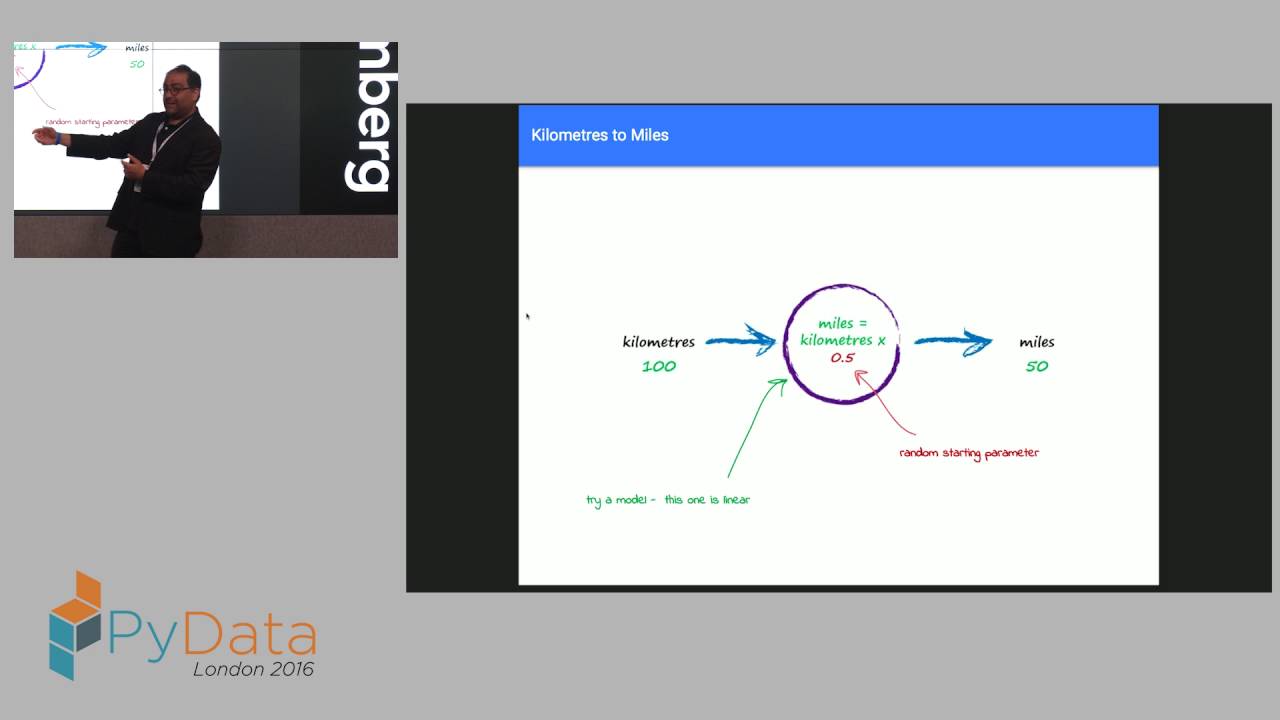

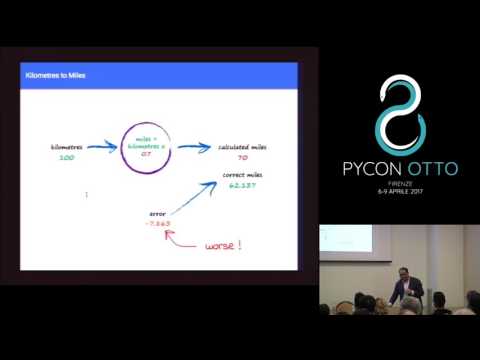

Neural networks are not only a powerful data science tool, they're at the heart of recent breakthroughs in deep learning and artificial intelligence.

This talk, designed for complete beginners and anyone non-technical, will introduce the history and ideas behind neural networks. You won't need anything more than basic school maths. We'll gently build our own neural network in Python too.

Ideas: - the search for intelligent machines, what's easy for us is not easy for computers.

DIY: - MNIST dataset - simple 3-layer network - matrix multiplication to aid calculations - preprocessing, priming weights - 95% accuracy with very simple code! - improvements lead to 98% accuracy!
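To give a feel for the DIY items above, here is a minimal sketch of the forward pass of a 3-layer network, written with only the Python standard library. It is not the code from the talk: the 100 hidden nodes, the sigmoid activation, the 0.01 to 1.0 pixel scaling, and the 1/sqrt(incoming links) weight spread are all assumptions chosen for illustration, and the "image" is random noise rather than real MNIST data. Only the 784 inputs (28 x 28 pixels) and 10 outputs (digits 0 to 9) follow from the dataset itself. Training is left out, so the sketch makes no attempt to reproduce the accuracy figures.

```python
import math
import random


def scale_pixels(pixels):
    """Preprocessing (assumed): map raw 0-255 pixel values into 0.01-1.0."""
    return [(p / 255.0) * 0.99 + 0.01 for p in pixels]


def init_weights(rows, cols, rng):
    """Priming weights (assumed scheme): small random values whose spread
    shrinks as the number of incoming links (cols) grows."""
    spread = cols ** -0.5
    return [[rng.gauss(0.0, spread) for _ in range(cols)] for _ in range(rows)]


def matvec(matrix, vector):
    """Matrix-vector product: one weighted sum per row of the matrix."""
    return [sum(w * x for w, x in zip(row, vector)) for row in matrix]


def sigmoid(x):
    """Squash a weighted sum into the open interval (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))


def forward(w_input_hidden, w_hidden_output, inputs):
    """Pass signals through the input -> hidden -> output layers."""
    hidden = [sigmoid(s) for s in matvec(w_input_hidden, inputs)]
    return [sigmoid(s) for s in matvec(w_hidden_output, hidden)]


rng = random.Random(0)  # fixed seed so the sketch is repeatable

# 784 inputs and 10 outputs match MNIST; 100 hidden nodes is an assumption.
n_input, n_hidden, n_output = 784, 100, 10
w_ih = init_weights(n_hidden, n_input, rng)
w_ho = init_weights(n_output, n_hidden, rng)

# Stand-in for one image: random pixel values, NOT real MNIST data.
fake_image = [rng.randint(0, 255) for _ in range(n_input)]

outputs = forward(w_ih, w_ho, scale_pixels(fake_image))
predicted_digit = outputs.index(max(outputs))  # index of the strongest output
```

Because the weights are untrained, `predicted_digit` here is arbitrary. The point is only that each layer reduces to one matrix multiplication followed by an activation function.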

