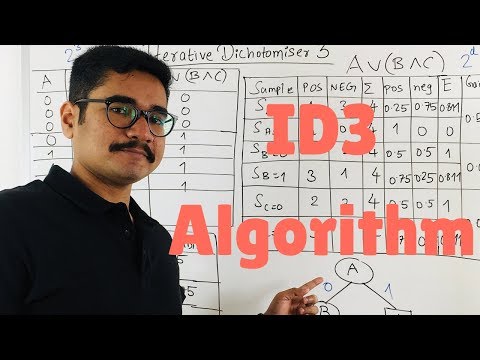

3 Decision Tree | ID3 Algorithm | Solved Numerical Example by Mahesh Huddar

3 Decision Tree – ID3 Algorithm Solved Numerical Example by Mahesh Huddar

id3 algorithm decision tree,

id3 algorithm in machine learning,

decision tree in ML,

decision tree solved example,

decision tree numerical example solved,

id3 algorithm in data mining,

id3 algorithm decision tree in data mining,

id3 algorithm decision tree python,

id3 algorithm decision tree in machine learning,

id3 algorithm example,

id3 algorithm in data mining with an example,

id3 in data mining,

decision tree problem,

decision tree problem in big data analytics,

decision tree machine learning,

decision tree,

decision tree in data mining,

decision tree analysis,

decision tree by Mahesh Huddar,

Lec-10: Decision Tree 🌲 ID3 Algorithm with Example & Calculations 🧮

1. Decision Tree | ID3 Algorithm | Solved Numerical Example | by Mahesh Huddar

2 Decision Tree | ID3 Algorithm | Solved Numerical Example by Mahesh Huddar

ID3 Decision tree Learning Algorithm | ID3 Algorithm | Decision Tree Algorithm Example Mahesh Huddar

DECISION TREE- Part 3 (ID3 Algorithm) - Solved Example

ID3 Algorithm to Build Decision Tree Buys Computer Solved Example in Machine Learning Mahesh Huddar

Decision Tree Classification Clearly Explained!

84. The Decision Tree ID3 algorithm from scratch Part 3

Decision Tree ID3 Algorithm | Decision Tree | ID3 Algorithm | Machine Learning | 2024| Simplilearn

6 Decision Trees ID3 Solved

IAML7.3 Quinlan's ID3 algorithm

Machine Learning #38 - Entscheidungsbäume #2 - Der ID3-Algorithmus

Iterative Dichotomiser 3

Decision tree Learning example | ID3 | Artificial intelligence | Lec-50 | Bhanu Priya

10 Decision Tree Classification - ID3 (Iterative Dichotomiser) Algorithm - Part 3

7 Decision Trees ID3 Multi-class classification Solved

Part III: Decision Tree Algorithm, ID3, Problem Solved, Info. Gain, Data Mining, Machine Learning

ID3 Decision Tree Learning Inductive Bias | Inductive bias of ID3 | Occam's razor ID3 Mahesh Hu...

1.6 Decision Tree using ID3 Algorithm

Decision Tree Algorithm _ Iterative Dichotomiser -3 (ID3) with examples

Machine Learning | ID3 Algorithm

Decision Tree, ID3 Algorithm information Gain, Entropy

KI #11 ID3 Decission Tree / Entscheidungsbaum
