Generative Adversarial Networks

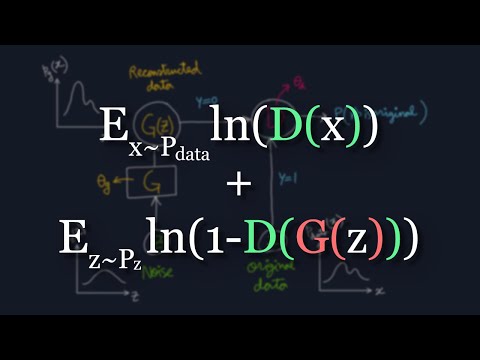

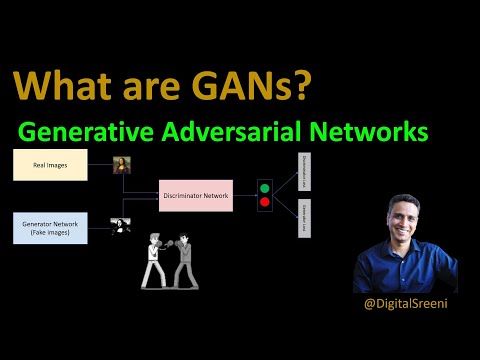

A lecture on Generative Adversarial Networks (GANs). We discuss generative modeling, latent spaces, semantically meaningful arithmetic in latent space, the minimax optimization formulation for GANs and the theory behind it, the Earth mover's distance, Wasserstein GANs, and challenges of GANs.

This lecture is from Northeastern University's CS 7150 Summer 2020 class on Deep Learning, taught by Paul Hand.

References:

Goodfellow et al. 2014:

Goodfellow, Ian, Jean Pouget-Abadie, Mehdi Mirza, Bing Xu, David Warde-Farley, Sherjil Ozair, Aaron Courville, and Yoshua Bengio. "Generative adversarial nets." In Advances in neural information processing systems, pp. 2672-2680. 2014.

Radford et al. 2016:

Radford, Alec, Luke Metz, and Soumith Chintala. "Unsupervised representation learning with deep convolutional generative adversarial networks." arXiv preprint arXiv:1511.06434 (2015).

Ulyanov et al. 2018:

Ulyanov, Dmitry, Andrea Vedaldi, and Victor Lempitsky. "It takes (only) two: Adversarial generator-encoder networks." In Thirty-Second AAAI Conference on Artificial Intelligence. 2018.

Karras et al. 2018:

Karras, Tero, Timo Aila, Samuli Laine, and Jaakko Lehtinen. "Progressive growing of GANs for improved quality, stability, and variation." arXiv preprint arXiv:1710.10196 (2017).

Lucas et al. 2018:

Lucas, Alice, Michael Iliadis, Rafael Molina, and Aggelos K. Katsaggelos. "Using deep neural networks for inverse problems in imaging: beyond analytical methods." IEEE Signal Processing Magazine 35, no. 1 (2018): 20-36.

Arjovsky et al. 2017:

Arjovsky, Martin, Soumith Chintala, and Léon Bottou. "Wasserstein GAN." arXiv preprint arXiv:1701.07875 (2017).

Park et al. 2020:

Park, Sung-Wook, Jun-Ho Huh, and Jong-Chan Kim. "BEGAN v3: Avoiding Mode Collapse in GANs Using Variational Inference." Electronics 9, no. 4 (2020): 688.
