Sebastian Borgeaud - Efficient Training of Large Language Models @ UCL DARK

(there is a lag in sound until 2:15)

Invited talk by Sebastian Borgeaud on September 1, 2022 at UCL DARK.

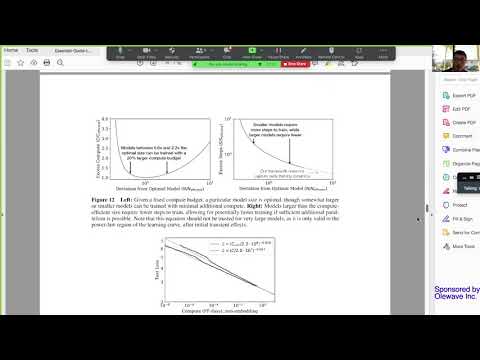

Abstract: Large language models have become ubiquitous in many areas of deep learning research; however, little is known today about how to train these models efficiently. In this talk, I’ll cover some recent advances in large language model pre-training, focusing on how they reduce the compute required to train these models. In particular, I’ll focus on our work revisiting the “neural scaling laws”, which showed that previous large language models were too large for their compute budget, and our work on RETRO, where we enhance auto-regressive large language models with retrieval over a large database of text.

Bio: Sebastian Borgeaud is a Research Engineer at DeepMind, where he co-leads the large-scale language modeling team. Sebastian’s research focuses on large language models (Gopher, Chinchilla, Perceiver, Flamingo and RETRO) and, more generally, large-scale deep learning. Before joining DeepMind in 2018, Sebastian completed his undergraduate and master's degrees at the University of Cambridge, with a focus on theoretical computer science and NLP.
