Advancing Spark - JSON Schema Drift with Databricks Autoloader

We've come full circle - the whole idea of lakes was that you could land data without worrying about the schema, but the move towards more managed, governed lakes using Delta has meant we need to apply a schema again... so how do we balance evolving schemas with the need for managed structures?

The new schema drift features in Databricks Autoloader take a decent stab at this problem - when reading from JSON sources, we can now pull the attributes we want into a known schema, but keep everything else as a JSON string that we can then extract further details from. In this week's video, Simon takes a look at the new feature, how it works and one or two of its limitations.
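To make the idea of "known schema plus everything else as a JSON string" concrete, here is a minimal plain-Python sketch. It is not Autoloader's API and does not need Spark: the `split_record` helper, the `KNOWN_COLUMNS` mapping and the sample field names are all hypothetical, chosen only for illustration. The catch-all column is called `_rescued_data` here to echo the rescued data column that Autoloader produces, and the sketch assumes that values whose type does not match the known schema are also moved into that column.

```python
import json

# Hypothetical known schema: the attributes we want as typed columns.
KNOWN_COLUMNS = {"id": int, "name": str}


def split_record(raw_json, known_columns=KNOWN_COLUMNS, rescued_name="_rescued_data"):
    """Split one JSON document into known columns plus a JSON string of leftovers."""
    record = json.loads(raw_json)
    row = {}
    rescued = {}
    for key, value in record.items():
        expected = known_columns.get(key)
        if expected is not None and isinstance(value, expected):
            # Attribute is in the known schema and has the expected type.
            row[key] = value
        else:
            # Unknown attribute, or a type mismatch: keep it rather than drop it.
            rescued[key] = value
    # Known columns missing from this document become None (null).
    for key in known_columns:
        row.setdefault(key, None)
    # Everything else is kept as a single JSON string, or None if nothing was left over.
    row[rescued_name] = json.dumps(rescued, sort_keys=True) if rescued else None
    return row


row = split_record('{"id": 1, "name": "widget", "colour": "red"}')
extra = json.loads(row["_rescued_data"])  # further details can be extracted later
```

Because the leftovers stay as a string, a new attribute arriving in the source does not break the managed table; it can be parsed out later once it is worth promoting to a real column.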

As always, don't forget to like & subscribe!

Advancing Spark - Runtime 8 2 and Advanced Schema Evolution

Pyspark Scenarios 21 : Dynamically processing complex json file in pyspark #complexjson #databricks

Advancing Spark - The Photon Whitepaper

Advancing Spark - Delta Merging with Structured Streaming Data

AWS Glue PySpark: Flatten Nested Schema (JSON)

How to create Schema Dynamically? | Databricks Tutorial | PySpark |

So you think you understand JSON Schema? - Ben Hutton, Postman/JSON Schema

Advancing Spark - Give your Delta Lake a boost with Z-Ordering

Advancing Spark - Getting hands-on with Delta Cloning

Advancing Spark - Dynamic Data Decryption

95. Databricks | Pyspark | Schema | Different Methods of Schema Definition

Working with JSON in PySpark - The Right Way

flatten nested json in spark | Lec-20 | most requested video

46. from_json() function to convert json string into StructType in Pyspark | Azure Databricks #spark

Advancing Spark - Autoloader Resource Management

Advancing Spark - Databricks Delta Live Tables First Look

Advancing Spark - Your Delta & Spark Q&A (SQLBits 2020 Part 1)

Advancing Spark - Building Delta Live Table Frameworks

Easy JSON Data Manipulation in Spark - Yin Huai (Databricks)

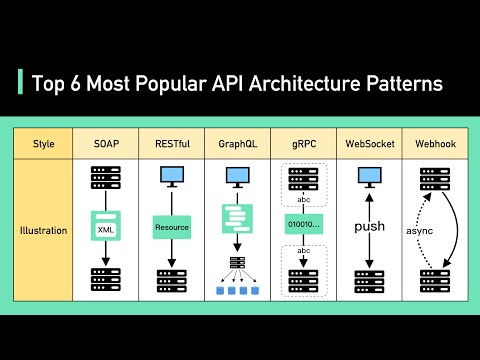

Top 6 Most Popular API Architecture Styles

Advancing Spark - Rethinking ETL with Databricks Autoloader

Working PySpark with JSON file | How to work with JSON file using Spark | dr.dataspark

JSON Schema in Production - #1 Chuck Reeves at Zones
