Derivative of Cost function for Logistic Regression | Machine Learning

We will compute the derivative of the cost function for logistic regression. Implementing the gradient descent algorithm in machine learning requires this derivative. Computing it can be difficult if you are new to derivatives and calculus, but if we go step by step, the derivative of the logistic regression cost function is straightforward to work out.
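
For reference, here is the standard step-by-step derivation. It assumes the usual setup, and the notation may differ from the video's: a sigmoid hypothesis $h_\theta(x) = \sigma(\theta^T x)$, labels $y^{(i)} \in \{0, 1\}$, and the binary cross-entropy (log-loss) cost averaged over $m$ training examples. Both intermediate derivatives follow from the chain rule with $z = \theta^T x$ and $\partial z / \partial \theta_j = x_j$.

```latex
\sigma(z) = \frac{1}{1 + e^{-z}}, \qquad \sigma'(z) = \sigma(z)\,\bigl(1 - \sigma(z)\bigr)

J(\theta) = -\frac{1}{m} \sum_{i=1}^{m} \Bigl[ y^{(i)} \log h_\theta(x^{(i)}) + \bigl(1 - y^{(i)}\bigr) \log\bigl(1 - h_\theta(x^{(i)})\bigr) \Bigr]

\frac{\partial}{\partial \theta_j} \log h_\theta(x) = \bigl(1 - h_\theta(x)\bigr)\, x_j, \qquad
\frac{\partial}{\partial \theta_j} \log\bigl(1 - h_\theta(x)\bigr) = -h_\theta(x)\, x_j

\frac{\partial J}{\partial \theta_j}
  = -\frac{1}{m} \sum_{i=1}^{m} \Bigl[ y^{(i)} \bigl(1 - h_\theta(x^{(i)})\bigr) - \bigl(1 - y^{(i)}\bigr) h_\theta(x^{(i)}) \Bigr] x_j^{(i)}
  = \frac{1}{m} \sum_{i=1}^{m} \bigl( h_\theta(x^{(i)}) - y^{(i)} \bigr) x_j^{(i)}
```

The last step uses $y(1 - h) - (1 - y)h = y - h$. The result has the same form as the gradient for linear regression, except that $h_\theta$ is now the sigmoid of $\theta^T x$.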

It helps us minimize the logistic regression cost function and thus improve our model's accuracy.
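
Below is a minimal NumPy sketch of how this derivative is used in gradient descent. It assumes a design matrix `X` whose first column is all ones (for the intercept), labels `y` in {0, 1}, and the log-loss cost. The helper names (`sigmoid`, `cost`, `gradient`, `numerical_gradient`, `gradient_descent`) and the toy data are hypothetical and do not come from the video. The gradient is written in the vectorized form $\frac{1}{m} X^T (h - y)$ with $h = \sigma(X\theta)$, and a finite-difference check compares it against the cost numerically.

```python
import numpy as np


def sigmoid(z):
    """Logistic function: sigma(z) = 1 / (1 + exp(-z))."""
    return 1.0 / (1.0 + np.exp(-z))


def cost(theta, X, y, eps=1e-12):
    """Average binary cross-entropy J(theta) over the m rows of X."""
    h = np.clip(sigmoid(X @ theta), eps, 1.0 - eps)  # avoid log(0)
    return -np.mean(y * np.log(h) + (1.0 - y) * np.log(1.0 - h))


def gradient(theta, X, y):
    """Analytic derivative: dJ/dtheta = (1/m) * X^T (h - y)."""
    m = X.shape[0]
    h = sigmoid(X @ theta)
    return X.T @ (h - y) / m


def numerical_gradient(theta, X, y, delta=1e-6):
    """Central finite differences, used only to sanity-check gradient()."""
    grad = np.zeros_like(theta)
    for j in range(theta.size):
        step = np.zeros_like(theta)
        step[j] = delta
        grad[j] = (cost(theta + step, X, y) - cost(theta - step, X, y)) / (2 * delta)
    return grad


def gradient_descent(X, y, alpha=0.5, iterations=2000):
    """Batch gradient descent: theta := theta - alpha * dJ/dtheta."""
    theta = np.zeros(X.shape[1])
    for _ in range(iterations):
        theta -= alpha * gradient(theta, X, y)
    return theta


# Made-up toy data: a column of ones (intercept) plus one feature.
X = np.array([[1.0, 0.5], [1.0, 1.5], [1.0, 2.5], [1.0, 3.5]])
y = np.array([0.0, 0.0, 1.0, 1.0])

theta = gradient_descent(X, y)
print("cost at theta = 0:", cost(np.zeros(2), X, y))  # ln 2, about 0.693
print("cost after descent:", cost(theta, X, y))
```

The finite-difference check is a cheap way to confirm a hand-derived gradient before trusting it inside gradient descent. The learning rate and iteration count here are arbitrary choices for this toy data, not recommended values.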

➖➖➖➖➖➖➖➖➖➖➖➖➖➖➖

This is Your Lane to Machine Learning ⭐

➖➖➖➖➖➖➖➖➖➖➖➖➖➖➖


Marginal cost & differential calculus | Applications of derivatives | AP Calculus AB | Khan Acad...

Derivatives of Cost function for linear regression

Logistic Regression Cost Function | Machine Learning | Simply Explained

How to Derive a Cobb-Douglas Cost Function

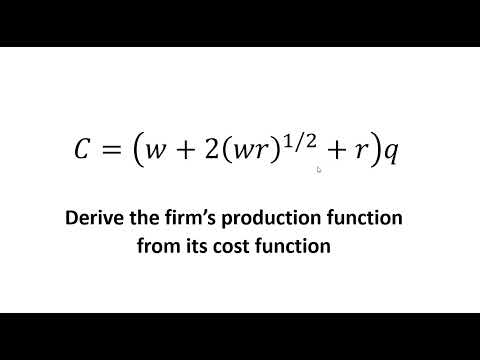

Derive a Production Function from Cost function

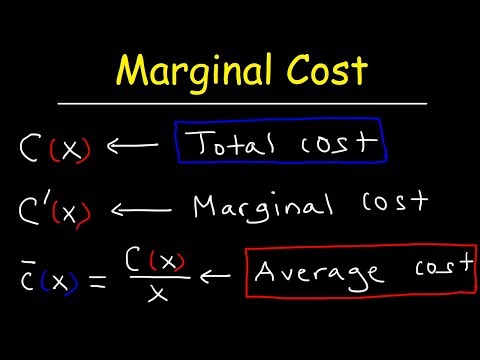

Calculus - Marginal cost

derivative of cost function for Logistic Regression (7 Solutions!!)

Ex: Derivative Application - Maximize Profit

Ex: Find the Average Cost Function and Minimize the Average Cost

How to Derive Marginal Cost Equations

#21 Business Mathematics | Application of Differentiation : Cost Function | TC, MC, AC | B.COM | BBA

Wk-11 Derivatives: Optimization - Cost/Revenue/Profit

Gradient Descent Algorithm | The minima | Derivative of cost function | Weight Update Equation

Marginal Cost and Average Total Cost

Understanding how we calculate the derivative of the Cost Function in NN

Derivation of Total Cost Function from Production Function

Marginal Cost Function from Average Cost Function: Differentiation Rules

Machine Learning Tutorial Python - 4: Gradient Descent and Cost Function

Minimize a Cost Function Using the First Derivative Test

Basics of Deep Learning Part 6: Backpropagation explained – Cost Function and Derivatives

Math 1A 2.6 Example 6 Derivative of a cost function

Cost functions: TC to MC, TC=FC+VC. Math with context.

Ex: Determine Total Cost and Marginal Cost (No Derivative)
