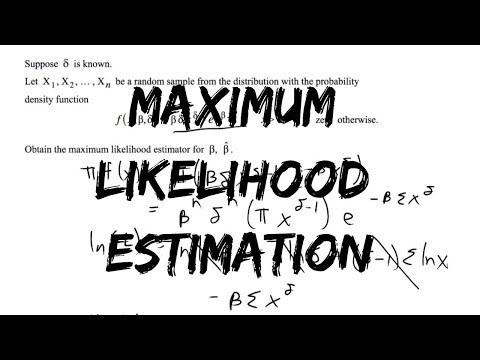

L20.10 Maximum Likelihood Estimation Examples

MIT RES.6-012 Introduction to Probability, Spring 2018

Instructor: John Tsitsiklis

License: Creative Commons BY-NC-SA
