filmov

tv

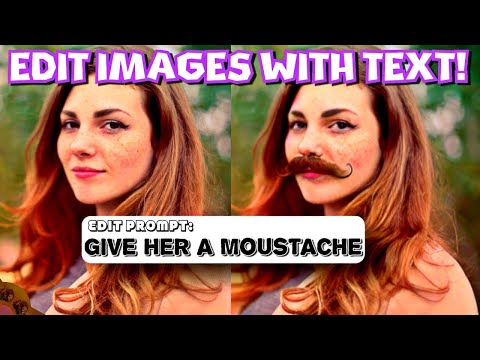

InstructPix2Pix Explained - Edit Images with Words!


Join me as I explain the core idea behind 'InstructPix2Pix', which lets you edit images using natural language instructions!

The thumbnail was generated with "Add sunglasses", "make it in a city at night" and "give him a leather jacket", which is far better than messing about with Photoshop :)
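The description does not spell out the mechanism. As background from the InstructPix2Pix paper, the model is a diffusion model conditioned on both the input image (c_I) and the text instruction (c_T). At sampling time it combines three noise predictions using two classifier-free guidance scales: s_I controls how closely the output follows the input image, and s_T controls how strongly it follows the instruction. The minimal sketch below shows only that combination step on plain Python lists. The function name and the default scales (1.5 and 7.5) are illustrative assumptions. A real implementation would apply the same arithmetic to latent tensors produced by a trained U-Net.

```python
from typing import List, Sequence


def instruct_pix2pix_guidance(
    eps_uncond: Sequence[float],  # e(z_t, no image, no instruction)
    eps_image: Sequence[float],   # e(z_t, image only)
    eps_full: Sequence[float],    # e(z_t, image and instruction)
    s_image: float = 1.5,         # image guidance scale s_I (illustrative default)
    s_text: float = 7.5,          # text guidance scale s_T (illustrative default)
) -> List[float]:
    """Hypothetical helper: dual classifier-free guidance, element by element.

    e~ = e(0,0) + s_I * (e(I,0) - e(0,0)) + s_T * (e(I,T) - e(I,0))
    """
    if not (len(eps_uncond) == len(eps_image) == len(eps_full)):
        raise ValueError("noise predictions must have the same length")
    return [
        # Start from the unconditional prediction, push toward the image,
        # then push further toward the instruction.
        u + s_image * (i - u) + s_text * (f - i)
        for u, i, f in zip(eps_uncond, eps_image, eps_full)
    ]


print(instruct_pix2pix_guidance([0.0, 1.0], [1.0, 1.0], [2.0, 3.0]))
```

With both scales set to 1, the formula collapses to the fully conditioned prediction. Raising s_image keeps the edit closer to the original photo, and raising s_text pushes harder toward the instruction.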


