filmov

tv

BERT: Pre-training of Deep Bidirectional Transformers for Language Understanding

Показать описание

Abstract:

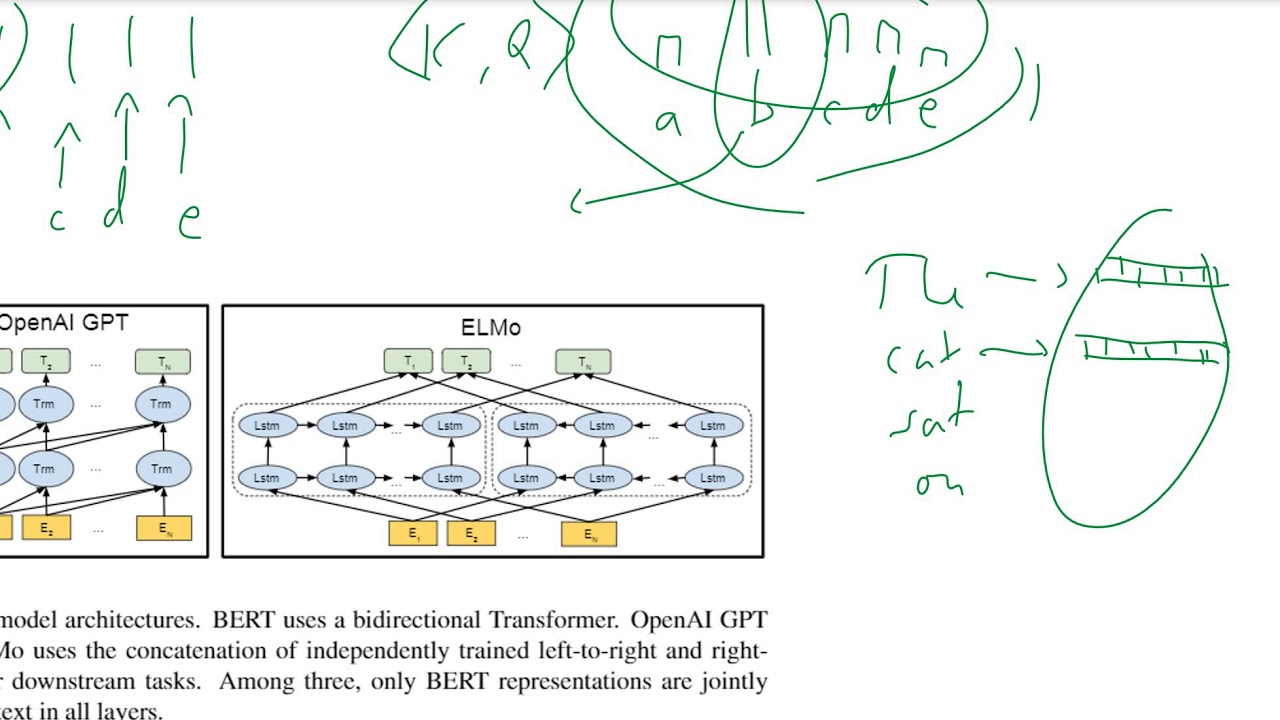

We introduce a new language representation model called BERT, which stands for Bidirectional Encoder Representations from Transformers. Unlike recent language representation models, BERT is designed to pre-train deep bidirectional representations by jointly conditioning on both left and right context in all layers. As a result, the pre-trained BERT representations can be fine-tuned with just one additional output layer to create state-of-the-art models for a wide range of tasks, such as question answering and language inference, without substantial task-specific architecture modifications.

BERT is conceptually simple and empirically powerful. It obtains new state-of-the-art results on eleven natural language processing tasks, including pushing the GLUE benchmark to 80.4% (7.6% absolute improvement), MultiNLI accuracy to 86.7 (5.6% absolute improvement) and the SQuAD v1.1 question answering Test F1 to 93.2 (1.5% absolute improvement), outperforming human performance by 2.0%.

Authors:

Jacob Devlin, Ming-Wei Chang, Kenton Lee, Kristina Toutanova

Комментарии

0:40:13

0:40:13

0:11:37

0:11:37

0:05:06

0:05:06

0:11:38

0:11:38

0:50:18

0:50:18

0:50:22

0:50:22

0:17:49

0:17:49

![[CW Paper-Club] BERT:](https://i.ytimg.com/vi/uyNArsMBW5Q/hqdefault.jpg) 0:52:49

0:52:49

0:27:24

0:27:24

0:09:45

0:09:45

0:02:15

0:02:15

1:04:06

1:04:06

![[LLM 101 Series]](https://i.ytimg.com/vi/r3GwaIrXTWs/hqdefault.jpg) 0:15:42

0:15:42

0:00:51

0:00:51

0:07:00

0:07:00

0:22:40

0:22:40

![[BERT] Pretranied Deep](https://i.ytimg.com/vi/BhlOGGzC0Q0/hqdefault.jpg) 0:53:07

0:53:07

1:12:30

1:12:30

![[DS Interface] BERT:](https://i.ytimg.com/vi/mADjlH6VUOc/hqdefault.jpg) 0:16:36

0:16:36

0:17:58

0:17:58

0:13:22

0:13:22

0:26:56

0:26:56

0:04:07

0:04:07

![[Paper Review] BERT:](https://i.ytimg.com/vi/2AvoJPhrOQY/hqdefault.jpg) 0:14:01

0:14:01